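Object detection is a computer vision technique for identifying and locating objects in images or video. Image localization is the process of pinpointing one or more objects with bounding boxes, the rectangles drawn around each object. It is sometimes confused with image classification (or image recognition), whose goal is to assign an entire image, or an object within it, to one of a set of classes.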

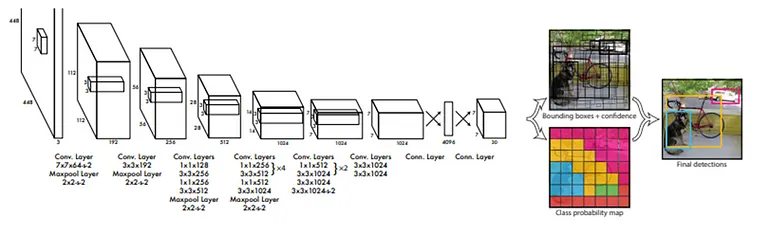

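The YOLO developers framed object detection as a regression problem rather than a classification task: a single convolutional neural network (CNN) predicts spatially separated bounding boxes and associates class probabilities with each detected object, as shown here.

Dividing the image into grid cells and associating probabilities with each detected object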
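As a rough illustration of the grid idea: in the original YOLO formulation the image is divided into an S × S grid, and the cell that contains an object's center is responsible for predicting that object. The Dart sketch below only computes that cell. `GridCell`, `gridCellFor`, and the 448 × 448 image with a 7 × 7 grid are illustrative choices, and later versions such as YOLOv8 refine this scheme.

```dart
import 'dart:math';

/// Row and column of one cell in the detection grid.
class GridCell {
  const GridCell(this.row, this.column);
  final int row;
  final int column;
}

/// Returns the grid cell that contains the center of a bounding box, for an
/// image split into [gridSize] x [gridSize] cells.
/// Hypothetical helper for illustration; not part of any YOLO library.
GridCell gridCellFor({
  required double centerX,
  required double centerY,
  required double imageWidth,
  required double imageHeight,
  required int gridSize,
}) {
  final cellWidth = imageWidth / gridSize;
  final cellHeight = imageHeight / gridSize;
  // Clamp so a center lying exactly on the right or bottom edge still maps
  // to the last cell instead of falling outside the grid.
  final column = min((centerX / cellWidth).floor(), gridSize - 1);
  final row = min((centerY / cellHeight).floor(), gridSize - 1);
  return GridCell(row, column);
}

void main() {
  // An object centered at (320, 100) in a 448 x 448 image with a 7 x 7 grid.
  final cell = gridCellFor(
    centerX: 320,
    centerY: 100,
    imageWidth: 448,
    imageHeight: 448,
    gridSize: 7,
  );
  print('row ${cell.row}, column ${cell.column}'); // row 1, column 5
}
```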

YOLO leads the competition for several reasons:

- Speed

- Detection accuracy

- Good generalization

- Open source

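To deploy a YOLO model in a Flutter app that recognizes objects through the phone camera:

1. Create a new project and set up your environment:

In android/app/build.gradle, add the following settings inside the android block.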

    android {
        aaptOptions {
            noCompress 'tflite'
            noCompress 'lite'
        }
    }

In the same file, android/app/build.gradle, set "minSdkVersion", "targetSdkVersion", and "compileSdkVersion" in the android block as shown below:

android/app/build.gradle --> android block

In android/build.gradle, set "ext.kotlin_version" in the buildscript block as follows:

    buildscript {
        ext.kotlin_version = '1.7.10'
        repositories {
            google()
            mavenCentral()
        }
    }

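2. Add your YOLOv8 model to the project:

The model must be exported in ".tflite" format so that it can be deployed on edge devices such as phones. If you want to train a custom YOLOv8 model in Python and export it as .tflite rather than .pt, you must follow the instructions here. I will use a pretrained YOLOv8 object detection model here for testing. In your Flutter project:

(1) Create an assets folder and put the labels file and the model file in it. In pubspec.yaml, add: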

    flutter:
      assets:
        - assets/labels.txt
        - assets/yolov8n.tflite
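The labels file is assumed here to be plain text with one class name per line, in the same order as the model's class indices. Under that assumption, a small pure-Dart helper (the name `parseLabels` is hypothetical) shows how such a file maps indices to names. In a Flutter app the text itself would typically be read with `rootBundle.loadString('assets/labels.txt')`.

```dart
/// Splits the contents of a labels file into class names.
/// Assumes one label per line; blank lines and surrounding whitespace
/// (including Windows line endings) are dropped.
List<String> parseLabels(String fileContents) {
  return fileContents
      .split('\n')
      .map((line) => line.trim())
      .where((line) => line.isNotEmpty)
      .toList();
}

void main() {
  const sample = 'person\nbicycle\ncar\n';
  final labels = parseLabels(sample);
  // Class index 2 corresponds to the third line of the file.
  print(labels[2]); // car
}
```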

(2) Import the required packages, flutter_vision and camera (both also need to be listed under dependencies in pubspec.yaml):

    import 'package:flutter/material.dart';
    import 'package:flutter_vision/flutter_vision.dart';
    import 'package:camera/camera.dart';

3. Start coding:

(1) Declare the list of available cameras in your project as follows:

    late List<CameraDescription> camerass;

(2) Create the "YoloVideo" class:

    class YoloVideo extends StatefulWidget {
      const YoloVideo({Key? key}) : super(key: key);

      @override
      State<YoloVideo> createState() => _YoloVideoState();
    }

    class _YoloVideoState extends State<YoloVideo> {
      // The members from steps (3) to (8) go here.
    }

(3) In the state class of "YoloVideo", declare the required variables:

    late CameraController controller;
    late FlutterVision vision;
    // Start with an empty list so build() can read it before the first detection.
    List<Map<String, dynamic>> yoloResults = [];
    CameraImage? cameraImage;
    bool isLoaded = false;
    bool isDetecting = false;
    double confidenceThreshold = 0.5;
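`confidenceThreshold` is declared here but not used in the later steps. One possible use, sketched in plain Dart, is to drop weak detections before drawing them. The sketch assumes each result is a map with a `box` list of the form `[x1, y1, x2, y2, confidence]` and a `tag` string, which is how the drawing code in this article reads the results; `filterByConfidence` is a hypothetical helper.

```dart
/// Keeps only detections whose confidence (box[4]) reaches [threshold].
List<Map<String, dynamic>> filterByConfidence(
  List<Map<String, dynamic>> results,
  double threshold,
) {
  return results
      .where((result) => (result['box'][4] as num) >= threshold)
      .toList();
}

void main() {
  final results = <Map<String, dynamic>>[
    {'box': [10.0, 20.0, 110.0, 220.0, 0.91], 'tag': 'person'},
    {'box': [200.0, 40.0, 260.0, 90.0, 0.32], 'tag': 'cup'},
  ];
  final kept = filterByConfidence(results, 0.5);
  print(kept.map((r) => r['tag']).toList()); // [person]
}
```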

(4) Initialize the model and the camera:

    @override
    void initState() {
      super.initState();
      init();
    }

    Future<void> init() async {
      camerass = await availableCameras();
      vision = FlutterVision();
      // Use the first camera reported by the device.
      controller = CameraController(camerass[0], ResolutionPreset.high);
      await controller.initialize();
      await loadYoloModel();
      // The widget may have been removed while the camera and model were loading.
      if (!mounted) return;
      setState(() {
        isLoaded = true;
        isDetecting = false;
        yoloResults = [];
      });
    }

    @override
    void dispose() {
      // Release the camera and the model first; super.dispose() must come last.
      controller.dispose();
      vision.closeYoloModel();
      super.dispose();
    }

(5) Build a simple UI and determine the size of the video stream:

    @override
    Widget build(BuildContext context) {
      final Size size = MediaQuery.of(context).size;
      if (!isLoaded) {
        return const Scaffold(
          body: Center(
            child: Text("Model not loaded, waiting for it"),
          ),
        );
      }
      return Scaffold(
        body: Stack(
          fit: StackFit.expand,
          children: [
            AspectRatio(
              aspectRatio: controller.value.aspectRatio,
              child: CameraPreview(
                controller,
              ),
            ),
            ...displayBoxesAroundRecognizedObjects(size),
            Positioned(
              bottom: 75,
              width: MediaQuery.of(context).size.width,
              child: Container(
                height: 80,
                width: 80,
                decoration: BoxDecoration(
                  shape: BoxShape.circle,
                  border: Border.all(
                      width: 5, color: Colors.white, style: BorderStyle.solid),
                ),
                child: isDetecting
                    ? IconButton(
                        onPressed: () async {
                          stopDetection();
                        },
                        icon: const Icon(
                          Icons.stop,
                          color: Colors.red,
                        ),
                        iconSize: 50,
                      )
                    : IconButton(
                        onPressed: () async {
                          await startDetection();
                        },
                        icon: const Icon(
                          Icons.play_arrow,
                          color: Colors.white,
                        ),
                        iconSize: 50,
                      ),
              ),
            ),
          ],
        ),
      );
    }

(6) Load the model:

    Future<void> loadYoloModel() async {
      // The paths must match the asset entries declared in pubspec.yaml.
      await vision.loadYoloModel(
          labels: 'assets/labels.txt',
          modelPath: 'assets/yolov8n.tflite',
          modelVersion: "yolov8",
          numThreads: 1,
          useGpu: true);
      setState(() {
        isLoaded = true;
      });
    }

    // Runs real-time object detection on a single camera frame via yoloOnFrame.
    Future<void> yoloOnFrame(CameraImage cameraImage) async {
      final result = await vision.yoloOnFrame(
          bytesList: cameraImage.planes.map((plane) => plane.bytes).toList(),
          imageHeight: cameraImage.height,
          imageWidth: cameraImage.width,
          iouThreshold: 0.4,
          confThreshold: 0.4,
          classThreshold: 0.5);
      // Only redraw when something was detected; otherwise the previous boxes stay.
      if (result.isNotEmpty) {
        setState(() {
          yoloResults = result;
        });
      }
    }
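`iouThreshold` refers to intersection over union (IoU), the overlap measure that detectors commonly use in non-maximum suppression (NMS) to discard duplicate boxes for the same object. How flutter_vision applies it internally is not shown in this article, so the following is only a generic, class-agnostic sketch of IoU and greedy NMS in pure Dart. It assumes boxes in the `[x1, y1, x2, y2, confidence]` layout, and the function names are illustrative.

```dart
import 'dart:math';

/// Intersection over union of two boxes given as [x1, y1, x2, y2, ...].
double iou(List<double> a, List<double> b) {
  final overlapWidth = max(0.0, min(a[2], b[2]) - max(a[0], b[0]));
  final overlapHeight = max(0.0, min(a[3], b[3]) - max(a[1], b[1]));
  final intersection = overlapWidth * overlapHeight;
  final areaA = (a[2] - a[0]) * (a[3] - a[1]);
  final areaB = (b[2] - b[0]) * (b[3] - b[1]);
  final union = areaA + areaB - intersection;
  return union <= 0 ? 0.0 : intersection / union;
}

/// Greedy NMS: visit boxes from highest to lowest confidence (index 4) and
/// keep a box only if its IoU with every already-kept box is at most
/// [iouThreshold].
List<List<double>> nonMaxSuppression(
  List<List<double>> boxes,
  double iouThreshold,
) {
  final sorted = [...boxes]..sort((a, b) => b[4].compareTo(a[4]));
  final kept = <List<double>>[];
  for (final candidate in sorted) {
    final duplicate = kept.any((k) => iou(k, candidate) > iouThreshold);
    if (!duplicate) kept.add(candidate);
  }
  return kept;
}

void main() {
  final boxes = [
    [0.0, 0.0, 100.0, 100.0, 0.9],
    [10.0, 10.0, 110.0, 110.0, 0.6], // heavy overlap with the first box
    [200.0, 200.0, 300.0, 300.0, 0.8],
  ];
  final kept = nonMaxSuppression(boxes, 0.4);
  print(kept.length); // 2
}
```

With a threshold of 0.4, the second box (IoU of about 0.68 with the first) is treated as a duplicate; a higher threshold would let more overlapping boxes through.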

(7) Functions to start the video stream and to start or stop detection:

    Future<void> startDetection() async {
      setState(() {
        isDetecting = true;
      });
      // The image stream keeps running once started, so start it only once.
      if (controller.value.isStreamingImages) {
        return;
      }
      await controller.startImageStream((image) async {
        // Frames are ignored while detection is paused.
        if (isDetecting) {
          cameraImage = image;
          yoloOnFrame(image);
        }
      });
    }

    Future<void> stopDetection() async {
      setState(() {
        isDetecting = false;
        yoloResults.clear();
      });
    }
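The image stream callback can fire faster than the model finishes a frame, in which case calls to `yoloOnFrame` may overlap. A common way to avoid a backlog is to drop frames while one is still being processed. The following pure-Dart sketch shows that pattern with a hypothetical `FrameGate` class; it is not part of camera or flutter_vision, and `fakeInference` merely stands in for the model call.

```dart
import 'dart:async';

/// Lets at most one asynchronous job run at a time and drops the rest.
class FrameGate {
  bool _busy = false;

  /// Runs [job] unless a previous job is still in flight.
  /// Completes with true if the job ran, false if it was dropped.
  Future<bool> run(Future<void> Function() job) async {
    if (_busy) return false;
    _busy = true;
    try {
      await job();
      return true;
    } finally {
      _busy = false;
    }
  }
}

Future<void> main() async {
  final gate = FrameGate();
  var processed = 0;

  // Stand-in for model inference on one frame.
  Future<void> fakeInference() async {
    await Future<void>.delayed(const Duration(milliseconds: 50));
    processed++;
  }

  // Three "frames" arrive back to back; only the first is processed.
  final outcomes = await Future.wait([
    gate.run(fakeInference),
    gate.run(fakeInference),
    gate.run(fakeInference),
  ]);
  print(outcomes); // [true, false, false]
  print(processed); // 1
}
```

In the stream callback, this would amount to something like `gate.run(() => yoloOnFrame(image))`.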

(8) Draw bounding boxes around the detected objects:

    List<Widget> displayBoxesAroundRecognizedObjects(Size screen) {
      if (yoloResults.isEmpty) return [];
      // The frame height is paired with the screen width and the frame width
      // with the screen height.
      double factorX = screen.width / (cameraImage?.height ?? 1);
      double factorY = screen.height / (cameraImage?.width ?? 1);
      Color colorPick = const Color.fromARGB(255, 50, 233, 30);
      return yoloResults.map((result) {
        // result["box"] holds [x1, y1, x2, y2, confidence] in frame pixels.
        double objectX = result["box"][0] * factorX;
        double objectY = result["box"][1] * factorY;
        double objectWidth = (result["box"][2] - result["box"][0]) * factorX;
        double objectHeight = (result["box"][3] - result["box"][1]) * factorY;
        // Optional hook: announce result['tag'] with text-to-speech here.
        return Positioned(
          left: objectX,
          top: objectY,
          width: objectWidth,
          height: objectHeight,
          child: Container(
            decoration: BoxDecoration(
              borderRadius: const BorderRadius.all(Radius.circular(10.0)),
              border: Border.all(color: Colors.pink, width: 2.0),
            ),
            child: Text(
              // Label and confidence as a percentage, for example "person 87%".
              "${result['tag']} ${(result['box'][4] * 100).toStringAsFixed(0)}%",
              style: TextStyle(
                background: Paint()..color = colorPick,
                color: const Color.fromARGB(255, 115, 0, 255),
                fontSize: 18.0,
              ),
            ),
          ),
        );
      }).toList();
    }
    } // closes _YoloVideoState
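To make the scaling arithmetic concrete, the sketch below applies the same factors to a single box in plain Dart. The frame size, the screen size, and the helper name `scaleBox` are made-up example values, not measurements from the app.

```dart
/// Maps a box from camera-frame pixels to screen coordinates and returns
/// [left, top, width, height]. The frame height is paired with the screen
/// width (and the frame width with the screen height), mirroring the factors
/// used in displayBoxesAroundRecognizedObjects.
List<double> scaleBox({
  required List<double> box, // [x1, y1, x2, y2, confidence]
  required double frameWidth,
  required double frameHeight,
  required double screenWidth,
  required double screenHeight,
}) {
  final factorX = screenWidth / frameHeight;
  final factorY = screenHeight / frameWidth;
  return [
    box[0] * factorX,
    box[1] * factorY,
    (box[2] - box[0]) * factorX,
    (box[3] - box[1]) * factorY,
  ];
}

void main() {
  // A 1280 x 720 frame drawn on a 360 x 640 screen: both factors are 0.5.
  final rect = scaleBox(
    box: [120.0, 320.0, 360.0, 640.0, 0.87],
    frameWidth: 1280,
    frameHeight: 720,
    screenWidth: 360,
    screenHeight: 640,
  );
  print(rect); // [60.0, 160.0, 120.0, 160.0]
}
```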

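Finally, in main.dart, set the YoloVideo widget as the app's home so that the camera view and real-time object detection are available as soon as the app launches, as shown below: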

    void main() {
      WidgetsFlutterBinding.ensureInitialized();
      runApp(
        const MaterialApp(
          home: YoloVideo(),
        ),
      );
    }

Screenshot of my YOLOv8 Flutter app