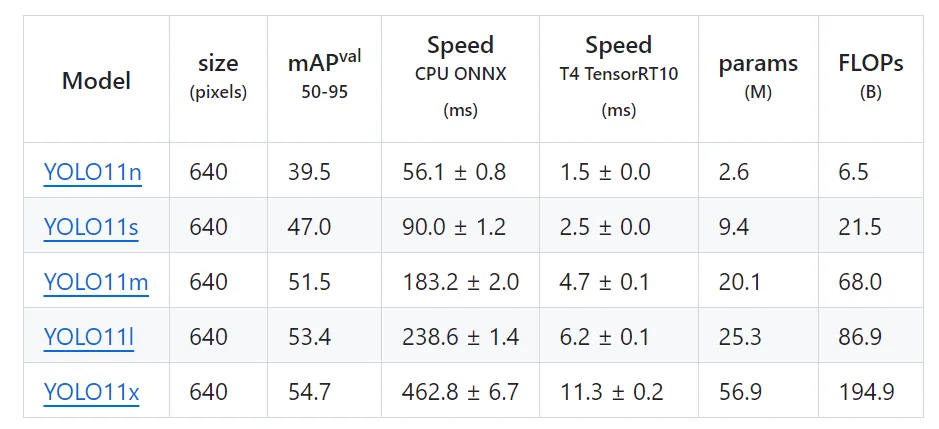

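Thanks to Ultralytics, running object detection models in Python has become very easy. But what about running YOLO models in C++? This article shows you how to do it using only the OpenCV library. It covers running a YOLOv11 model on the CPU, not the GPU. Running a model on the GPU requires installing CUDA, cuDNN and so on, steps that can be confusing and time-consuming. I will cover running YOLO models with CUDA support in a future article.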

For now, the only thing you need to install is the OpenCV library. If you haven't installed it yet, you can follow this video: https://www.youtube.com/watch?v=CnXUTG9XYGI&t=159s.

1. Clone the ultralytics/ultralytics repository to export the model to ONNX

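- Create a new folder and name it whatever you like. Open a terminal and clone the Ultralytics repository into this folder. We will use it to export the model to ONNX format. The export script imports the `ultralytics` Python package, so make sure that package is installed as well (for example with `pip install ultralytics`).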

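```shell
git clone https://github.com/ultralytics/ultralytics
```

- I will use the yolo11s.pt model, but you can use a custom YOLOv11 model and the process does not change. You can download the pretrained models from https://github.com/ultralytics/ultralytics, or use your own model.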
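The export steps are the same for a pretrained or a custom model. However, the shape of the exported model's output depends on the number of classes, and the C++ code in step 4 has to parse that output.

Below is a rough sketch of how to compute the expected shape. It assumes the standard YOLO11 detection head, which has three output scales at strides 8, 16 and 32 and one prediction per grid cell. It also assumes a square input whose size is a multiple of 32. `yolo11_output_shape` is a hypothetical helper, not part of any library.

```cpp
#include <array>
#include <cassert>
#include <initializer_list>

// Expected output shape [1, 4 + num_classes, num_predictions] of a YOLO11
// detection export, assuming a square input of imgsz x imgsz pixels and
// detection heads at strides 8, 16 and 32 (one prediction per grid cell).
std::array<int, 3> yolo11_output_shape(int imgsz, int num_classes)
{
    assert(imgsz % 32 == 0 && num_classes > 0);
    int predictions = 0;
    for (int stride : {8, 16, 32})
    {
        const int cells = imgsz / stride; // grid is cells x cells at this stride
        predictions += cells * cells;
    }
    // 4 box values (cx, cy, w, h) plus one score per class
    return {1, 4 + num_classes, predictions};
}
```

For the 80-class COCO model at 640×640, this gives [1, 84, 8400], because 80² + 40² + 20² = 8400 predictions. Each prediction carries 4 box values plus 80 class scores. A YOLOv5 export, by comparison, is [1, 25200, 85]. YOLOv5 predicts 3 anchors per grid cell (3 × 8400 = 25200) and adds an objectness score to each (4 + 1 + 80 = 85).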

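Now let's export the model to ONNX with the code below. Several parameters can be set during conversion. The second snippet lists the commonly used `model.export` parameters with explanations.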

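```python
from ultralytics import YOLO

# Load a model; the path points to the YOLO model's .pt file
model = YOLO("/path/to/best.pt")

# Export the model to ONNX format
model.export(format="onnx")
```

```python
model.export(
    format="onnx",     # export format: ONNX
    imgsz=(640, 640),  # input image size
    keras=False,       # do not export to Keras (only relevant for TensorFlow SavedModel export)
    optimize=False,    # mobile-device optimization; only applies to TorchScript export
    half=False,        # no FP16 (half-precision) export
    int8=False,        # no INT8 quantization
    dynamic=False,     # fixed input size (the C++ code assumes 640x640)
    simplify=True,     # simplify the ONNX graph
    opset=None,        # use the latest supported opset version
    workspace=4.0,     # max workspace size in GiB for TensorRT optimization (TensorRT only)
    nms=False,         # do not add NMS (non-maximum suppression); the C++ code applies NMS itself
    batch=1,           # batch size
    device="cpu",      # export device: "cpu" for CPU, "0" for the first GPU
)
```

2. Create a TXT file to store the YOLO model labels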

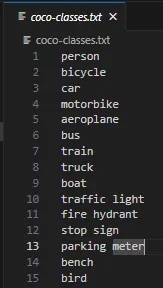

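This step is very simple: you only need to create a txt file that stores the labels. If you are using a pretrained YOLO model like me, you can download the txt file directly from this link: https://github.com/amikelive/coco-labels/blob/master/coco-labels-2014_2017.txt.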

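If you have a custom model, create a new txt file and write your labels in it. Put one label per line, in the same order as the class indices used during training. You can name the file whatever you like.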
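The program reads this file line by line and looks labels up by class ID, so a little defensive parsing helps. Below is a minimal sketch that reads one label per line from any input stream. It drops the stray `\r` that a file edited on Windows leaves behind when read on Linux or macOS, and it skips blank lines such as a trailing newline at the end of the file. The name `read_labels` is hypothetical and is not part of the original code.

```cpp
#include <istream>
#include <sstream> // std::istringstream is handy for testing with in-memory text
#include <string>
#include <vector>

// Read one class label per line from a stream (file or string).
std::vector<std::string> read_labels(std::istream &in)
{
    std::vector<std::string> labels;
    std::string line;
    while (std::getline(in, line))
    {
        // Remove the '\r' left over from Windows-style CRLF line endings
        if (!line.empty() && line.back() == '\r')
            line.pop_back();
        // Skip empty lines so they do not shift the class indices
        if (!line.empty())
            labels.push_back(line);
    }
    return labels;
}
```

To read from a file, pass a `std::ifstream`, for example `std::ifstream ifs("coco-classes.txt"); auto labels = read_labels(ifs);`. The number of labels should match the number of classes the model was trained on, which is 80 for the pretrained COCO models.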

3. Create the CMakeLists.txt file

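Now let's create a CMakeLists.txt file, which CMake needs in order to compile the C++ program. If you installed OpenCV by following the link I shared, you should already have CMake installed.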

```cmake
cmake_minimum_required(VERSION 3.10)

project(cpp-yolo-detection) # your folder name here

# Find OpenCV
set(OpenCV_DIR C:/Libraries/opencv/build) # path to opencv
find_package(OpenCV REQUIRED)

add_executable(object-detection object-detection.cpp) # your file name

# Link OpenCV libraries
target_link_libraries(object-detection ${OpenCV_LIBS})
```

4. Code

Finally, the last step. I used the code from this repository, but I modified some parts and added comments to help you understand it better.
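The program below parses the raw network output by hand, so the layout of that output matters. For a YOLO11 detection export, the output is assumed to be a single float tensor shaped [1, 4 + num_classes, num_predictions] and stored channel-major. The first four rows hold box centers and sizes in network-input pixels, and the remaining rows hold one score per class. Unlike YOLOv5, there is no separate objectness score.

The following standard-library sketch decodes such a buffer to illustrate the layout. `Candidate` and `decode_yolo11` are hypothetical names, and the function is not part of the original program.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Candidate
{
    int class_id;
    float score;
    float left, top, width, height; // box in (padded) original-image pixels
};

// Decode a YOLO11-style output stored channel-major as [num_channels, num_anchors],
// where num_channels = 4 + num_classes. x_factor / y_factor map network-input
// pixels back to the padded original image (padded size / input size).
std::vector<Candidate> decode_yolo11(const std::vector<float> &out,
                                     int num_channels, int num_anchors,
                                     float score_threshold,
                                     float x_factor, float y_factor)
{
    assert(num_channels > 4 &&
           out.size() == static_cast<std::size_t>(num_channels) * num_anchors);
    const int num_classes = num_channels - 4;
    // Value of channel c for prediction a
    auto at = [&](int c, int a) { return out[static_cast<std::size_t>(c) * num_anchors + a]; };

    std::vector<Candidate> result;
    for (int a = 0; a < num_anchors; ++a)
    {
        // No objectness score: the best class score is the confidence
        int best_class = 0;
        float best_score = at(4, a);
        for (int k = 1; k < num_classes; ++k)
        {
            if (at(4 + k, a) > best_score)
            {
                best_score = at(4 + k, a);
                best_class = k;
            }
        }
        if (best_score <= score_threshold)
            continue;
        // Convert center/size to a top-left corner and scale back
        const float cx = at(0, a), cy = at(1, a), w = at(2, a), h = at(3, a);
        result.push_back({best_class, best_score,
                          (cx - 0.5f * w) * x_factor, (cy - 0.5f * h) * y_factor,
                          w * x_factor, h * y_factor});
    }
    return result;
}
```

With OpenCV, this should be the same buffer that `outputs[0].data` points to after `net.forward`, with `num_channels = outputs[0].size[1]` and `num_anchors = outputs[0].size[2]`. Indexing channel-major directly avoids a transpose. The program below instead transposes the matrix so that each prediction becomes one contiguous row.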

```cpp
#include <algorithm>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>

#include <opencv2/opencv.hpp>

// Load labels from the coco-classes.txt file (one label per line)
std::vector<std::string> load_class_list()
{
    std::vector<std::string> class_list;
    // change this txt file to your txt file that contains labels
    std::ifstream ifs("C:/Users/sirom/Desktop/cpp-ultralytics/coco-classes.txt");
    std::string line;
    while (std::getline(ifs, line))
    {
        class_list.push_back(line);
    }
    return class_list;
}

// Model
void load_net(cv::dnn::Net &net)
{
    // change this path to your exported .onnx model
    auto result = cv::dnn::readNet("C:/Users/sirom/Desktop/cpp-ultralytics/yolo11s.onnx");
    std::cout << "Running on CPU\n";
    result.setPreferableBackend(cv::dnn::DNN_BACKEND_OPENCV);
    result.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);
    net = result;
}

// You can change these parameters to obtain better results
const float INPUT_WIDTH = 640.0;
const float INPUT_HEIGHT = 640.0;
const float SCORE_THRESHOLD = 0.5;
const float NMS_THRESHOLD = 0.5;

struct Detection
{
    int class_id;
    float confidence;
    cv::Rect box;
};

// Pad the image to a square (original in the top-left corner, black elsewhere)
// so that resizing it to 640x640 keeps the aspect ratio
cv::Mat format_to_square(const cv::Mat &source)
{
    int col = source.cols;
    int row = source.rows;
    int max_side = std::max(col, row);
    cv::Mat result = cv::Mat::zeros(max_side, max_side, CV_8UC3);
    source.copyTo(result(cv::Rect(0, 0, col, row)));
    return result;
}

// Detection function
void detect(cv::Mat &image, cv::dnn::Net &net, std::vector<Detection> &output, const std::vector<std::string> &className)
{
    cv::Mat blob;
    // Format the input image to fit the model input requirements
    auto input_image = format_to_square(image);
    // Convert the image into a blob (pixels scaled to [0, 1], BGR -> RGB) and set it as input
    cv::dnn::blobFromImage(input_image, blob, 1. / 255., cv::Size(INPUT_WIDTH, INPUT_HEIGHT), cv::Scalar(), true, false);
    net.setInput(blob);
    std::vector<cv::Mat> outputs;
    net.forward(outputs, net.getUnconnectedOutLayersNames());

    // Scaling factors to map the bounding boxes back to original image size
    float x_factor = input_image.cols / INPUT_WIDTH;
    float y_factor = input_image.rows / INPUT_HEIGHT;

    // YOLO11 outputs [1, 4 + num_classes, 8400], e.g. [1, 84, 8400] for COCO.
    // Reshape to 2D and transpose so that each row is one prediction:
    // [cx, cy, w, h, score_class0, score_class1, ...]
    const int dimensions = outputs[0].size[1]; // 4 + number of classes
    const int rows = outputs[0].size[2];       // number of predictions
    cv::Mat predictions = outputs[0].reshape(1, dimensions);
    cv::transpose(predictions, predictions);
    float *data = (float *)predictions.data;
    const int num_classes = dimensions - 4;

    std::vector<int> class_ids;     // Stores class IDs of detections
    std::vector<float> confidences; // Stores confidence scores of detections
    std::vector<cv::Rect> boxes;    // Stores bounding boxes

    // Loop through all the rows to process predictions
    for (int i = 0; i < rows; ++i)
    {
        // YOLO11 has no objectness score: class scores start at index 4,
        // and the highest class score is used as the confidence
        float *classes_scores = data + 4;
        cv::Mat scores(1, num_classes, CV_32FC1, classes_scores);
        cv::Point class_id;
        double max_class_score;
        cv::minMaxLoc(scores, nullptr, &max_class_score, nullptr, &class_id);

        // If the class score is above the threshold, store the detection
        if (max_class_score > SCORE_THRESHOLD)
        {
            confidences.push_back((float)max_class_score);
            class_ids.push_back(class_id.x);

            // Convert (center x, center y, width, height) to a top-left-based cv::Rect
            float x = data[0];
            float y = data[1];
            float w = data[2];
            float h = data[3];
            int left = int((x - 0.5 * w) * x_factor);
            int top = int((y - 0.5 * h) * y_factor);
            int width = int(w * x_factor);
            int height = int(h * y_factor);
            boxes.push_back(cv::Rect(left, top, width, height));
        }
        // Move to the next prediction row
        data += dimensions;
    }

    // Apply Non-Maximum Suppression
    std::vector<int> nms_result;
    cv::dnn::NMSBoxes(boxes, confidences, SCORE_THRESHOLD, NMS_THRESHOLD, nms_result);

    // Draw the NMS filtered boxes and push results to output
    for (size_t i = 0; i < nms_result.size(); i++)
    {
        int idx = nms_result[i];
        // Only push the filtered detections
        Detection result;
        result.class_id = class_ids[idx];
        result.confidence = confidences[idx];
        result.box = boxes[idx];
        output.push_back(result);

        // Draw the final NMS bounding box and label
        cv::rectangle(image, boxes[idx], cv::Scalar(0, 255, 0), 3);
        // Fall back to the numeric class ID if the labels file has too few lines
        std::string label = class_ids[idx] < (int)className.size() ? className[class_ids[idx]] : std::to_string(class_ids[idx]);
        cv::putText(image, label, cv::Point(boxes[idx].x, boxes[idx].y - 5), cv::FONT_HERSHEY_SIMPLEX, 2, cv::Scalar(255, 255, 255), 2);
    }
}

int main(int argc, char **argv)
{
    // Load class list
    std::vector<std::string> class_list = load_class_list();

    // Load input image
    std::string image_path = cv::samples::findFile("C:/Users/sirom/Desktop/cpp-ultralytics/test2.jpg");
    cv::Mat frame = cv::imread(image_path, cv::IMREAD_COLOR);
    if (frame.empty())
    {
        std::cerr << "Could not read image: " << image_path << "\n";
        return 1;
    }

    // Load the model
    cv::dnn::Net net;
    load_net(net);

    // Vector to store detection results
    std::vector<Detection> output;

    // Run detection on the input image
    detect(frame, net, output, class_list);

    // Save the result to a file
    cv::imwrite("C:/Users/sirom/Desktop/cpp-ultralytics/result.jpg", frame);

    while (true)
    {
        // Display image
        cv::imshow("image", frame);
        // Exit the loop if any key is pressed
        if (cv::waitKey(1) != -1)
        {
            std::cout << "finished by user\n";
            break;
        }
    }

    std::cout << "Processing complete. Image saved\n";
    return 0;
}
```

5. Compile and run the code

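```shell
mkdir build
cd build
cmake ..
cmake --build .
.\Debug\object-detection.exe
```

With the Visual Studio generator that CMake typically picks on Windows, `cmake --build .` builds the Debug configuration, which is why the executable ends up in the `Debug` folder. Running `cmake --build . --config Release` produces an optimized build in `Release` instead.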
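The `cv::dnn::NMSBoxes` call in step 4 keeps only the highest-scoring box among heavily overlapping candidates. To make that step less of a black box, here is a minimal standard-library sketch of greedy non-maximum suppression. It illustrates the idea only and is not OpenCV's implementation, so details such as tie-breaking or threshold comparisons may differ. `Box`, `iou` and `greedy_nms` are hypothetical names. Like the program in step 4, the sketch is class-agnostic: a box of one class can suppress an overlapping box of another class.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

struct Box { float x, y, w, h; }; // top-left corner plus size, like cv::Rect

// Intersection-over-union of two axis-aligned boxes
float iou(const Box &a, const Box &b)
{
    const float x1 = std::max(a.x, b.x), y1 = std::max(a.y, b.y);
    const float x2 = std::min(a.x + a.w, b.x + b.w), y2 = std::min(a.y + a.h, b.y + b.h);
    const float inter = std::max(0.0f, x2 - x1) * std::max(0.0f, y2 - y1);
    const float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy NMS: visit boxes from highest to lowest score and keep a box unless it
// overlaps an already-kept box by more than iou_threshold. Returns kept indices.
std::vector<int> greedy_nms(const std::vector<Box> &boxes, const std::vector<float> &scores,
                            float score_threshold, float iou_threshold)
{
    assert(boxes.size() == scores.size());
    std::vector<int> order(boxes.size());
    std::iota(order.begin(), order.end(), 0);
    std::stable_sort(order.begin(), order.end(),
                     [&](int i, int j) { return scores[i] > scores[j]; });

    std::vector<int> keep;
    for (int i : order)
    {
        if (scores[i] <= score_threshold)
            break; // all remaining scores are lower
        bool suppressed = false;
        for (int k : keep)
        {
            if (iou(boxes[i], boxes[k]) > iou_threshold)
            {
                suppressed = true;
                break;
            }
        }
        if (!suppressed)
            keep.push_back(i);
    }
    return keep;
}
```

Raising `NMS_THRESHOLD` in the program lets more overlapping boxes survive. Lowering it merges them more aggressively.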