背 景

公司A面临着监控其IT基础设施的需求,包括Windows和Linux平台上的端口、进程和内网域名状态。随着业务的增长,维护系统的稳定性和安全性变得尤为重要。传统的监控方法可能实时性和灵活性不够好,因此采用Prometheus监控工具,以便更高效地获取系统状态和性能指标。

目 标

- 实现跨平台监控:选择合适的监控工具,能够在Windows和Linux上均可安装和运行。

- 实时监控端口和进程:对关键服务的端口和进程进行实时监控,确保服务可用性,并及时告警。

- 内网域名状态检查:定期检查内网域名的解析和可达性,确保内部服务的正常运行。

- SSL证书监控:自动检查SSL证书的有效性和到期时间,确保证书时间健康。

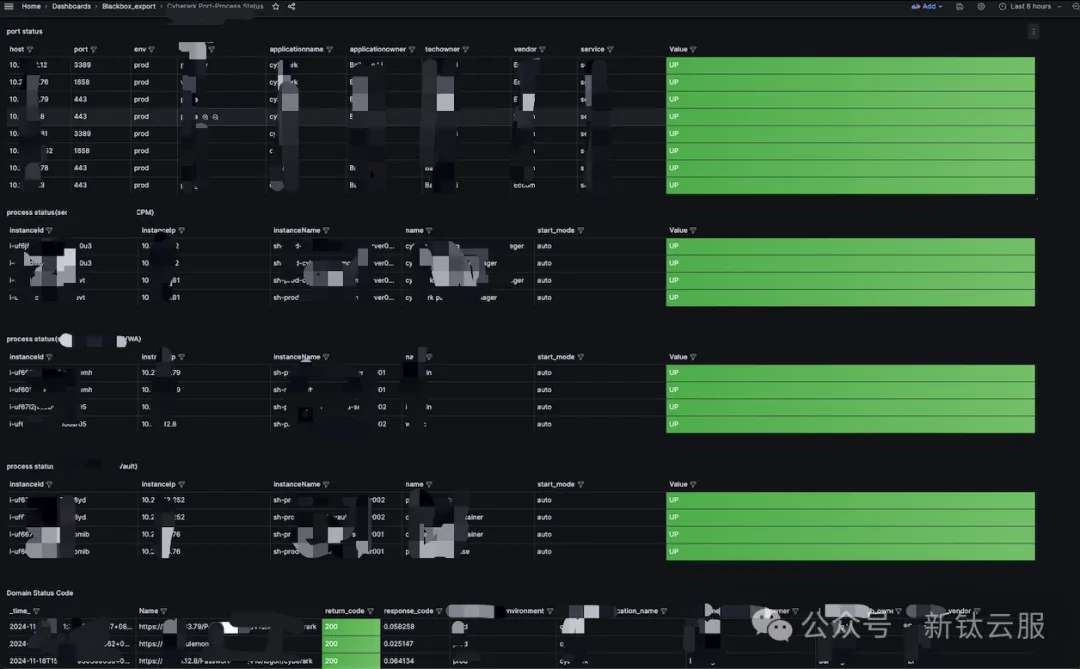

- 数据可视化与告警:通过可视化工具(如Grafana)展示监控数据,并配置告警机制,以便及时通知监控与开发相关技术人员。

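Workflow

- Tool selection and deployment

Prometheus is the monitoring system, with exporters (such as node_exporter, process_exporter, and a custom port collector) covering the different monitoring needs.

Prometheus and the relevant exporters are deployed on the Windows and Linux servers.

- Port and process monitoring

Use node_exporter to monitor system port and process status.

Configure Prometheus to scrape the metrics that node_exporter exposes so that specific ports and processes can be monitored.

- Alerting

Use exporters to check process and port status, and configure HTTP probes to confirm that services are available.

Connect Prometheus to Grafana as a data source and build dashboards to visualize the monitoring data.

- Testing and tuning

After rollout, test that the monitoring system captures each metric accurately.

Tune the monitoring strategy and alert rules based on feedback so the system keeps running efficiently.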

Prometheus process and port configuration

① Dockerfile build configuration

    cat Dockerfile

    FROM python
    ENV LANG=C.UTF-8
    ENV TZ=Asia/Shanghai
    # Install dependencies from the Tsinghua PyPI mirror.
    # asyncio ships with the Python 3 standard library, so it is not installed from PyPI.
    RUN pip install --upgrade -i https://pypi.tuna.tsinghua.edu.cn/simple pyyaml requests prometheus_client Flask
    COPY host_port_monitor.py /opt/
    # Placeholder command; the Deployment overrides it to start the collector.
    CMD ["sleep","999"]

Build the image and push it to the registry:

    docker build -t harbor.export.cn/ops/service_status_monitor_export_port:v2 .
    docker push harbor.export.cn/ops/service_status_monitor_export_port:v2

② Port collector with normalized port labels

    cat host_port_monitor.py

    # -*- coding:utf-8 -*-
    import asyncio
    import os
    import re
    import socket

    import prometheus_client
    import yaml
    from flask import Flask, Response
    from prometheus_client import Gauge
    from prometheus_client.core import CollectorRegistry

    app = Flask(__name__)

    # Config keys that drive the probe itself; every other key becomes a label.
    RESERVED_KEYS = ("host", "port", "requirement", "protocol")


    def get_config_dic():
        """Load the YAML config file and return it as a dictionary."""
        pro_path = os.path.dirname(os.path.realpath(__file__))
        yaml_path = os.path.join(pro_path, "host_port_conf.yaml")
        with open(yaml_path, "r", encoding="utf-8") as f:
            return yaml.safe_load(f)


    class UDPProbe(asyncio.DatagramProtocol):
        """Send one test datagram; close the transport if a reply arrives."""

        def connection_made(self, transport):
            self.transport = transport
            self.transport.sendto(b"test")

        def datagram_received(self, data, addr):
            self.transport.close()

        def error_received(self, exc):
            pass

        def connection_lost(self, exc):
            pass


    async def explore_udp_port(ip, port):
        """Best-effort UDP probe.

        UDP is connectionless, so 1 only means the datagram endpoint was
        created and the test datagram was sent without an immediate error.
        """
        try:
            loop = asyncio.get_running_loop()
            transport, _ = await loop.create_datagram_endpoint(
                UDPProbe, remote_addr=(ip, int(port))
            )
            transport.close()
            return 1
        except Exception:
            return 0


    def explore_tcp_port(ip, port):
        """Return 1 if a TCP connection to ip:port succeeds within 0.5 s, else 0."""
        try:
            # The timeout must be set before connecting; the socket is closed on exit.
            with socket.create_connection((ip, int(port)), timeout=0.5):
                return 1
        except (OSError, ValueError):
            return 0


    def is_valid_label_name(label_name):
        """Check whether the label name follows Prometheus naming rules."""
        return re.match(r"^[a-zA-Z_][a-zA-Z0-9_]*$", label_name) is not None


    def format_label_name(label_name):
        """Replace characters that are not allowed in a label name with underscores."""
        return re.sub(r"[^a-zA-Z0-9_]", "_", label_name)


    def check_port():
        """Probe every configured host/port and apply each service's requirement."""
        sdic = get_config_dic()
        result_list = []
        for sertype, config in sdic.items():
            iplist = config.get("host")
            portlist = config.get("port")
            requirement = config.get("requirement")
            protocol_list = config.get("protocol", ["tcp"])
            # The config gives a single protocol as a string; iterating a string
            # would yield single characters, so wrap it in a list.
            if isinstance(protocol_list, str):
                protocol_list = [protocol_list]

            # Extra keys become labels; keys that are not valid label names are dropped.
            dynamic_labels = {k: v for k, v in config.items() if k not in RESERVED_KEYS}
            valid_labels = {format_label_name(k): v for k, v in dynamic_labels.items() if is_valid_label_name(k)}

            service_results = []
            for ip in iplist:
                for port in portlist:
                    for protocol in protocol_list:
                        if protocol == "udp":
                            status = asyncio.run(explore_udp_port(ip, port))
                        else:
                            # "tcp" and any unrecognized protocol use the TCP probe.
                            status = explore_tcp_port(ip, port)
                        result_dic = {"sertype": sertype, "host": ip, "port": str(port), "status": status}
                        result_dic.update(valid_labels)
                        service_results.append(result_dic)

            # "all": every probe must succeed; "any": one success is enough.
            # Any other value (such as "check") keeps each probe's own status.
            statuses = [r["status"] for r in service_results]
            if requirement == "all":
                combined = int(all(statuses))
            elif requirement == "any":
                combined = int(any(statuses))
            else:
                combined = None
            if combined is not None:
                for r in service_results:
                    r["status"] = combined
            result_list.extend(service_results)
        return result_list


    @app.route("/metrics")
    def api_response():
        """Generate Prometheus metrics from the port check results."""
        checkport = check_port()
        registry = CollectorRegistry(auto_describe=False)
        base_labels = ["sertype", "host", "port"]

        # Collect every key seen across the results. "status" is among them, so it
        # is exported as a label in addition to being the sample value.
        dynamic_labels = set()
        for datas in checkport:
            dynamic_labels.update(datas.keys())
        dynamic_labels = sorted(dynamic_labels.difference(base_labels))

        mux_status = Gauge("server_port_up", "Api response stats is:", base_labels + dynamic_labels, registry=registry)
        for datas in checkport:
            label_values = [datas.get(label, "unknown") for label in base_labels + dynamic_labels]
            mux_status.labels(*label_values).set(datas.get("status"))
        return Response(prometheus_client.generate_latest(registry), mimetype="text/plain")


    if __name__ == "__main__":
        app.run(host="0.0.0.0", port=8080)

Ports, labels, and protocols are all configured in YAML, which makes the resulting alerts easier to read.
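The `requirement` key in that YAML decides how the per-probe results of one service are combined. The sketch below illustrates those semantics in isolation. It assumes, as the comments in the configuration suggest, that "all" needs every probe to succeed, "any" needs at least one, and any other value such as "check" leaves each result untouched. `combine_statuses` is a hypothetical helper for illustration, not part of the collector.

```python
from typing import List


def combine_statuses(statuses: List[int], requirement: str) -> List[int]:
    """Apply a service-level requirement to per-probe statuses (1 = up, 0 = down)."""
    if requirement == "all":
        # Every probe must succeed for the service to count as up.
        return [int(all(statuses))] * len(statuses)
    if requirement == "any":
        # A single successful probe marks the whole service as up.
        return [int(any(statuses))] * len(statuses)
    # Any other value (for example "check"): report each probe as measured.
    return list(statuses)


if __name__ == "__main__":
    for requirement in ("all", "any", "check"):
        print(requirement, combine_statuses([1, 0], requirement))
```

With "any", a service spread over two hosts stays green while one host is down, which suits active/standby pairs; "check" is the better fit when every host must be alerted on individually.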

    cat host_port_conf.yaml

    # Prometheus monitor server port config.
    pw:
      env: "prod"
      applicationowner: "zhangsan"
      applicationname: "zhangsan"
      vendor: "export"
      techowner: "zhangsan"
      service: "pass"
      host:
        - "10.10.10.123"
        - "10.10.10.124"
      port:
        - 3389
      requirement: "check"  # report each probe as is: up if reachable, down if not
      protocol: "tcp"
    ack:
      env: "prod"
      applicationowner: "zhangsan"
      applicationname: "zhangsan"
      vendor: "export"
      techowner: "zhangsan"
      service: "good"
      host:
        - "10.10.10.128"
        - "10.10.10.129"
      port:
        - 1858
      requirement: "any"  # the service counts as up if any one probe succeeds
      protocol: "tcp"

Test the service locally and inspect the status it returns for the monitored ports:

    curl -s -k http://ip:8080/metrics

③ Kubernetes Deployment configuration

    cat prometheus-service-monitor-export-port-deploy.yaml

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: service-monitor-export-port
      namespace: monitor
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: prometheus-service-monitor-export-port
      template:
        metadata:
          labels:
            app: prometheus-service-monitor-export-port
        spec:
          containers:
            - name: service-monitor-export-port
              image: harbor.export.cn/ops/service_status_monitor_export_port:v2
              imagePullPolicy: Always
              command: ["sh","-c"]
              args: ["python /opt/host_port_monitor.py"]
              resources:
                requests:
                  cpu: 500m
                  memory: 500Mi
                limits:
                  cpu: 4000m
                  memory: 4000Mi
              ports:
                - containerPort: 8080
              volumeMounts:
                - name: host-port-conf-volume
                  mountPath: /opt/host_port_conf.yaml
                  subPath: host_port_conf.yaml
          volumes:
            - name: host-port-conf-volume
              configMap:
                name: host-port-conf
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: service-monitor-export-port
      namespace: monitor
    spec:
      selector:
        app: prometheus-service-monitor-export-port
      ports:
        - name: http
          port: 80
          targetPort: 8080
      type: ClusterIP

    cat prometheus-service-monitor-export-port-configmap.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: host-port-conf
      namespace: monitor
    data:
      host_port_conf.yaml: |
        # Prometheus monitor server port config.
        pw:
          env: "prod"
          applicationowner: "zhangsan"
          applicationname: "zhangsan"
          vendor: "export"
          techowner: "zhangsan"
          service: "pass"
          host:
            - "10.10.10.123"
            - "10.10.10.124"
          port:
            - 3389
          requirement: "check"  # report each probe as is: up if reachable, down if not
          protocol: "tcp"
        crm:
          env: "prod"
          applicationowner: "wangwu"
          applicationname: "wangwu"
          vendor: "export"
          techowner: "wangwu"
          service: "good"
          host:
            - "10.10.10.128"
            - "10.10.10.129"
          port:
            - 1858
          requirement: "any"  # the service counts as up if any one probe succeeds
          protocol: "tcp"

Deploy to the ACK cluster to run the service. The ConfigMap is applied first so that the pod can mount it as soon as it starts:

    kubectl apply -f prometheus-service-monitor-export-port-configmap.yaml
    kubectl apply -f prometheus-service-monitor-export-port-deploy.yaml

Configure the custom scrape job in Prometheus:

    - job_name: service-status-monitor-export-port
      scrape_interval: 30s
      scrape_timeout: 30s
      scheme: http
      metrics_path: /metrics
      static_configs:
        - targets: ['service-monitor-export-port.monitor.svc:80']
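The exporter's output can also be checked by hand before relying on the scrape job. The sketch below is a minimal, standard-library-only parser for the simple `name{labels} value` lines such an endpoint returns. It lists the `server_port_up` series whose value is not 1, which is the same condition the port alert query uses. The sample text and the helper name `find_down_series` are illustrative assumptions, and the regular expressions do not handle escaped quotes inside label values.

```python
import re
from typing import Dict, List

# One exposition line: metric name, label block, sample value.
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)\{(?P<labels>.*)\}\s+(?P<value>\S+)$'
)
# One label pair inside the braces, e.g. host="10.10.10.123".
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def find_down_series(text: str, metric: str = "server_port_up") -> List[Dict[str, str]]:
    """Return the label sets of all samples of `metric` whose value is not 1."""
    down = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = SAMPLE_RE.match(line)
        if match is None or match.group("name") != metric:
            continue
        if float(match.group("value")) != 1.0:
            down.append(dict(LABEL_RE.findall(match.group("labels"))))
    return down


if __name__ == "__main__":
    # Hypothetical /metrics output, shaped like what the collector produces.
    sample = """# HELP server_port_up Api response stats is:
# TYPE server_port_up gauge
server_port_up{sertype="pw",host="10.10.10.123",port="3389",env="prod"} 1.0
server_port_up{sertype="pw",host="10.10.10.124",port="3389",env="prod"} 0.0
"""
    for labels in find_down_series(sample):
        print(labels["host"], labels["port"], "is down")
```

For anything beyond a quick check, a real exposition-format parser is the safer choice, since label values may legally contain escaped characters.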

#端口PromQL语句

sum by( host, port, sertype, env, applicationname,applicationowner,techowner,vendor,service,status ) (server_port_up{instance="service-monitor-export-port.monitor.svc:80"}) !=1④进程配置

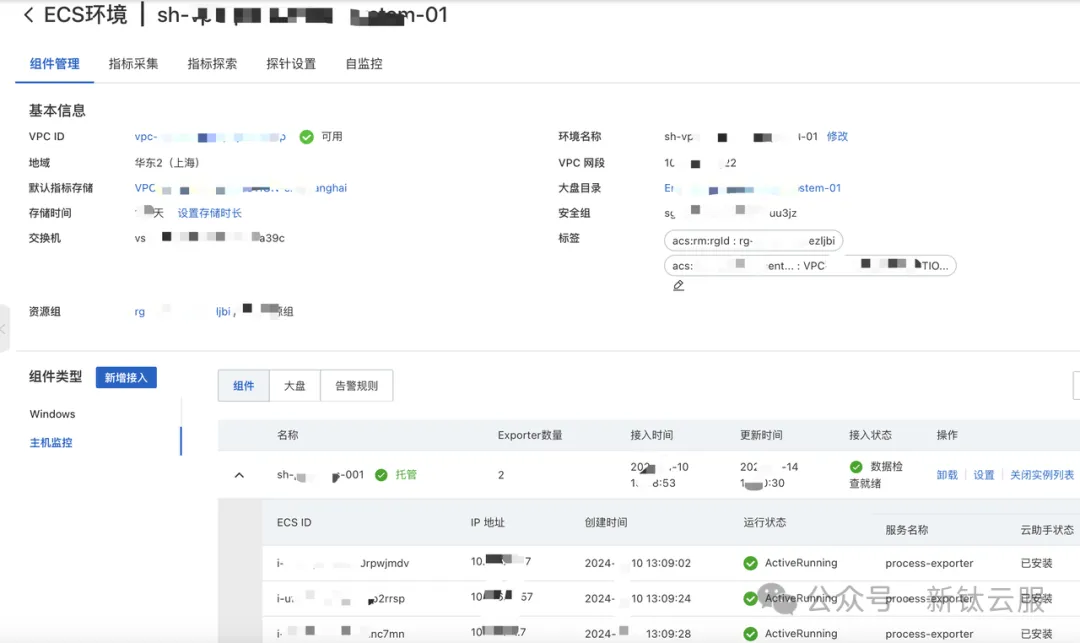

图片

图片

Windows进程状态查询语句如下:

windows_service_start_mode{instanceIp=~"10.10.10.76|10.10.10.252",start_mode="auto",name=~"mysqld|redis"}⑤防火墙配置端口策略

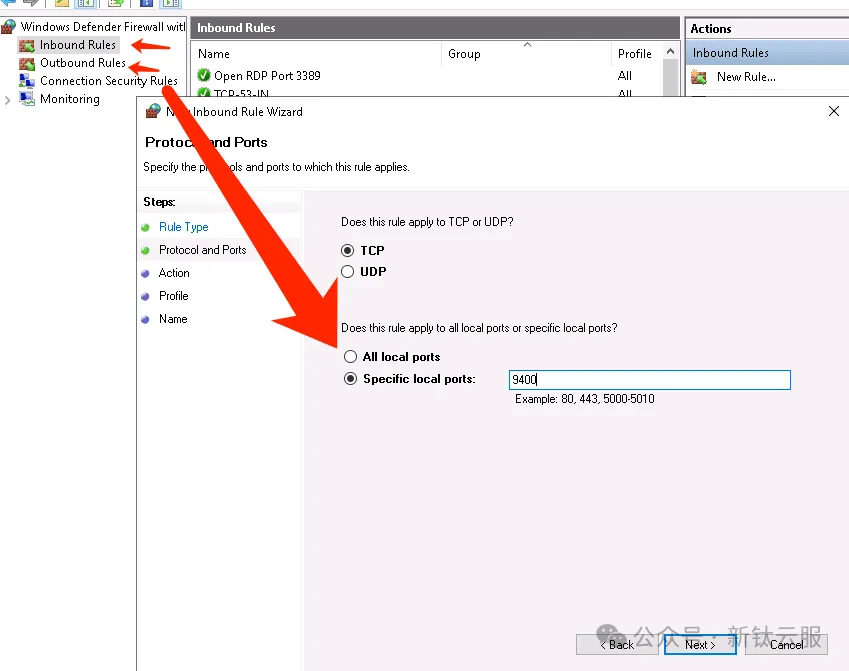

If a Windows target such as http://10.10.10.22:9400/metrics shows as down, check whether Windows Firewall allows port 9400: open cmd and run WF.msc to open Windows Defender Firewall.
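Before changing firewall rules, it can help to confirm from the Prometheus side whether the port is reachable at all. The following is a minimal sketch using only the standard library. `is_port_open` is a hypothetical helper, and the demo probes a throwaway local listener so that it runs anywhere; for the exporter in the text the call would be `is_port_open("10.10.10.22", 9400)`.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection applies the timeout to the connect call itself,
        # and the context manager closes the socket afterwards.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


if __name__ == "__main__":
    # Demo against a temporary local listener on an OS-assigned port.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        local_port = server.getsockname()[1]
        print("listener reachable:", is_port_open("127.0.0.1", local_port))
```

A False result for a host whose exporter process is running points at a firewall or routing problem rather than at the exporter.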

Linux firewall configuration

    # List the iptables rules with line numbers
    iptables -L --line -n
    # Allow port 9400 in the INPUT and OUTPUT chains
    iptables -I INPUT 1 -p tcp --dport 9400 -m comment --comment "Allow arms prometheus - 9400 TCP" -j ACCEPT
    iptables -I OUTPUT 2 -p tcp --dport 9400 -m comment --comment "Allow arms prometheus - 9400 TCP" -j ACCEPT
    # Save the firewall configuration
    service iptables save

Traditional process and port configuration

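① Linux configuration

The script can be pushed and executed with Alibaba Cloud Cloud Assistant or Ansible.

    # Create the script directory and the log directory
    mkdir -p /opt/prot-monitor
    mkdir -p /var/log/prot-monitor

Monitor specific processes and ports, for example the sshd process and port 22: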

    cat port-monitor.sh

    #!/bin/bash
    # Services to monitor
    declare -A MONITORS
    # Format: MONITORS["service name"]="process1,process2:port1,port2"
    MONITORS["ssh"]="sshd:"      # monitor a process
    MONITORS["cyberark"]=":22"   # monitor a port

    # Log file paths
    LOG_DIR="/var/log/prot-monitor"
    DATE=$(date +"%Y-%m-%d")
    PORT_LOG="${LOG_DIR}/port-${DATE}.log"
    PROCESS_LOG="${LOG_DIR}/process-${DATE}.log"

    # Current time
    TIMESTAMP=$(date +"%Y-%m-%d %H:%M:%S")

    # Hostname and IP address
    HOSTNAME=$(hostname)
    IP_ADDRESS=$(hostname -I | awk '{print $1}')  # first IP address only

    # Remove logs older than 30 days
    find "$LOG_DIR" -name "*.log" -type f -mtime +30 -exec rm -f {} \;

    # Print UP if something is listening on the port, otherwise DOWN
    check_port_status() {
        local port=$1
        local port_status="UP"
        local port_list_output
        port_list_output=$(netstat -tunlp | grep -v '@pts\|cpus\|master' | awk '{sub(/.*:/,"",$4);sub(/[0-9]*\//,"",$7);print $4}' | sort -n | uniq | grep -E -w "$port")
        if [[ -z "$port_list_output" ]]; then
            port_status="DOWN"
        fi
        echo "$port_status"
    }

    # Print UP if a matching process is running, otherwise DOWN
    check_process_status() {
        local process=$1
        local process_status="UP"
        if ! ps aux | grep -v grep | grep -q "$process"; then
            process_status="DOWN"
        fi
        echo "$process_status"
    }

    # Check every configured service
    for SERVICE in "${!MONITORS[@]}"; do
        IFS=':' read -r PROCESSES PORTS <<< "${MONITORS[$SERVICE]}"

        # Process checks
        if [[ -n $PROCESSES ]]; then
            IFS=',' read -r -a PROCESS_ARRAY <<< "$PROCESSES"
            for PROCESS in "${PROCESS_ARRAY[@]}"; do
                PROCESS_STATUS=$(check_process_status "$PROCESS")
                echo "{\"timestamp\": \"$TIMESTAMP\", \"hostname\": \"$HOSTNAME\", \"ip_address\": \"$IP_ADDRESS\", \"service\": \"$SERVICE\", \"process\": \"$PROCESS\", \"process_status\": \"$PROCESS_STATUS\"}" >> "$PROCESS_LOG"
            done
        fi

        # Port checks
        if [[ -n $PORTS ]]; then
            IFS=',' read -r -a PORT_ARRAY <<< "$PORTS"
            for PORT in "${PORT_ARRAY[@]}"; do
                PORT_STATUS=$(check_port_status "$PORT")
                echo "{\"timestamp\": \"$TIMESTAMP\", \"hostname\": \"$HOSTNAME\", \"ip_address\": \"$IP_ADDRESS\", \"service\": \"$SERVICE\", \"port_status\": \"$PORT_STATUS\", \"port\": \"$PORT\"}" >> "$PORT_LOG"
            done
        fi
    done

Schedule the script with crontab:

    # Run port-monitor.sh every minute
    crontab -l
    */1 * * * * /bin/bash /opt/prot-monitor/port-monitor.sh
    # Run the script once by hand and check the log output
    bash /opt/prot-monitor/port-monitor.sh
    # Port log (JSON)
    cat /var/log/prot-monitor/port-2024-11-18.log
    {"timestamp": "2024-11-18 13:51:01", "hostname": "iZufXXXXXXXXXXXXXXX4Z", "ip_address": "10.10.10.80", "service": "sshd", "port_status": "UP", "port": "22"}
    # Process log (JSON)
    cat /var/log/prot-monitor/process-2024-11-18.log
    {"timestamp": "2024-11-18 13:50:01", "hostname": "iZufxxxxxxxxxxxxxx4Z", "ip_address": "10.10.10.80", "service": "sshd", "process": "sshd", "process_status": "UP"}

Both the process log and the port log should report UP.
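Because each log line is a standalone JSON object, the logs are easy to scan locally before they reach SLS. The sketch below is a minimal example that returns the entries reporting DOWN. The field names match the log samples in this section, while `find_down_entries` and the sample lines with hostname `host-a` are hypothetical.

```python
import json
from typing import Iterable, List


def find_down_entries(lines: Iterable[str]) -> List[dict]:
    """Return parsed log entries whose port_status or process_status is DOWN."""
    down = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip partially written or corrupt lines
        if not isinstance(entry, dict):
            continue
        if "DOWN" in (entry.get("port_status"), entry.get("process_status")):
            down.append(entry)
    return down


if __name__ == "__main__":
    # Hypothetical log lines in the same shape the monitoring script writes.
    sample = [
        '{"timestamp": "2024-11-18 13:51:01", "hostname": "host-a", "ip_address": "10.10.10.80", "service": "sshd", "port_status": "UP", "port": "22"}',
        '{"timestamp": "2024-11-18 13:52:01", "hostname": "host-a", "ip_address": "10.10.10.80", "service": "sshd", "port_status": "DOWN", "port": "22"}',
    ]
    for entry in find_down_entries(sample):
        print(entry["timestamp"], entry["service"], "DOWN")
```

To scan a real file, pass an open file object, for example `find_down_entries(open("/var/log/prot-monitor/port-2024-11-18.log"))`.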

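    # Start crond and check its status
    systemctl start crond
    systemctl status crond
    # Check whether crond is enabled at boot
    systemctl list-unit-files -t service | grep cron

Alibaba Cloud SLS (Log Service) then collects the logs from these paths on the server.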

    # Download the Logtail installer on the server
    wget http://logtail-release-cn-hangzhou.oss-cn-hangzhou.aliyuncs.com/linux64/logtail.sh -O logtail.sh; chmod +x logtail.sh
    # Install and run the Logtail service
    ./logtail.sh install auto
    sudo /etc/init.d/ilogtaild start
    sudo /etc/init.d/ilogtaild status
    # Check that the service starts at boot
    systemctl list-unit-files -t service | grep ilo

② Windows configuration

Monitor specific processes and ports, for example the processes PM.exe, CA.exe, and rdpclip.exe, and port 3389.

    # Create the directory
    md C:\monitor-logs

    cat C:\monitor-logs\port-monitor.ps1

    # Services to monitor, in the form "process1,process2:port1,port2"
    $MONITORS = @{
        "cyberark" = "PM.exe,CA.exe,rdpclip.exe:"  # monitor processes
        "rdp" = ":3389"                            # monitor a port
    }

    # Log file paths
    $LOG_DIR = "C:\monitor-logs"
    if (!(Test-Path $LOG_DIR)) {
        New-Item -Path $LOG_DIR -ItemType Directory | Out-Null
    }
    $DATE = Get-Date -Format "yyyy-MM-dd"
    $PORT_LOG = Join-Path $LOG_DIR "port-$DATE.log"
    $PROCESS_LOG = Join-Path $LOG_DIR "process-$DATE.log"

    # Current time
    $TIMESTAMP = Get-Date -Format "yyyy-MM-dd HH:mm:ss"

    # Hostname and IP address (first non-loopback IPv4 address, as in the Linux script)
    $HOSTNAME = $env:COMPUTERNAME
    $IP_ADDRESS = (Get-NetIPAddress | Where-Object { $_.AddressFamily -eq 'IPv4' -and $_.InterfaceAlias -ne 'Loopback Pseudo-Interface 1' } | Select-Object -First 1).IPAddress

    # Remove logs older than 30 days
    Get-ChildItem -Path $LOG_DIR -Filter "*.log" | Where-Object { $_.LastWriteTime -lt (Get-Date).AddDays(-30) } | Remove-Item

    # Return UP if any TCP connection uses the local port, otherwise DOWN
    function Check-PortStatus {
        param (
            [int]$port
        )
        $port_status = "UP"
        if (-not (Get-NetTCPConnection -LocalPort $port -ErrorAction SilentlyContinue)) {
            $port_status = "DOWN"
        }
        return $port_status
    }

    # Look the process up with tasklist and findstr
    function Check-ProcessStatus {
        param (
            [string]$processNameToCheck
        )
        Write-Host "Checking process: $processNameToCheck"
        $process_status = "UP"
        # Call tasklist directly; Invoke-Expression is not needed for a native pipeline
        $process_found = tasklist /FO CSV /NH | findstr /I "$processNameToCheck"
        if (-not $process_found) {
            Write-Host "Process $processNameToCheck not found."
            $process_status = "DOWN"
        } else {
            Write-Host "Process found: $processNameToCheck"
        }
        return $process_status
    }

    # Check every configured service
    foreach ($SERVICE in $MONITORS.Keys) {
        $entry = $MONITORS[$SERVICE]
        $parts = $entry -split ':'
        $processes = $parts[0]
        $ports = $parts[1]

        # Process checks
        if ($processes) {
            $process_array = $processes -split ',' | Where-Object { $_ -ne "" }
            foreach ($process in $process_array) {
                $process_status = Check-ProcessStatus -processNameToCheck $process
                $log_entry = @{
                    timestamp = $TIMESTAMP
                    hostname = $HOSTNAME
                    ip_address = $IP_ADDRESS
                    service = $SERVICE
                    process = $process
                    process_status = $process_status
                }
                ($log_entry | ConvertTo-Json -Compress) | Out-File -Append -FilePath $PROCESS_LOG
            }
        }

        # Port checks
        if ($ports) {
            $port_array = $ports -split ',' | Where-Object { $_ -ne "" }
            foreach ($port in $port_array) {
                $port_status = Check-PortStatus -port $port
                $log_entry = @{
                    timestamp = $TIMESTAMP
                    hostname = $HOSTNAME
                    ip_address = $IP_ADDRESS
                    service = $SERVICE
                    port_status = $port_status
                    port = $port
                }
                ($log_entry | ConvertTo-Json -Compress) | Out-File -Append -FilePath $PORT_LOG
            }
        }
    }

Query information about specific processes:

tasklist /FO CSV /NH | findstr /I "PM.exe"

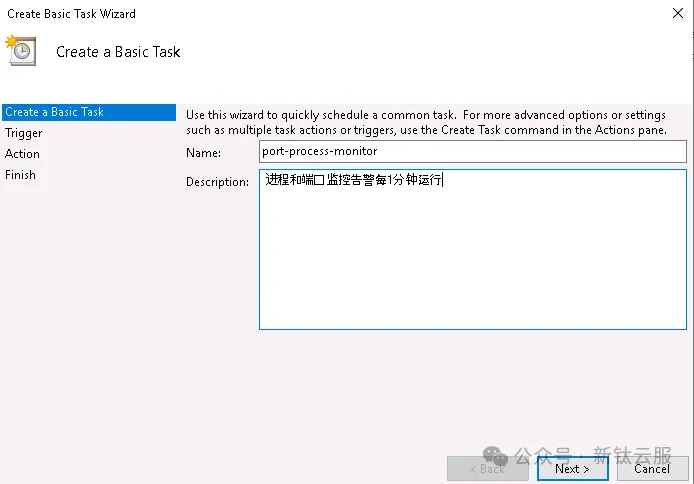

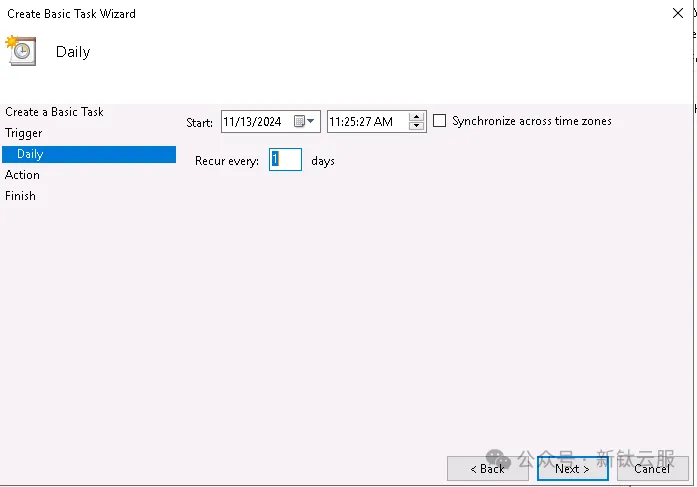

tasklist /FO CSV /NH | findstr /I "CA.exe"③计划任务

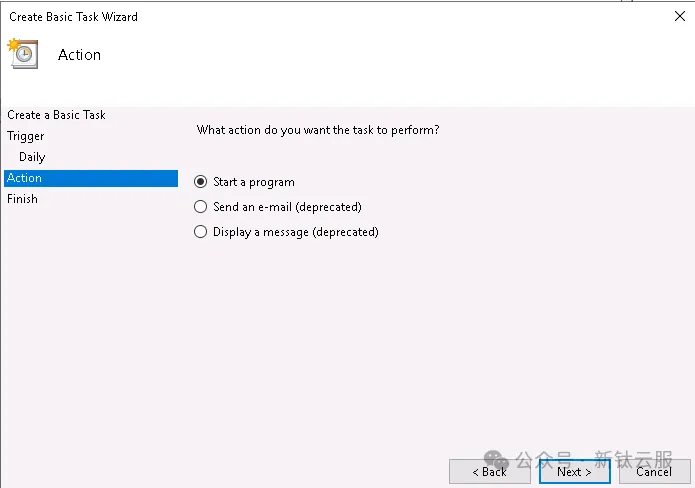

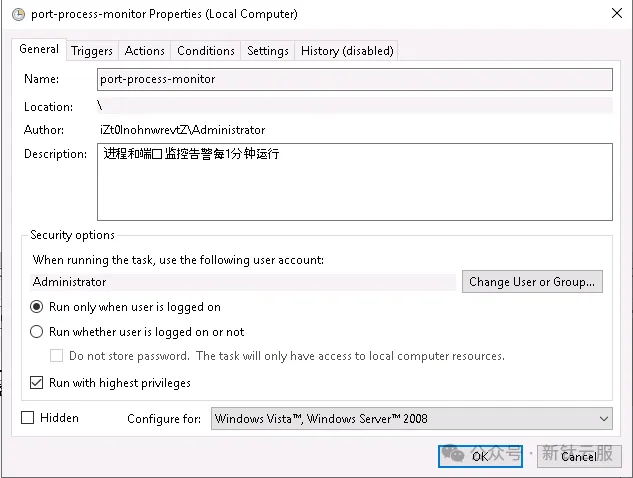

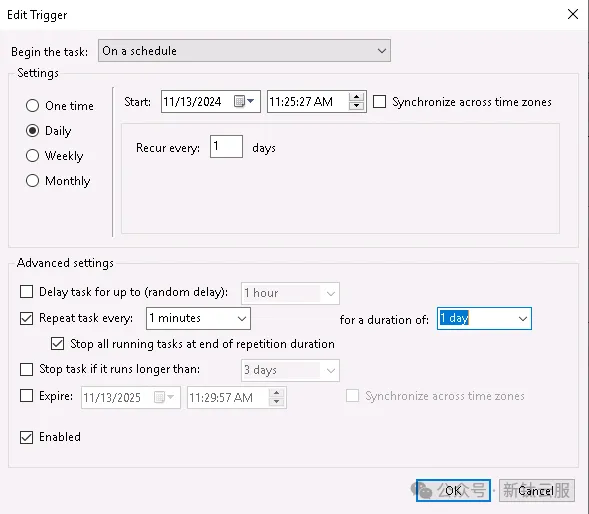

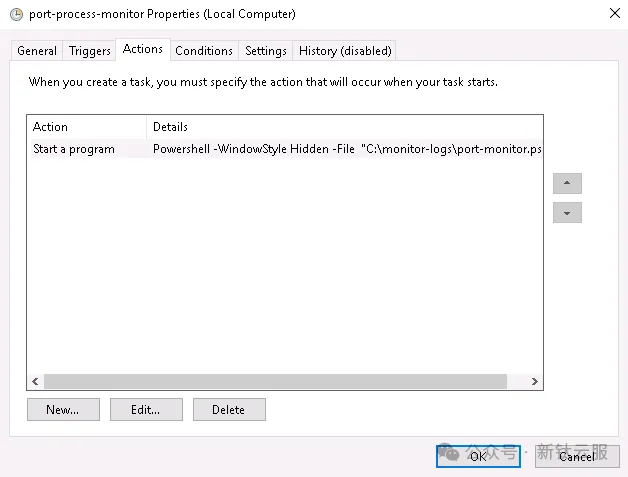

Run taskschd.msc from cmd to open Task Scheduler.

The process and port monitoring task runs every minute and executes the following command:

    powershell -WindowStyle Hidden -File "C:\monitor-logs\port-monitor.ps1"

④ SLS alert configuration for processes and ports

    # Port query
    *| select timestamp,ip_address,hostname,service,port_status,port from log where port_status is not null and port is not null ORDER BY timestamp DESC limit 1000
    # Process query
    *| select timestamp,ip_address,hostname,service,process_status from log where process_status is not null ORDER BY timestamp DESC limit 1000

⑤ Grafana dashboard

Intranet domain status checks

① Dockerfile configuration

    FROM python
    # Set the container time zone
    ENV TZ=Asia/Shanghai
    RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
    # Install dependencies from the Tsinghua PyPI mirror
    RUN pip install --upgrade -i https://pypi.tuna.tsinghua.edu.cn/simple pyyaml requests
    COPY domain_return_code.py /opt/
    # Placeholder command; the CronJob overrides it to run the check script
    CMD ["sleep","999"]

② Check script and debugging

    #!/usr/bin/env python
    # Fetch each site's HTTP status code, response time, and certificate expiry date.
    import datetime
    import json
    import socket
    import ssl
    from urllib.parse import urlparse

    import requests
    import yaml

    # Returned when the certificate cannot be read; formats as 2008-08-08 in UTC.
    FALLBACK_EXPIRY = 1218168000


    def get_cert_expiration_date(url, port):
        """Return the certificate's notAfter time as a Unix timestamp."""
        try:
            host = urlparse(url).hostname
            context = ssl.create_default_context()
            with socket.create_connection((host, port), timeout=10) as sock:
                with context.wrap_socket(sock, server_hostname=host) as sslsock:
                    cert = sslsock.getpeercert()
            return ssl.cert_time_to_seconds(cert["notAfter"])
        except Exception:
            # Invalid or unreachable certificate: return the fallback date.
            return FALLBACK_EXPIRY


    def get_status_code(url, timeout):
        """Return (status_code, response_seconds, expiry 'YYYY-MM-DD'), or None if the request fails."""
        try:
            requests.packages.urllib3.disable_warnings(requests.packages.urllib3.exceptions.InsecureRequestWarning)
            response = requests.get(url, timeout=timeout, verify=False)
            response_time = response.elapsed.total_seconds()
            # Certificate expiry time
            cert_expiration_date = get_cert_expiration_date(url, 443)
            # Convert the timestamp to a year-month-day string
            date_array = datetime.datetime.fromtimestamp(cert_expiration_date, datetime.timezone.utc)
            return response.status_code, response_time, date_array.strftime("%Y-%m-%d")
        except requests.exceptions.RequestException:
            return None


    def get_domain_returncode(url, timeout):
        result = get_status_code(url, timeout=timeout)
        if result is not None:
            return result
        # Request failed: report 504, a response time of 100, and the fallback date.
        return 504, 100, "2008-08-08"


    def read_yaml(file_path):
        with open(file_path, "r", encoding="utf-8") as file:
            try:
                return yaml.safe_load(file)
            except yaml.YAMLError as e:
                print("Error while reading the YAML file:", e)
                return None


    if __name__ == "__main__":
        file_path = "/opt/domain_returncode_conf.yaml"
        config = read_yaml(file_path)["domain"]
        for item in config:
            code = get_domain_returncode(item["Name"], timeout=item["timeout"])
            # Start from the configured fields, then add the check results.
            domain_status = dict(item)
            domain_status["return_code"] = code[0]
            # Despite its name, "response_code" holds the response time in seconds.
            domain_status["response_code"] = code[1]
            domain_status["EndDate"] = code[2]
            print(json.dumps(domain_status))

    cat /opt/domain_returncode_conf.yaml

    domain:
      - Name: https://admin.export.cn
        timeout: 15
        Network: intranet
      - Name: https://api.export.cn
        timeout: 15
        Network: intranet

③ CronJob configuration

    cat aliyun_domain_code.yaml

    # batch/v1 is the stable CronJob API since Kubernetes 1.21;
    # batch/v1beta1 was removed in 1.25 and is only needed on older clusters.
    apiVersion: batch/v1
    kind: CronJob
    metadata:
      name: aliyun-domaincode-monitor-server
      # Must match the ConfigMap's namespace, otherwise the volume cannot be mounted.
      namespace: monitoring
    spec:
      schedule: "*/1 * * * *"
      concurrencyPolicy: Forbid
      jobTemplate:
        spec:
          parallelism: 1
          completions: 1
          backoffLimit: 3
          activeDeadlineSeconds: 60
          ttlSecondsAfterFinished: 600
          template:
            spec:
              volumes:
                - name: domain-returncode-config
                  configMap:
                    name: domain-returncode
              containers:
                - name: aliyun-domaincode-monitor
                  image: harbor.export.cn/ops/domain-return-code:v1
                  #imagePullSecrets:
                  imagePullPolicy: Always
                  command:
                    - /bin/sh
                    - -c
                    - python3 /opt/domain_return_code.py
                  volumeMounts:
                    - name: domain-returncode-config
                      mountPath: /opt/domain_returncode_conf.yaml
                      subPath: domain_returncode_conf.yaml
              restartPolicy: OnFailure
      startingDeadlineSeconds: 300
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: domain-returncode
      namespace: monitoring
    data:
      domain_returncode_conf.yaml: |
        domain:
          - Name: https://admin.export.cn
            timeout: 15
            Network: internet+intranet
            environment: prod
            vendor: zhangsan
            application_name: admin
            application_owner: zhangsan
            tech_owner: zhangsan
            Assignment_group: admin Operations
          - Name: https://api.test.cn
            timeout: 15
            Network: internet
            environment: prod
            vendor: wangwu
            application_name: api
            application_owner: test
            tech_owner: test
            Assignment_group: api Support

④ Log output and SLS SQL queries

    # Sample log output
    {"Name": "https://admin.export.cn", "return_code": 200, "response_code": 5.087571, "EndDate": "2025-01-21", "timeout": 15, "Network": "internet+intranet", "environment": "prod", "vendor": "zhangsan", "application_name": "admin", "application_owner": "zhangsan", "tech_owner": "zhangsan", "Assignment_group": "admin Operations"}

Alerts are then configured on SLS queries:

    # Certificates that expire within the next 60 days
    (EndDate: * and _namespace_ : monitoring and _container_name_: aliyun-domaincode-monitor)| select DISTINCT Name,return_code,EndDate,date_diff('day', date_parse(split(_time_, 'T')[1], '%Y-%m-%d'), date_parse(EndDate, '%Y-%m-%d')) as days having days > 0 AND days <= 60 ORDER BY days ASC LIMIT 1000
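The `days > 0 AND days <= 60` condition in the certificate query can be reproduced locally to sanity-check an `EndDate` value. The sketch below is a minimal illustration. `days_until_expiry` and `needs_renewal` are hypothetical helpers, and the 60-day window is taken from the query above.

```python
from datetime import date, datetime
from typing import Optional


def days_until_expiry(end_date: str, today: Optional[date] = None) -> int:
    """Days from `today` until `end_date` ('YYYY-MM-DD'); negative once expired."""
    today = today or date.today()
    expiry = datetime.strptime(end_date, "%Y-%m-%d").date()
    return (expiry - today).days


def needs_renewal(end_date: str, today: Optional[date] = None, window_days: int = 60) -> bool:
    """True when the certificate expires within the next `window_days` days."""
    days = days_until_expiry(end_date, today)
    # Mirrors the query: already-expired dates (days <= 0) are excluded.
    return 0 < days <= window_days


if __name__ == "__main__":
    reference_day = date(2024, 12, 1)
    print(days_until_expiry("2025-01-21", reference_day))
    print(needs_renewal("2025-01-21", reference_day))
```

Because the lower bound is exclusive, the script's 2008-08-08 fallback date never matches this rule, so unreadable certificates need to be caught by a separate check.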

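    # Domains that return 5xx status codes
    (((return_code : 5?? ))) not name:"https://view.export.cn" | SELECT DISTINCT Name,return_code,content from log LIMIT 10000

In summary, Company A used Prometheus to monitor ports, processes, and intranet domain status across its Windows and Linux hosts. The workflow covered tool selection and deployment, port and process monitoring, alerting, and visualization and tuning, which together help keep the IT infrastructure stable and secure. With Grafana dashboards and alert rules in place, the operations and development engineers are notified promptly, which improves availability and response times. These measures let Company A manage and maintain its business-critical services and support continued growth.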