大家好,我是小寒

上次,我们从理论的角度给大家详细介绍了什么是 Transformer 算法,并对 Transformer 的核心组件进行了完整的解读。

今天将带领大家使用 PyTorch 来从头构建一个 Transformer 模型

使用 PyTorch 构建 Transformer 模型

1.导入必要的库和模块

我们将首先导入 PyTorch 库以实现核心功能、导入神经网络模块以创建神经网络、导入优化模块以训练网络。

import torch

import torch.nn as nn

import torch.optim as optim

import torch.utils.data as data

import math

import copy- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

2. 定义基本构建块

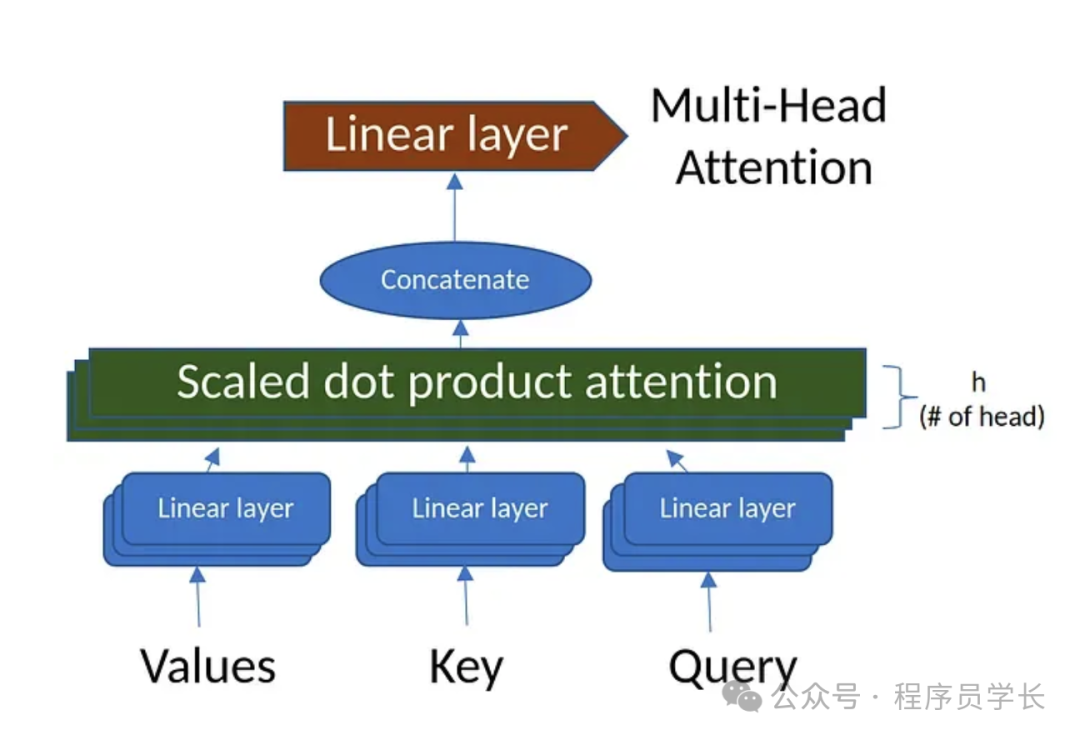

多头注意力机制

多头注意力机制计算序列中每对位置之间的注意力。

它由多个“注意力头”组成,用于捕捉输入序列的不同方面。

图片

图片

class MultiHeadAttention(nn.Module):

# d_model:输入的维数

# num_heads:注意力头的数量

def __init__(self, d_model, num_heads):

super(MultiHeadAttention, self).__init__()

# Ensure that the model dimension (d_model) is divisible by the number of heads

assert d_model % num_heads == 0, "d_model must be divisible by num_heads"

# Initialize dimensions

self.d_model = d_model # Model's dimension

self.num_heads = num_heads # Number of attention heads

self.d_k = d_model // num_heads # Dimension of each head's key, query, and value

# Linear layers for transforming inputs

self.W_q = nn.Linear(d_model, d_model) # Query transformation

self.W_k = nn.Linear(d_model, d_model) # Key transformation

self.W_v = nn.Linear(d_model, d_model) # Value transformation

self.W_o = nn.Linear(d_model, d_model) # Output transformation

# 缩放点积注意力机制

def scaled_dot_product_attention(self, Q, K, V, mask=None):

# Calculate attention scores

attn_scores = torch.matmul(Q, K.transpose(-2, -1)) / math.sqrt(self.d_k)

# Apply mask if provided (useful for preventing attention to certain parts like padding)

if mask is not None:

attn_scores = attn_scores.masked_fill(mask == 0, -1e9)

# Softmax is applied to obtain attention probabilities

attn_probs = torch.softmax(attn_scores, dim=-1)

# Multiply by values to obtain the final output

output = torch.matmul(attn_probs, V)

return output

def split_heads(self, x):

# Reshape the input to have num_heads for multi-head attention

batch_size, seq_length, d_model = x.size()

return x.view(batch_size, seq_length, self.num_heads, self.d_k).transpose(1, 2)

def combine_heads(self, x):

# Combine the multiple heads back to original shape

batch_size, _, seq_length, d_k = x.size()

return x.transpose(1, 2).contiguous().view(batch_size, seq_length, self.d_model)

def forward(self, Q, K, V, mask=None):

# Apply linear transformations and split heads

Q = self.split_heads(self.W_q(Q))

K = self.split_heads(self.W_k(K))

V = self.split_heads(self.W_v(V))

# Perform scaled dot-product attention

attn_output = self.scaled_dot_product_attention(Q, K, V, mask)

# Combine heads and apply output transformation

output = self.W_o(self.combine_heads(attn_output))

return output- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

- 39.

- 40.

- 41.

- 42.

- 43.

- 44.

- 45.

- 46.

- 47.

- 48.

- 49.

- 50.

- 51.

- 52.

- 53.

- 54.

- 55.

- 56.

- 57.

MultiHeadAttention 类封装了 Transformer 模型中常用的多头注意力机制,负责将输入拆分成多个注意力头,对每个注意力头施加注意力,然后将结果组合起来,这样模型就可以在不同尺度上捕捉输入数据中的各种关系,提高模型的表达能力。

前馈网络

class PositionWiseFeedForward(nn.Module):

def __init__(self, d_model, d_ff):

super(PositionWiseFeedForward, self).__init__()

self.fc1 = nn.Linear(d_model, d_ff)

self.fc2 = nn.Linear(d_ff, d_model)

self.relu = nn.ReLU()

def forward(self, x):

return self.fc2(self.relu(self.fc1(x)))- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

PositionWiseFeedForward 类定义了一个前馈神经网络,它由两个线性层组成,中间有一个 ReLU 激活函数。

位置编码

位置编码用于注入输入序列中每个 token 的位置信息。它使用不同频率的正弦和余弦函数来生成位置编码。

class PositionalEncoding(nn.Module):

def __init__(self, d_model, max_seq_length):

super(PositionalEncoding, self).__init__()

pe = torch.zeros(max_seq_length, d_model)

position = torch.arange(0, max_seq_length, dtype=torch.float).unsqueeze(1)

div_term = torch.exp(torch.arange(0, d_model, 2).float() * -(math.log(10000.0) / d_model))

pe[:, 0::2] = torch.sin(position * div_term)

pe[:, 1::2] = torch.cos(position * div_term)

self.register_buffer('pe', pe.unsqueeze(0))

def forward(self, x):

return x + self.pe[:, :x.size(1)]- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

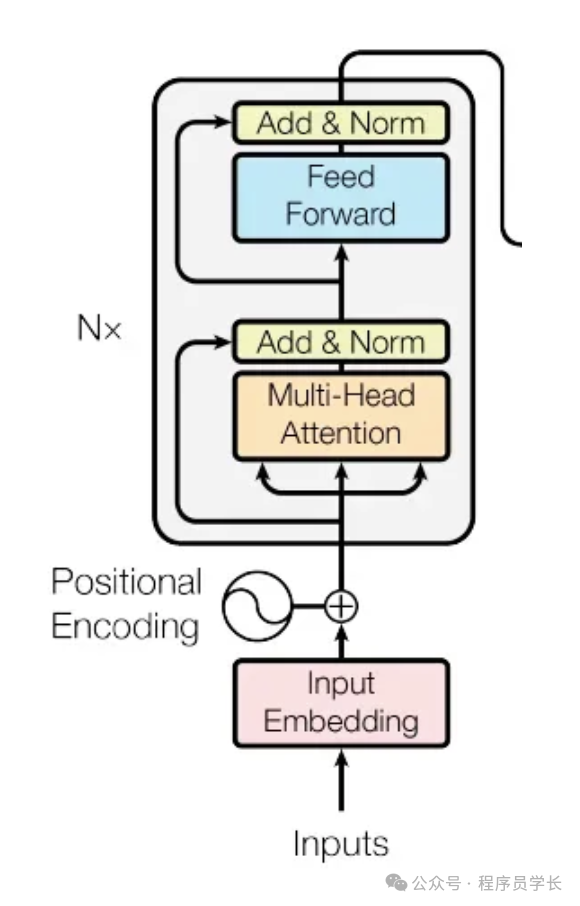

3.构建编码器模块

图片

图片

EncoderLayer 类定义 Transformer 编码器的单层。

它封装了一个多头自注意力机制,随后是前馈神经网络,并根据需要应用残差连接、层规范化。

这些组件一起允许编码器捕获输入数据中的复杂关系,并将其转换为下游任务的有用表示。

通常,多个这样的编码器层堆叠在一起以形成 Transformer 模型的完整编码器部分。

class EncoderLayer(nn.Module):

def __init__(self, d_model, num_heads, d_ff, dropout):

super(EncoderLayer, self).__init__()

self.self_attn = MultiHeadAttention(d_model, num_heads)

self.feed_forward = PositionWiseFeedForward(d_model, d_ff)

self.norm1 = nn.LayerNorm(d_model)

self.norm2 = nn.LayerNorm(d_model)

self.dropout = nn.Dropout(dropout)

def forward(self, x, mask):

attn_output = self.self_attn(x, x, x, mask)

x = self.norm1(x + self.dropout(attn_output))

ff_output = self.feed_forward(x)

x = self.norm2(x + self.dropout(ff_output))

return x- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

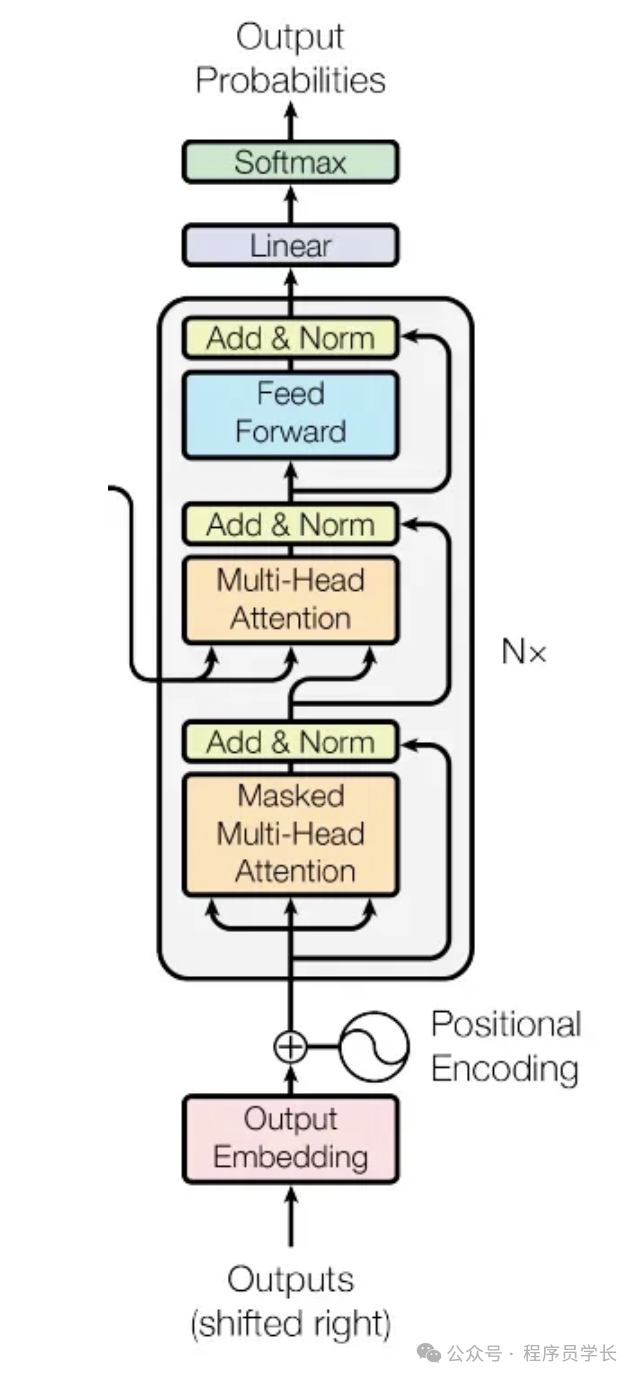

4.构建解码器模块

图片

图片

DecoderLayer 类定义 Transformer 解码器的单层。

它由多头自注意力机制、多头交叉注意力机制(关注编码器的输出)、前馈神经网络以及相应的残差连接、层规范化组成。

这种组合使解码器能够根据编码器的表示生成有意义的输出,同时考虑目标序列和源序列。

与编码器一样,多个解码器层通常堆叠在一起以形成 Transformer 模型的完整解码器部分。

class DecoderLayer(nn.Module):

def __init__(self, d_model, num_heads, d_ff, dropout):

super(DecoderLayer, self).__init__()

self.self_attn = MultiHeadAttention(d_model, num_heads)

self.cross_attn = MultiHeadAttention(d_model, num_heads)

self.feed_forward = PositionWiseFeedForward(d_model, d_ff)

self.norm1 = nn.LayerNorm(d_model)

self.norm2 = nn.LayerNorm(d_model)

self.norm3 = nn.LayerNorm(d_model)

self.dropout = nn.Dropout(dropout)

def forward(self, x, enc_output, src_mask, tgt_mask):

attn_output = self.self_attn(x, x, x, tgt_mask)

x = self.norm1(x + self.dropout(attn_output))

attn_output = self.cross_attn(x, enc_output, enc_output, src_mask)

x = self.norm2(x + self.dropout(attn_output))

ff_output = self.feed_forward(x)

x = self.norm3(x + self.dropout(ff_output))

return x- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

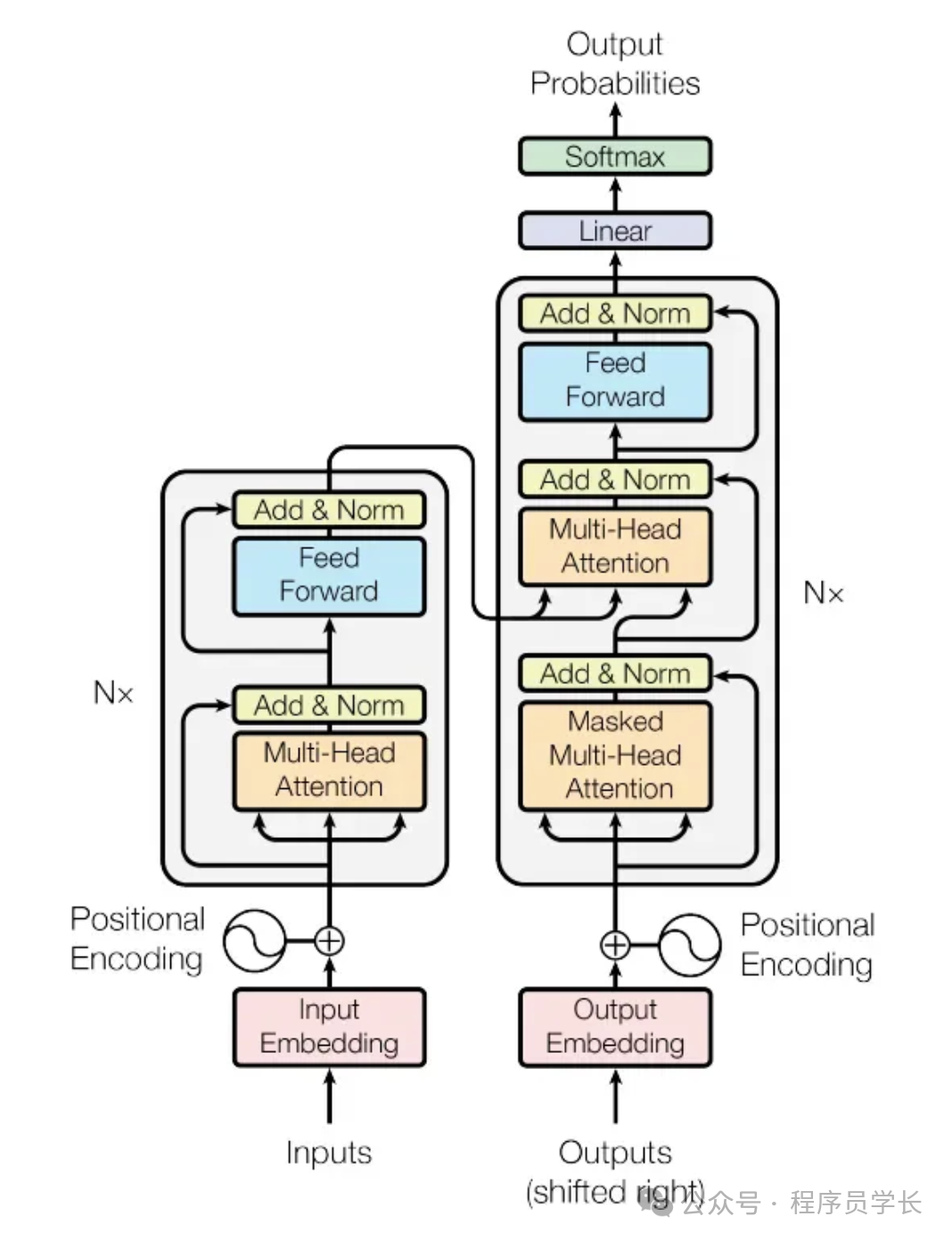

5.结合编码器和解码器层来创建完整的 Transformer 网络

图片

图片

class Transformer(nn.Module):

def __init__(self, src_vocab_size, tgt_vocab_size, d_model, num_heads, num_layers, d_ff, max_seq_length, dropout):

super(Transformer, self).__init__()

self.encoder_embedding = nn.Embedding(src_vocab_size, d_model)

self.decoder_embedding = nn.Embedding(tgt_vocab_size, d_model)

self.positional_encoding = PositionalEncoding(d_model, max_seq_length)

self.encoder_layers = nn.ModuleList([EncoderLayer(d_model, num_heads, d_ff, dropout) for _ in range(num_layers)])

self.decoder_layers = nn.ModuleList([DecoderLayer(d_model, num_heads, d_ff, dropout) for _ in range(num_layers)])

self.fc = nn.Linear(d_model, tgt_vocab_size)

self.dropout = nn.Dropout(dropout)

def generate_mask(self, src, tgt):

src_mask = (src != 0).unsqueeze(1).unsqueeze(2)

tgt_mask = (tgt != 0).unsqueeze(1).unsqueeze(3)

seq_length = tgt.size(1)

nopeak_mask = (1 - torch.triu(torch.ones(1, seq_length, seq_length), diagnotallow=1)).bool()

tgt_mask = tgt_mask & nopeak_mask

return src_mask, tgt_mask

def forward(self, src, tgt):

src_mask, tgt_mask = self.generate_mask(src, tgt)

src_embedded = self.dropout(self.positional_encoding(self.encoder_embedding(src)))

tgt_embedded = self.dropout(self.positional_encoding(self.decoder_embedding(tgt)))

enc_output = src_embedded

for enc_layer in self.encoder_layers:

enc_output = enc_layer(enc_output, src_mask)

dec_output = tgt_embedded

for dec_layer in self.decoder_layers:

dec_output = dec_layer(dec_output, enc_output, src_mask, tgt_mask)

output = self.fc(dec_output)

return output- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

Transformer 类将 Transformer 模型的各个组件整合在一起,包括嵌入、位置编码、编码器层和解码器层。

它提供了一个方便的训练和推理接口,封装了多头注意力、前馈网络和层规范化的复杂性。

训练 PyTorch Transformer 模型

1.样本数据准备

为了便于说明,本例中将制作一个虚拟数据集。

但在实际情况下,将使用更大规模的数据集,并且该过程将涉及文本预处理以及为源语言和目标语言创建词汇表映射。

src_vocab_size = 5000

tgt_vocab_size = 5000

d_model = 512

num_heads = 8

num_layers = 6

d_ff = 2048

max_seq_length = 100

dropout = 0.1

transformer = Transformer(src_vocab_size, tgt_vocab_size, d_model, num_heads, num_layers, d_ff, max_seq_length, dropout)

# Generate random sample data

src_data = torch.randint(1, src_vocab_size, (64, max_seq_length)) # (batch_size, seq_length)

tgt_data = torch.randint(1, tgt_vocab_size, (64, max_seq_length)) # (batch_size, seq_length)- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

2.训练模型

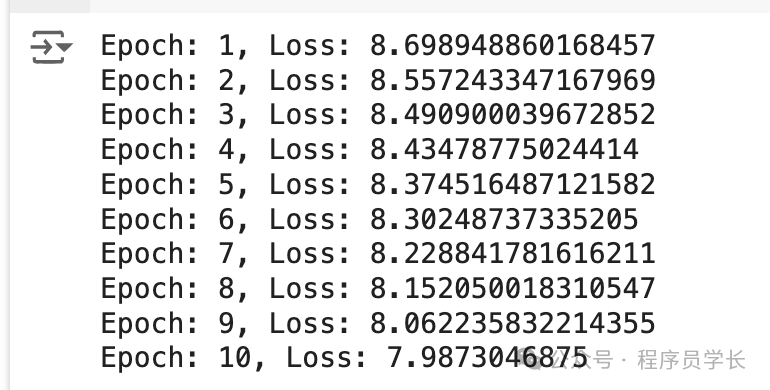

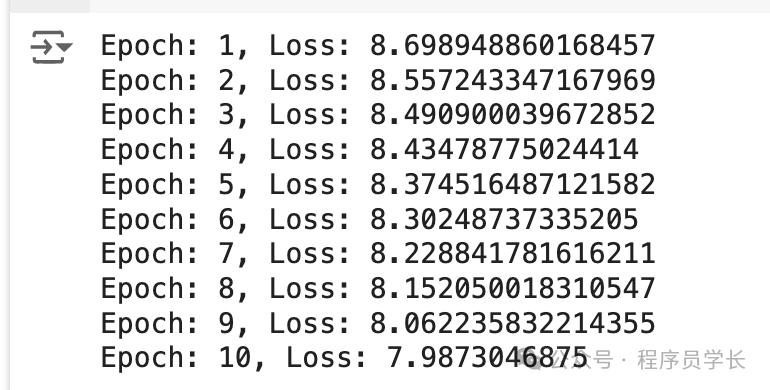

接下来,将利用上述样本数据训练模型。

transformer = Transformer(src_vocab_size, tgt_vocab_size, d_model, num_heads, num_layers, d_ff, max_seq_length, dropout)

criterion = nn.CrossEntropyLoss(ignore_index=0)

optimizer = optim.Adam(transformer.parameters(), lr=0.0001, betas=(0.9, 0.98), eps=1e-9)

transformer.train()

for epoch in range(10):

optimizer.zero_grad()

output = transformer(src_data, tgt_data[:, :-1])

loss = criterion(output.contiguous().view(-1, tgt_vocab_size), tgt_data[:, 1:].contiguous().view(-1))

loss.backward()

optimizer.step()

print(f"Epoch: {epoch+1}, Loss: {loss.item()}")- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

图片

图片

图片

图片