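This article covers several optimizations that can be applied to retrieved chunks before they are sent to the LLM. They help the model make better use of the retrieved context and produce better answers: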

- Long-text Reorder

- Contextual compression

- Refine

- Emotion Prompt

Long-text Reorder

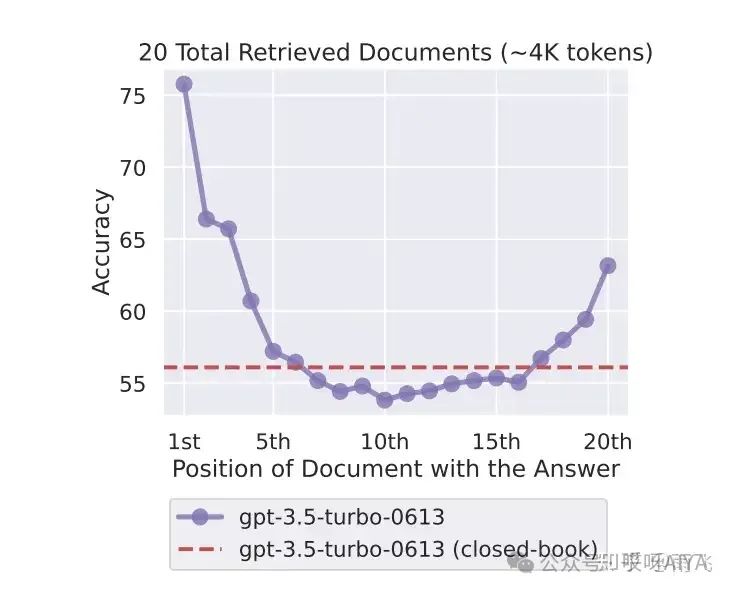

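Experiments in the paper Lost in the Middle: How Language Models Use Long Contexts show that LLMs use information at the beginning and end of the input context most effectively. Performance drops noticeably when the relevant information sits in the middle. The retrieved documents can therefore be reordered by their relevance to the query. The most relevant ones go at the beginning and end of the context, and the least relevant ones end up in the middle.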
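Here is a minimal, dependency-free sketch of that idea. The function takes (document, score) pairs, where the scores are assumed to come from the retriever or a reranker. It sorts the pairs by relevance and then alternates documents between the front and the back of the list. The name `lost_in_the_middle_order` is hypothetical. For an even number of documents, it may place the top document at a different end than LangChain's helper does. Both approaches keep the least relevant documents in the middle.

```python
from typing import List, Sequence, Tuple


def lost_in_the_middle_order(scored_docs: Sequence[Tuple[str, float]]) -> List[str]:
    """Place the most relevant docs at both ends and the least relevant in the middle."""
    # Sort by relevance score, highest first (scores are assumed to be given).
    ranked = [doc for doc, _ in sorted(scored_docs, key=lambda p: p[1], reverse=True)]
    front: List[str] = []
    back: List[str] = []
    for i, doc in enumerate(ranked):
        # Ranks 1, 3, 5, ... fill the list from the front;
        # ranks 2, 4, 6, ... fill it from the back.
        if i % 2 == 0:
            front.append(doc)
        else:
            back.append(doc)
    # `back` was filled from the outside in, so reverse it so that the
    # second most relevant document ends up in the last position.
    return front + back[::-1]


if __name__ == "__main__":
    docs = [("a", 0.9), ("b", 0.2), ("c", 0.7), ("d", 0.5), ("e", 0.1)]
    print(lost_in_the_middle_order(docs))  # ['a', 'd', 'e', 'b', 'c']
```

In this example, the top document `a` comes first, the runner-up `c` comes last, and the weakest document `e` lands in the middle.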


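The core logic can be borrowed from LangChain's implementation. It assumes the input list is already sorted by relevance, most relevant first: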

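```python
from typing import List

from langchain_core.documents import Document


def _litm_reordering(documents: List[Document]) -> List[Document]:
    """Lost in the middle reorder: the less relevant documents will be at the
    middle of the list and more relevant elements at beginning / end.
    See: https://arxiv.org/abs/2307.03172"""
    # Expects `documents` sorted most relevant first; walk from least to most
    # relevant. reversed() leaves the caller's list unmodified.
    reordered_result: List[Document] = []
    for i, value in enumerate(reversed(documents)):
        if i % 2 == 1:
            reordered_result.append(value)  # odd steps go to the back
        else:
            reordered_result.insert(0, value)  # even steps go to the front
    # The documents added last (the most relevant) end up at the two ends,
    # while the first ones added (the least relevant) stay in the middle.
    return reordered_result
```

Contextual compression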

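In essence, an LLM checks each retrieved document against the user query, and only the relevant content is passed on to the final prompt. With LangChain's LLMChainExtractor, the LLM extracts only the query-relevant parts of each document and drops documents that contain nothing relevant. LLMChainFilter instead keeps or discards whole documents.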
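The filtering idea can be sketched without any framework. In this sketch, `judge(query, doc)` is a hypothetical callable that returns a relevance score in [0, 1]. In practice it would wrap an LLM prompt that asks how relevant a passage is to the question. `keyword_judge` is only a toy stand-in so that the code runs offline. Documents scoring above `threshold` are kept, best first, up to `k`. This illustrates the filter/top-k variant, not LangChain's passage extraction.

```python
from typing import Callable, List

# judge(query, doc) -> relevance score in [0, 1]. In a real pipeline this
# would wrap an LLM call; here it is a hypothetical, pluggable callable.
Judge = Callable[[str, str], float]


def compress(query: str, docs: List[str], judge: Judge,
             k: int = 3, threshold: float = 0.0) -> List[str]:
    """Keep at most k documents whose judged relevance exceeds threshold, best first."""
    scored = [(judge(query, d), d) for d in docs]
    kept = [(s, d) for s, d in scored if s > threshold]
    # Stable sort: ties keep the retriever's original order.
    kept.sort(key=lambda p: p[0], reverse=True)
    return [d for _, d in kept[:k]]


def keyword_judge(query: str, doc: str) -> float:
    """Toy stand-in for an LLM judge: fraction of query words found in the doc."""
    q = set(query.lower().split())
    if not q:
        return 0.0
    return len(q & set(doc.lower().split())) / len(q)
```

To use an LLM, swap `keyword_judge` for a function that sends the query and document to the model and parses a numeric score from its reply.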

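```python
# LangChain example: wrap an existing retriever so that every retrieved
# document is compressed by an LLM before it is returned.
from langchain.retrievers import ContextualCompressionRetriever
from langchain.retrievers.document_compressors import LLMChainExtractor
from langchain_openai import OpenAI

# `retriever` is assumed to be an existing base retriever,
# e.g. `vectorstore.as_retriever()` on a vector store built earlier.
llm = OpenAI(temperature=0)  # deterministic output for extraction
compressor = LLMChainExtractor.from_llm(llm)  # keeps only query-relevant passages

compression_retriever = ContextualCompressionRetriever(
    base_compressor=compressor, base_retriever=retriever
)

# Older LangChain versions use get_relevant_documents(...) instead of invoke(...).
compressed_docs = compression_retriever.invoke(
    "What did the president say about Ketanji Brown Jackson"
)
print(compressed_docs)
```

Refine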

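Refine revises the answer the LLM has already generated, using additional retrieved context when that context helps, to improve the answer's accuracy. This is mostly a matter of prompt engineering.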
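When there are several context chunks, refine is often applied iteratively. The query is first answered from the first chunk. The current answer and the next chunk are then fed into the refine prompt, once per remaining chunk. LlamaIndex's `refine` response mode follows this pattern. The sketch below assumes a hypothetical `llm(prompt) -> str` callable. `qa_tmpl` must contain `{context_str}` and `{query_str}`. `refine_tmpl` must contain `{query_str}`, `{existing_answer}` and `{context_msg}`, which are the placeholders used by the refine prompt in this section.

```python
from typing import Callable, List


def refine_answer(
    llm: Callable[[str], str],
    query: str,
    chunks: List[str],
    qa_tmpl: str,
    refine_tmpl: str,
) -> str:
    """Answer from the first chunk, then refine the answer once per remaining chunk."""
    if not chunks:
        raise ValueError("need at least one context chunk")
    # Initial answer from the first chunk only.
    answer = llm(qa_tmpl.format(context_str=chunks[0], query_str=query))
    for chunk in chunks[1:]:
        # The refine prompt tells the model to keep the old answer
        # if the new chunk is not useful.
        prompt = refine_tmpl.format(
            query_str=query, existing_answer=answer, context_msg=chunk
        )
        answer = llm(prompt)
    return answer
```

Each chunk costs one extra LLM call, so this trades latency for answers that can draw on more context than fits in a single prompt.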

A reference prompt:

```text
The original query is as follows: {query_str}
We have provided an existing answer: {existing_answer}
We have the opportunity to refine the existing answer (only if needed) with some more context below.
------------
{context_msg}
------------
Given the new context, refine the original answer to better answer the query. If the context isn't useful, return the original answer.
Refined Answer:
```

Emotion Prompt

This technique is also a form of prompt engineering. The idea comes from a Microsoft paper:

Large Language Models Understand and Can Be Enhanced by Emotional Stimuli

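The researchers report that adding emotional stimuli to a prompt, such as phrases stressing that the task matters to the user, can help an LLM produce higher-quality answers.

Reference prompts, written with LlamaIndex: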

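```python
# LlamaIndex >= 0.10; older releases used `from llama_index.prompts import PromptTemplate`.
from llama_index.core import PromptTemplate

# Emotional stimuli EP01-EP03 from the paper; add more from the paper here.
emotion_stimuli_dict = {
    "ep01": "Write your answer and give me a confidence score between 0-1 for your answer. ",
    "ep02": "This is very important to my career. ",
    "ep03": "You'd better be sure.",
}

# NOTE: ep06 is the combination of ep01, ep02, ep03
emotion_stimuli_dict["ep06"] = (
    emotion_stimuli_dict["ep01"]
    + emotion_stimuli_dict["ep02"]
    + emotion_stimuli_dict["ep03"]
)

# QA template with an extra {emotion_str} slot for the stimulus.
qa_tmpl_str = """\
Context information is below.
---------------------
{context_str}
---------------------
Given the context information and not prior knowledge, \
answer the query.
{emotion_str}
Query: {query_str}
Answer: \
"""

qa_tmpl = PromptTemplate(qa_tmpl_str)

# Fill in the stimulus now; context_str and query_str are filled at query time.
qa_tmpl_ep06 = qa_tmpl.partial_format(emotion_str=emotion_stimuli_dict["ep06"])
```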
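One simple way to check whether a stimulus actually helps on your own data is to compare answers side by side. Build one prompt per stimulus, plus a baseline prompt with no stimulus. This framework-free sketch repeats the stimuli and QA template from this section using plain `str.format`. `build_prompts` and `compare` are hypothetical helper names, and `llm` is any callable that maps a prompt string to an answer string.

```python
from typing import Callable, Dict

# Same stimuli as in the LlamaIndex example (paper's EP01-EP03 and their combination).
EMOTION_STIMULI: Dict[str, str] = {
    "ep01": "Write your answer and give me a confidence score between 0-1 for your answer. ",
    "ep02": "This is very important to my career. ",
    "ep03": "You'd better be sure.",
}
EMOTION_STIMULI["ep06"] = (
    EMOTION_STIMULI["ep01"] + EMOTION_STIMULI["ep02"] + EMOTION_STIMULI["ep03"]
)

# Same text as qa_tmpl_str, with the line continuations already joined.
QA_TMPL = (
    "Context information is below.\n"
    "---------------------\n"
    "{context_str}\n"
    "---------------------\n"
    "Given the context information and not prior knowledge, answer the query.\n"
    "{emotion_str}\n"
    "Query: {query_str}\n"
    "Answer: "
)


def build_prompts(context: str, query: str) -> Dict[str, str]:
    """Return one prompt per stimulus, plus a 'baseline' prompt with no stimulus."""
    variants = {"baseline": ""}
    variants.update(EMOTION_STIMULI)
    return {
        key: QA_TMPL.format(context_str=context, query_str=query, emotion_str=stim)
        for key, stim in variants.items()
    }


def compare(llm: Callable[[str], str], context: str, query: str) -> Dict[str, str]:
    """Run every prompt variant through the given llm callable, keyed by variant."""
    return {key: llm(prompt) for key, prompt in build_prompts(context, query).items()}
```

Which variant works best should be decided by evaluating the returned answers, by hand or with an evaluator, on your own queries.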