计算机真的能理解人脸吗?你是否想过Instagram是如何给你的脸上应用惊人的滤镜的?该软件检测你脸上的关键点并在其上投影一个遮罩。本教程将教你如何使用PyTorch构建一个类似的软件。

数据集

在本教程中,我们将使用官方的DLib数据集,其中包含6666张尺寸不同的图像。此外,labels_ibug_300W_train.xml(随数据集提供)包含每张人脸的68个关键点的坐标。下面的脚本将在Colab笔记本中下载数据集并解压缩。

if not os.path.exists('/content/ibug_300W_large_face_landmark_dataset'):

!wget http://dlib.net/files/data/ibug_300W_large_face_landmark_dataset.tar.gz

!tar -xvzf 'ibug_300W_large_face_landmark_dataset.tar.gz'

!rm -r 'ibug_300W_large_face_landmark_dataset.tar.gz'- 1.

- 2.

- 3.

- 4.

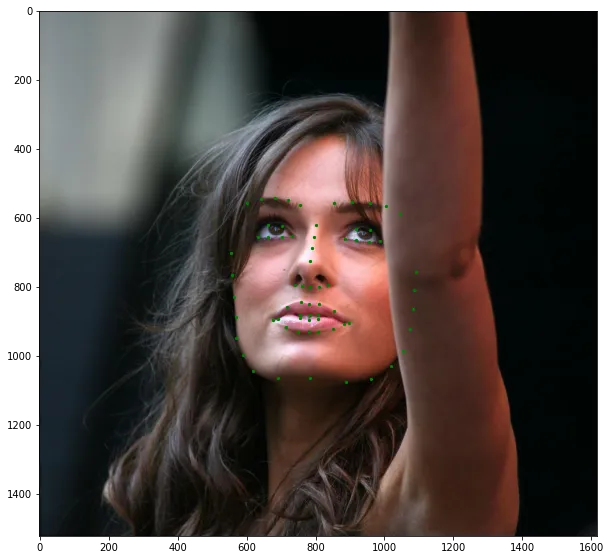

这是数据集中的一张样本图像。我们可以看到,人脸只占整个图像的一小部分。如果我们将完整图像输入神经网络,它也会处理背景(无关信息),这会使模型难以学习。因此,我们需要裁剪图像,仅输入人脸部分。

数据集中的样本图像和关键点

数据预处理

为了防止神经网络过拟合训练数据集,我们需要随机变换数据集。我们将对训练和验证数据集应用以下操作:

- 由于人脸只占整个图像的一小部分,所以裁剪图像并仅使用人脸进行训练。

- 将裁剪后的人脸调整为(224x224)的图像。

- 随机改变调整后的人脸的亮度和饱和度。

- 在上述三个转换之后,随机旋转人脸。

- 将图像和关键点转换为torch张量,并在[-1, 1]之间进行归一化。

class Transforms():

def __init__(self):

pass

def rotate(self, image, landmarks, angle):

angle = random.uniform(-angle, +angle)

transformation_matrix = torch.tensor([

[+cos(radians(angle)), -sin(radians(angle))],

[+sin(radians(angle)), +cos(radians(angle))]

])

image = imutils.rotate(np.array(image), angle)

landmarks = landmarks - 0.5

new_landmarks = np.matmul(landmarks, transformation_matrix)

new_landmarks = new_landmarks + 0.5

return Image.fromarray(image), new_landmarks

def resize(self, image, landmarks, img_size):

image = TF.resize(image, img_size)

return image, landmarks

def color_jitter(self, image, landmarks):

color_jitter = transforms.ColorJitter(brightness=0.3,

contrast=0.3,

saturation=0.3,

hue=0.1)

image = color_jitter(image)

return image, landmarks

def crop_face(self, image, landmarks, crops):

left = int(crops['left'])

top = int(crops['top'])

width = int(crops['width'])

height = int(crops['height'])

image = TF.crop(image, top, left, height, width)

img_shape = np.array(image).shape

landmarks = torch.tensor(landmarks) - torch.tensor([[left, top]])

landmarks = landmarks / torch.tensor([img_shape[1], img_shape[0]])

return image, landmarks

def __call__(self, image, landmarks, crops):

image = Image.fromarray(image)

image, landmarks = self.crop_face(image, landmarks, crops)

image, landmarks = self.resize(image, landmarks, (224, 224))

image, landmarks = self.color_jitter(image, landmarks)

image, landmarks = self.rotate(image, landmarks, angle=10)

image = TF.to_tensor(image)

image = TF.normalize(image, [0.5], [0.5])

return image, landmarks- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

- 39.

- 40.

- 41.

- 42.

- 43.

- 44.

- 45.

- 46.

- 47.

- 48.

- 49.

- 50.

- 51.

- 52.

- 53.

- 54.

数据集类

现在我们已经准备好了转换,让我们编写我们的数据集类。labels_ibug_300W_train.xml包含图像路径、关键点和边界框的坐标(用于裁剪人脸)。我们将这些值存储在列表中,以便在训练期间轻松访问。在本文章中,神经网络将在灰度图像上进行训练。

class FaceLandmarksDataset(Dataset):

def __init__(self, transform=None):

tree = ET.parse('ibug_300W_large_face_landmark_dataset/labels_ibug_300W_train.xml')

root = tree.getroot()

self.image_filenames = []

self.landmarks = []

self.crops = []

self.transform = transform

self.root_dir = 'ibug_300W_large_face_landmark_dataset'

for filename in root[2]:

self.image_filenames.append(os.path.join(self.root_dir, filename.attrib['file']))

self.crops.append(filename[0].attrib)

landmark = []

for num in range(68):

x_coordinate = int(filename[0][num].attrib['x'])

y_coordinate = int(filename[0][num].attrib['y'])

landmark.append([x_coordinate, y_coordinate])

self.landmarks.append(landmark)

self.landmarks = np.array(self.landmarks).astype('float32')

assert len(self.image_filenames) == len(self.landmarks)

def __len__(self):

return len(self.image_filenames)

def __getitem__(self, index):

image = cv2.imread(self.image_filenames[index], 0)

landmarks = self.landmarks[index]

if self.transform:

image, landmarks = self.transform(image, landmarks, self.crops[index])

landmarks = landmarks - 0.5

return image, landmarks

dataset = FaceLandmarksDataset(Transforms())- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

- 39.

- 40.

- 41.

- 42.

- 43.

- 44.

- 45.

注意:landmarks = landmarks - 0.5 是为了将关键点居中,因为中心化的输出对神经网络学习更容易。经过预处理后的数据集输出如下所示(关键点已经绘制在图像中):

预处理后的数据样本

神经网络

我们将使用ResNet18作为基本框架。我们需要修改第一层和最后一层以适应我们的目的。在第一层中,我们将输入通道数设为1,以便神经网络接受灰度图像。同样,在最后一层中,输出通道数应为68 * 2 = 136,以便模型预测每张人脸的68个关键点的(x,y)坐标。

class Network(nn.Module):

def __init__(self,num_classes=136):

super().__init__()

self.model_name='resnet18'

self.model=models.resnet18()

self.model.conv1=nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3, bias=False)

self.model.fc=nn.Linear(self.model.fc.in_features, num_classes)

def forward(self, x):

x=self.model(x)

return x- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

训练神经网络

我们将使用预测关键点和真实关键点之间的均方误差作为损失函数。请记住,要避免梯度爆炸,学习率应保持低。每当验证损失达到新的最小值时,网络权重将被保存。至少训练20个epochs以获得最佳性能。

network = Network()

network.cuda()

criterion = nn.MSELoss()

optimizer = optim.Adam(network.parameters(), lr=0.0001)

loss_min = np.inf

num_epochs = 10

start_time = time.time()

for epoch in range(1,num_epochs+1):

loss_train = 0

loss_valid = 0

running_loss = 0

network.train()

for step in range(1,len(train_loader)+1):

images, landmarks = next(iter(train_loader))

images = images.cuda()

landmarks = landmarks.view(landmarks.size(0),-1).cuda()

predictions = network(images)

# clear all the gradients before calculating them

optimizer.zero_grad()

# find the loss for the current step

loss_train_step = criterion(predictions, landmarks)

# calculate the gradients

loss_train_step.backward()

# update the parameters

optimizer.step()

loss_train += loss_train_step.item()

running_loss = loss_train/step

print_overwrite(step, len(train_loader), running_loss, 'train')

network.eval()

with torch.no_grad():

for step in range(1,len(valid_loader)+1):

images, landmarks = next(iter(valid_loader))

images = images.cuda()

landmarks = landmarks.view(landmarks.size(0),-1).cuda()

predictions = network(images)

# find the loss for the current step

loss_valid_step = criterion(predictions, landmarks)

loss_valid += loss_valid_step.item()

running_loss = loss_valid/step

print_overwrite(step, len(valid_loader), running_loss, 'valid')

loss_train /= len(train_loader)

loss_valid /= len(valid_loader)

print('\n--------------------------------------------------')

print('Epoch: {} Train Loss: {:.4f} Valid Loss: {:.4f}'.format(epoch, loss_train, loss_valid))

print('--------------------------------------------------')

if loss_valid < loss_min:

loss_min = loss_valid

torch.save(network.state_dict(), '/content/face_landmarks.pth')

print("\nMinimum Validation Loss of {:.4f} at epoch {}/{}".format(loss_min, epoch, num_epochs))

print('Model Saved\n')

print('Training Complete')

print("Total Elapsed Time : {} s".format(time.time()-start_time))- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

- 39.

- 40.

- 41.

- 42.

- 43.

- 44.

- 45.

- 46.

- 47.

- 48.

- 49.

- 50.

- 51.

- 52.

- 53.

- 54.

- 55.

- 56.

- 57.

- 58.

- 59.

- 60.

- 61.

- 62.

- 63.

- 64.

- 65.

- 66.

- 67.

- 68.

- 69.

- 70.

- 71.

- 72.

- 73.

- 74.

- 75.

- 76.

- 77.

- 78.

- 79.

在未知数据上进行预测

使用以下代码段在未知图像中预测关键点。

import time

import cv2

import os

import numpy as np

import matplotlib.pyplot as plt

from PIL import Image

import imutils

import torch

import torch.nn as nn

from torchvision import models

import torchvision.transforms.functional as TF

#######################################################################

image_path = 'pic.jpg'

weights_path = 'face_landmarks.pth'

frontal_face_cascade_path = 'haarcascade_frontalface_default.xml'

#######################################################################

class Network(nn.Module):

def __init__(self,num_classes=136):

super().__init__()

self.model_name='resnet18'

self.model=models.resnet18(pretrained=False)

self.model.conv1=nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3, bias=False)

self.model.fc=nn.Linear(self.model.fc.in_features,num_classes)

def forward(self, x):

x=self.model(x)

return x

#######################################################################

face_cascade = cv2.CascadeClassifier(frontal_face_cascade_path)

best_network = Network()

best_network.load_state_dict(torch.load(weights_path, map_location=torch.device('cpu')))

best_network.eval()

image = cv2.imread(image_path)

grayscale_image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

display_image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

height, width,_ = image.shape

faces = face_cascade.detectMultiScale(grayscale_image, 1.1, 4)

all_landmarks = []

for (x, y, w, h) in faces:

image = grayscale_image[y:y+h, x:x+w]

image = TF.resize(Image.fromarray(image), size=(224, 224))

image = TF.to_tensor(image)

image = TF.normalize(image, [0.5], [0.5])

with torch.no_grad():

landmarks = best_network(image.unsqueeze(0))

landmarks = (landmarks.view(68,2).detach().numpy() + 0.5) * np.array([[w, h]]) + np.array([[x, y]])

all_landmarks.append(landmarks)

plt.figure()

plt.imshow(display_image)

for landmarks in all_landmarks:

plt.scatter(landmarks[:,0], landmarks[:,1], c = 'c', s = 5)

plt.show()- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

- 39.

- 40.

- 41.

- 42.

- 43.

- 44.

- 45.

- 46.

- 47.

- 48.

- 49.

- 50.

- 51.

- 52.

- 53.

- 54.

- 55.

- 56.

- 57.

- 58.

- 59.

- 60.

- 61.

- 62.

- 63.

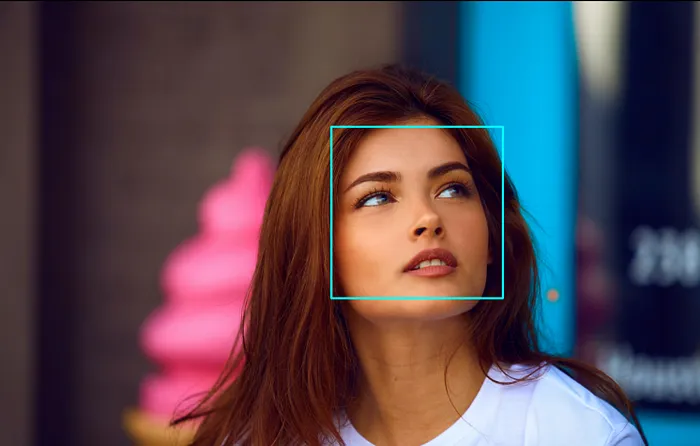

OpenCV Haar级联分类器用于检测图像中的人脸。使用Haar级联进行对象检测是一种基于机器学习的方法,其中使用一组输入数据对级联函数进行训练。OpenCV已经包含了许多预训练的分类器,用于人脸、眼睛、行人等等。在我们的案例中,我们将使用人脸分类器,你需要下载预训练的分类器XML文件并将其保存到你的工作目录中。

人脸检测

在输入图像中检测到的人脸将被裁剪、调整大小为(224,224)并输入到我们训练好的神经网络中以预测其中的关键点。

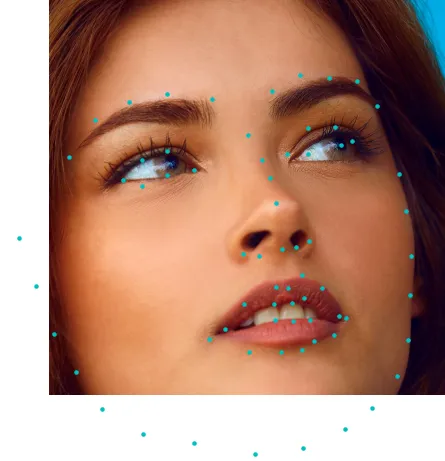

裁剪人脸上的关键点

在裁剪的人脸上叠加预测的关键点。结果如下图所示。相当令人印象深刻,不是吗?

最终结果

同样,在多个人脸上进行关键点检测:

在这里,你可以看到OpenCV Haar级联分类器已经检测到了多个人脸,包括一个误报(一个拳头被预测为人脸)。