DALL-E 2 shows how an artificial intelligence system can observe and comprehend our world. Developing systems like this is crucial to creating useful and safe artificial intelligence.

Technological advances have shown the great promise of artificial intelligence. AI's versatility is often astonishing, from AlphaGo, the first program to beat a human world champion at Go, to AlphaCode, an AI that can write computer programs on its own. Yet AI has also stirred controversy, such as the privacy issues raised by face recognition and the creation of fake news.

The new DALL-E 2 system from OpenAI has once again raised concerns. From a text description, the system can automatically generate images that look realistic and sometimes have considerable expressive power. Behind this capability, however, concerns about bias and fakery have come to light once more. Technology is neutral, but human nature may not be.

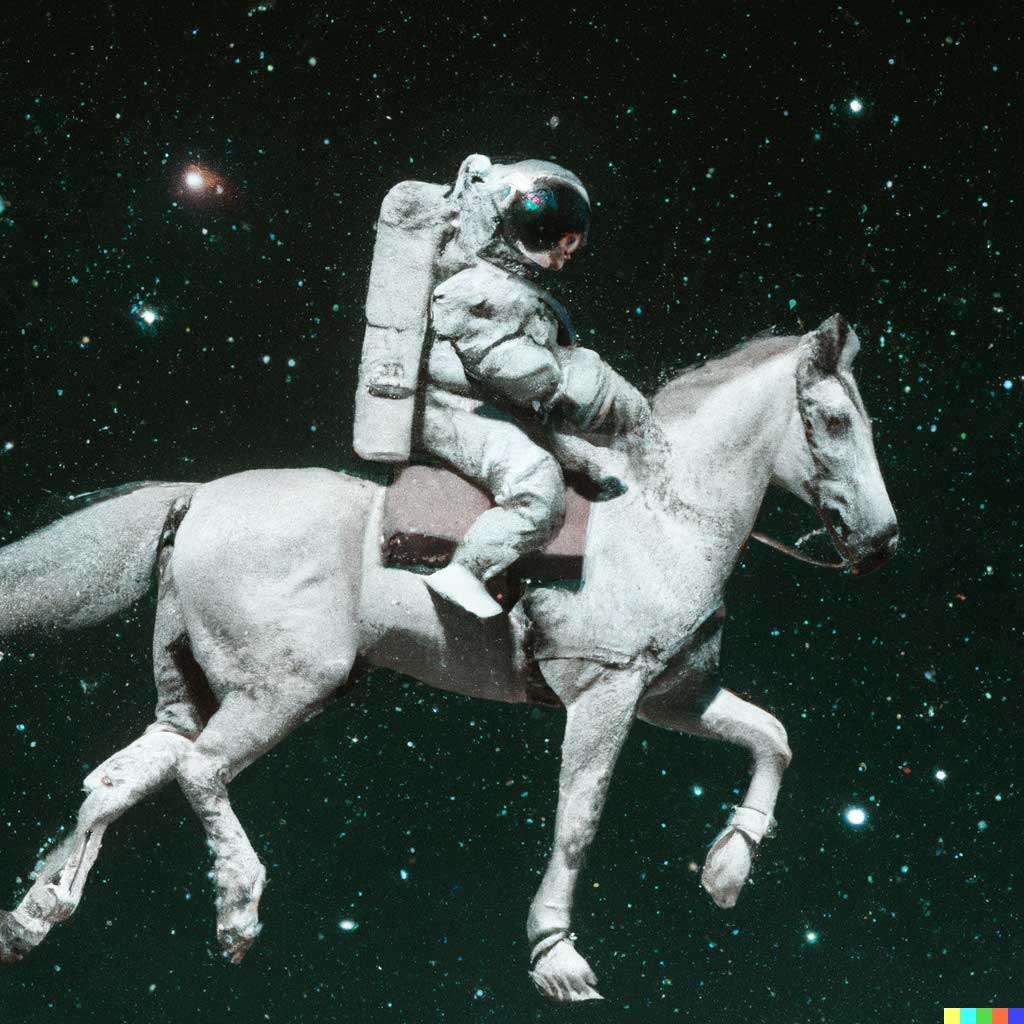

Have you ever seen a teddy bear skateboarding in Times Square or an astronaut riding a horse in space?

DALL-E 2 lets you "see" images like these, which do not exist in reality.

DALL-E 2 is the latest version of DALL-E, OpenAI's text-to-image tool, and it has recently burst into the spotlight.

DALL-E 2: Unprecedented image quality and new editing features

In January 2021, OpenAI created DALL-E, a system based on the GPT-3 language model and the CLIP image recognition system. Its name combines those of the surrealist painter Salvador Dalí and WALL-E, the robot protagonist of the Pixar animated film.

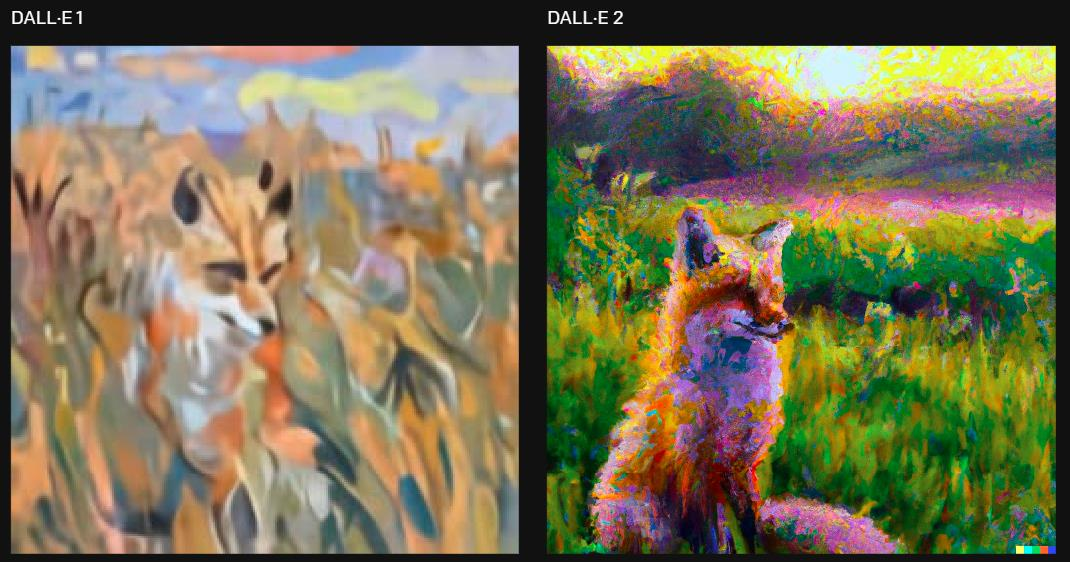

Compared with the first generation of DALL-E, DALL-E 2 is more accurate and more realistic: in OpenAI's human evaluations, raters preferred DALL-E 2 over DALL-E 1 71.7% of the time for matching the caption and 88.8% of the time for photorealism. Resolution has also improved. DALL-E 1 produced images of only 256 x 256 pixels, while DALL-E 2 produces images of up to 1024 x 1024 pixels, and with lower latency.
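To put the resolution figures in perspective, the short calculation below compares the two image sizes quoted above. It is plain arithmetic on the 256 x 256 and 1024 x 1024 figures and assumes nothing about the models themselves.

```python
# Image sizes quoted in the text, as (width, height) in pixels.
dalle1_size = (256, 256)
dalle2_size = (1024, 1024)

# Scale factor along each side of the image.
side_ratio = dalle2_size[0] / dalle1_size[0]

# Ratio of total pixel counts (width * height).
pixel_ratio = (dalle2_size[0] * dalle2_size[1]) / (dalle1_size[0] * dalle1_size[1])

print(f"{side_ratio:.0f}x per side, {pixel_ratio:.0f}x as many pixels")
```

In other words, each side is 4 times longer, so a DALL-E 2 image holds 16 times as many pixels (1,048,576 versus 65,536).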

Given the same text description, "a painting of a fox sitting in a field at sunrise in the style of Claude Monet", the two generations of the system produce remarkably different images. The DALL-E 1 image (left) looks like a crude cartoon, while the DALL-E 2 image (right) is much sharper and has the quality of a real oil painting.

(Image source: OpenAI official website)

Aside from this, DALL-E 2 includes two new features: "inpainting" and "variations," both of which are useful for editing and touching up images.

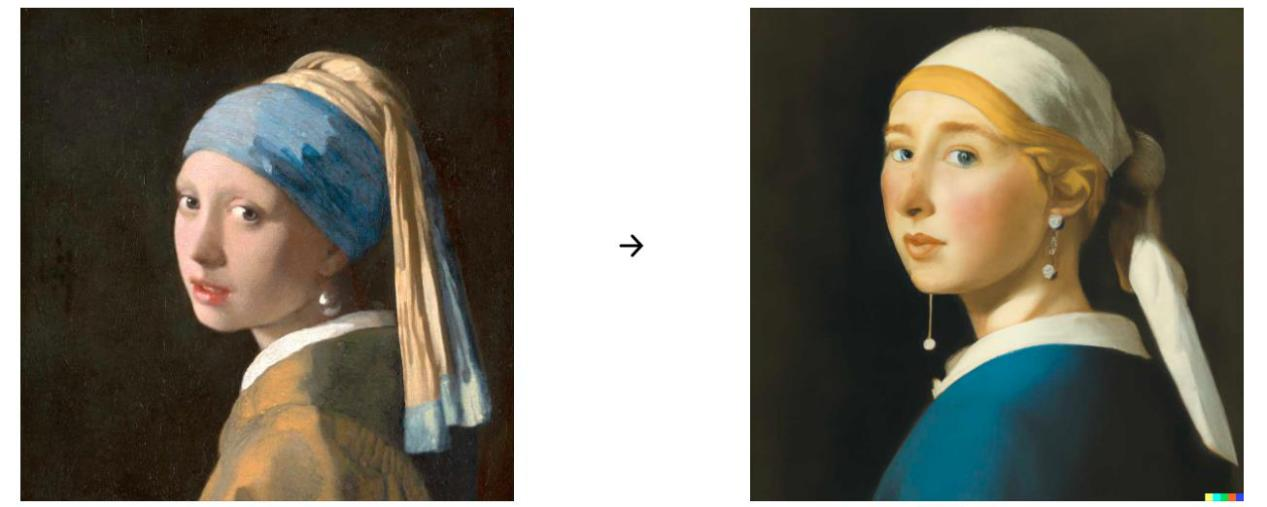

(Image source: OpenAI official website)

Inpainting edits part of an existing image. DALL-E 2 can seamlessly blend AI-generated content into an existing picture, for example replacing a pillow on a couch with a puppy or putting a toy duck in a sink.
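As a rough illustration of how a mask confines an inpainting edit to one region, the sketch below keeps the original pixels outside the mask and takes generated pixels inside it. It is a simplification under stated assumptions: images are tiny grayscale grids of numbers, the "generated" content is supplied by hand, and `composite_inpaint` is a hypothetical helper. DALL-E 2's actual inpainting has the model generate the new content in the context of the surrounding pixels so it matches them; this code shows only the masking idea.

```python
from typing import List

Image = List[List[float]]  # toy grayscale image: rows of pixel intensities
Mask = List[List[int]]     # 1 = region to replace, 0 = keep original pixel


def composite_inpaint(original: Image, generated: Image, mask: Mask) -> Image:
    """Hypothetical helper: return a new image that keeps the original
    pixels where mask == 0 and uses the generated pixels where mask == 1."""
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("images and mask must have the same height")
    result: Image = []
    for orig_row, gen_row, mask_row in zip(original, generated, mask):
        if not (len(orig_row) == len(gen_row) == len(mask_row)):
            raise ValueError("images and mask must have the same width")
        result.append([g if m else o for o, g, m in zip(orig_row, gen_row, mask_row)])
    return result


# Usage: replace only the centre pixel of a 3 x 3 image.
couch = [[0.2, 0.2, 0.2],
         [0.2, 0.9, 0.2],   # 0.9 stands in for the "pillow"
         [0.2, 0.2, 0.2]]
puppy = [[0.5] * 3 for _ in range(3)]  # stand-in for model-generated content
mask = [[0, 0, 0],
        [0, 1, 0],
        [0, 0, 0]]

print(composite_inpaint(couch, puppy, mask))
```

The original image is left unmodified; only the masked pixel changes in the returned copy.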

Variations take an existing image as a starting point and create new versions of it with different angles and styles. Users can upload an image and generate variations of it, or combine two images into a new one that blends elements of both.
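OpenAI's unCLIP research paper describes blending two images by interpolating between their CLIP image embeddings and decoding the points in between. The sketch below implements only that interpolation step, spherical linear interpolation (slerp), on made-up 3-dimensional vectors; real CLIP embeddings have hundreds of dimensions, and the decoder that would turn each blended embedding into a picture is not shown.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def slerp(a: Vector, b: Vector, t: float) -> Vector:
    """Spherical linear interpolation between the directions of a and b.
    t = 0 gives unit(a), t = 1 gives unit(b); results stay unit length."""
    a, b = normalize(a), normalize(b)
    dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    omega = math.acos(dot)  # angle between the two vectors
    if omega < 1e-6:
        # Nearly identical directions: plain linear interpolation suffices.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    if math.pi - omega < 1e-6:
        raise ValueError("slerp is undefined for opposite directions")
    sin_omega = math.sin(omega)
    weight_a = math.sin((1 - t) * omega) / sin_omega
    weight_b = math.sin(t * omega) / sin_omega
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]


# Made-up stand-ins for the CLIP image embeddings of two uploaded images.
image_a = [1.0, 0.0, 0.0]
image_b = [0.0, 1.0, 0.0]

for t in (0.0, 0.5, 1.0):
    print(t, [round(x, 3) for x in slerp(image_a, image_b, t)])
```

Slerp is used rather than a straight average because it keeps every intermediate embedding at unit length, on the same sphere where normalized CLIP embeddings live.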

(Image source: OpenAI official website)

Breakthroughs and challenges: how DALL-E 2 generates high-quality fakes

DALL-E 2 builds on a computer vision model called CLIP, which was trained on hundreds of millions of images and their accompanying text to judge how well a given text snippet matches an image.
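CLIP judges how well a caption matches an image by mapping both into vectors in a shared embedding space and comparing them, typically with cosine similarity. The sketch below shows only that scoring step. The embedding values are invented for illustration (real CLIP computes them with trained text and image encoders), and the helper names `cosine_similarity` and `best_caption` are hypothetical.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_embedding: Vector, caption_embeddings: Dict[str, Vector]) -> str:
    """Return the caption whose embedding is most similar to the image's."""
    return max(caption_embeddings,
               key=lambda c: cosine_similarity(image_embedding, caption_embeddings[c]))


# Invented 3-D embeddings; real CLIP embeddings are much larger.
photo_of_fox = [0.9, 0.1, 0.2]
captions = {
    "a fox in a field": [0.8, 0.2, 0.1],
    "a city at night": [0.1, 0.9, 0.3],
    "a bowl of soup": [0.2, 0.1, 0.9],
}

for caption, emb in captions.items():
    print(f"{caption!r}: {cosine_similarity(photo_of_fox, emb):.3f}")
print("best match:", best_caption(photo_of_fox, captions))
```

Because cosine similarity ignores vector length, only the direction of each embedding affects the score.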

Notably, OpenAI built "unCLIP," a model that generates images from text descriptions by essentially inverting CLIP, and this model is what powers DALL-E 2. According to OpenAI, unCLIP is more robust to one of CLIP's known weaknesses: CLIP can be fooled into misidentifying what it sees when an image carries a written label naming something else.

As an analogy, suppose a system had been shown a picture of an airplane labeled "car." Later, when asked for a picture of a "car," it might well produce an airplane. It is like talking to someone who has learned the wrong word. In this respect, unCLIP performs much better than CLIP.
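In OpenAI's unCLIP paper, generation is split into two stages: a "prior" that turns the CLIP embedding of a caption into a CLIP image embedding, and a "decoder" that turns that image embedding into a picture. The sketch below shows only how the stages connect. The `UnCLIPPipeline` class and the three stand-in functions (`toy_encode_text`, `toy_prior`, `toy_decoder`) are hypothetical placeholders, not real models or an OpenAI API. Decoding the same embedding with different random seeds mirrors how several variations of one image can be produced.

```python
from dataclasses import dataclass
from typing import Callable, List

Vector = List[float]


@dataclass
class UnCLIPPipeline:
    """Hypothetical sketch of unCLIP's two-stage structure. Each stage is
    passed in as a function; no trained model is included here."""
    encode_text: Callable[[str], Vector]   # CLIP text encoder
    prior: Callable[[Vector], Vector]      # text embedding -> image embedding
    decoder: Callable[[Vector, int], str]  # image embedding + seed -> image

    def generate(self, caption: str, seed: int = 0) -> str:
        text_emb = self.encode_text(caption)
        image_emb = self.prior(text_emb)
        return self.decoder(image_emb, seed)


def toy_encode_text(caption: str) -> Vector:
    # Stand-in: a 2-D "embedding" built from simple text statistics.
    return [float(len(caption)), float(caption.count(" "))]


def toy_prior(text_emb: Vector) -> Vector:
    # Stand-in: a fixed linear map. The real prior is a learned model.
    return [0.5 * x for x in text_emb]


def toy_decoder(image_emb: Vector, seed: int) -> str:
    # Stand-in: describes what a real decoder would render as pixels.
    return f"image(embedding={image_emb}, seed={seed})"


pipeline = UnCLIPPipeline(toy_encode_text, toy_prior, toy_decoder)
print(pipeline.generate("a red car"))
print(pipeline.generate("a red car", seed=1))  # same embedding, another sample
```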

Moreover, the new system uses diffusion models, which start with a pattern of random dots and gradually refine it into a picture as they recognize specific aspects of the described image. Diffusion models can produce high-quality synthetic images, especially when combined with guidance techniques, which trade off diversity for fidelity.
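A widely used way to trade diversity for fidelity in diffusion models is classifier-free guidance, which OpenAI's unCLIP paper also employs. At each denoising step the model predicts the noise twice, once with the text condition and once without, and the final prediction is pushed from the unconditional one toward the conditional one by a guidance scale. The sketch below shows only that combination rule on plain lists of numbers; the two predictions are made-up values standing in for a real denoising network's output, and `guided_noise` is a hypothetical name.

```python
from typing import List

Vector = List[float]


def guided_noise(eps_uncond: Vector, eps_cond: Vector, scale: float) -> Vector:
    """Classifier-free guidance: start from the unconditional noise prediction
    and move `scale` times the distance toward the text-conditioned one.
    scale = 0 ignores the prompt, scale = 1 reproduces the conditional
    prediction, and scale > 1 exaggerates the prompt's influence."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Made-up noise predictions for a 3-pixel "image" at one denoising step.
eps_uncond = [0.10, -0.20, 0.05]
eps_cond = [0.30, -0.10, 0.05]

for scale in (0.0, 1.0, 3.0):
    print(scale, [round(x, 2) for x in guided_noise(eps_uncond, eps_cond, scale)])
```

Higher scales make samples follow the prompt more closely but reduce their variety, which is the diversity-for-fidelity trade-off.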

Although text-to-image research has advanced, it has always been limited by gaps in what models have learned, and DALL-E 2 is likewise limited by blind spots in its training.

For example, if you type "monkey" into DALL-E 2, it will generate plenty of monkey images, because it has learned what images with that label look like. But if "howler monkey" was never seen in training, DALL-E 2 won't recognize it as the name of a specific species. Instead, it will present its best guess: a picture of a monkey howling. For now, DALL-E 2 has both this kind of potential and these kinds of limitations.

Technology is neither good nor evil; human nature may not stand the test

Interestingly, OpenAI has never fully released the DALL-E model. For now, users can preview the tool only after creating an account on its website. The researchers say they want to continue this phased rollout so that the technology can be released safely.

Stunning as the technology behind DALL-E 2 appears, many have already noticed a hidden danger. Beyond the bias the algorithm has been criticized for from the beginning, the very "high quality" of its images can be frightening. It can be used for good, but it can also be used for crazier things, such as deepfaked photos and videos.

Just as GPT-3 was allegedly used to fabricate fake news, tools like DALL-E 2 could be abused. If anyone can easily create eye-popping fake photos, no one can feel safe, and almost everything we see online will have to be taken with a grain of salt.

The technology has also alarmed some members of the public. Some worry that if it were released publicly, people would be wise to switch off their TV and internet altogether and ignore anyone who tried to tell them what they were seeing on the web. If holograms become the norm, they warn, we will be creating hell for future generations.

OpenAI has anticipated possible problems such as image bias and misinformation, and says it will continue to address them as it builds DALL-E.

Some of these measures include:

Images generated by DALL-E 2 will have a watermark to indicate they are AI-generated.

DALL-E 2 is trained on data from which toxic content has been filtered out, which reduces its potential to produce harmful content.

An anti-abuse feature prevents DALL-E 2 from generating realistic faces of real, recognizable people.

The tool forbids users from creating or uploading hate symbols, nudity, obscene gestures, or conspiracy content about major current geopolitical events.

Users must disclose the role the AI played in generating the images, and they may not share the generated images through websites or apps.

It remains to be seen how effective these measures will be, but for now DALL-E 2 is at least kept locked away in a "black box." It is also worth remembering that DALL-E 2 lets us express ourselves in ways that were previously nearly impossible: it can realistically depict polar bears playing guitar, a Dalí-style sky garden, or the Mona Lisa with a mohawk. Furthermore, DALL-E 2 shows people how an AI system can observe and understand our world, which is vital for developing practical and safe AI algorithms.