Background

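In an earlier post I introduced VictoriaMetrics and some points to watch out for when installing it. Today I'll walk through an actual installation on k8s: the cluster version of VictoriaMetrics, installed on a cloud-hosted k8s cluster and exposed through the cloud provider's load balancer.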

Note: VictoriaMetrics is abbreviated as vm below.

Prerequisites

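- A k8s cluster; mine runs v1.20.6
- A StorageClass prepared on the cluster; I use NFS for it
- Images: operator tag v0.17.2, and vmstorage, vmselect and vminsert tag v1.63.0. You can pull them in advance and push them to a local image registry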

Things to know before installing

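vm can be installed in several ways: from binaries, from Docker images, or from source; choose whichever fits your scenario. On k8s, the operator can do the installation directly. The main points to watch for during installation are listed below.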

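A minimal cluster must contain the following nodes:

- one vmstorage node, with -retentionPeriod and -storageDataPath set
- one vminsert node, with -storageNode set to the vmstorage address
- one vmselect node, with -storageNode set to the vmstorage address

Note: for high availability, run at least two nodes of each service.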
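To make the -storageNode wiring concrete, here is a small shell sketch. The helper name `storage_node_flag` is hypothetical (not part of VictoriaMetrics), and it assumes vmstorage's default ports: 8400 (-vminsertAddr) for vminsert connections and 8401 (-vmselectAddr) for vmselect connections, the same ports that show up on the vmstorage Service later in this post.

```shell
# Hypothetical helper (not part of VictoriaMetrics): build the -storageNode value
# for vminsert or vmselect from a vmstorage port and a list of vmstorage hosts.
# Assumed vmstorage defaults: 8400 (-vminsertAddr) for vminsert, 8401 (-vmselectAddr) for vmselect.
storage_node_flag() {
  port="$1"
  shift
  nodes=""
  for host in "$@"; do
    # join host:port pairs with commas
    nodes="${nodes:+$nodes,}$host:$port"
  done
  printf '%s\n' "-storageNode=$nodes"
}

# Minimal single-host cluster (binary names and paths are placeholders):
#   vmstorage -retentionPeriod=1 -storageDataPath=/var/lib/vmstorage
#   vminsert  "$(storage_node_flag 8400 127.0.0.1)"
#   vmselect  "$(storage_node_flag 8401 127.0.0.1)"
```

With more vmstorage nodes, the same helper yields a comma-separated list such as `-storageNode=vmstorage-0:8401,vmstorage-1:8401`.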

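A load balancer such as vmauth or nginx must sit in front of vmselect and vminsert; here I use the cloud provider's load balancer. It must route:

- requests starting with /insert to port 8480 on the vminsert nodes
- requests starting with /select to port 8481 on the vmselect nodes

Note: each service's port can be changed with -httpListenAddr.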
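The routing rule boils down to a path-prefix match. The function below (`route_request`, hypothetical, not part of vmauth or nginx) only mirrors the decision the load balancer has to make, assuming the default ports listed above.

```shell
# Hypothetical helper mirroring the load-balancer rule:
# /insert/... -> vminsert:8480, /select/... -> vmselect:8481, anything else is rejected.
route_request() {
  case "$1" in
    /insert/*) echo "vminsert:8480" ;;
    /select/*) echo "vmselect:8481" ;;
    *) echo "no route for $1" >&2; return 1 ;;
  esac
}
```

For example, `route_request /insert/0/prometheus` prints `vminsert:8480`.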

Setting up monitoring for the cluster is recommended.

If you install a test cluster on a single host, the -httpListenAddr of vminsert, vmselect and vmstorage must each be unique, and every vmstorage node must have unique values for -storageDataPath, -vminsertAddr and -vmselectAddr.
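A quick pre-flight check for such a single-host setup could look like the sketch below. `find_duplicate_values` is a hypothetical helper; it compares values across all flags, so it also catches two different flags bound to the same port.

```shell
# Hypothetical pre-flight check: read one "-flag=value" pair per line and
# print every value that appears more than once (e.g. a port used twice).
find_duplicate_values() {
  # keep everything after the first '=', then report repeated values
  cut -d= -f2- | sort | uniq -d
}

# Example: two vmstorage nodes on one host that accidentally share -vminsertAddr
# printf '%s\n' -vminsertAddr=:8400 -vminsertAddr=:8400 -vmselectAddr=:8401 | find_duplicate_values
```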

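vmstorage switches to read-only mode when the free disk space under -storageDataPath drops below the value set by -storage.minFreeDiskSpaceBytes; vminsert then stops sending data to such nodes and sends it to the remaining available vmstorage nodes instead.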
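The read-only rule can be simulated with the sketch below. `writable_nodes` is hypothetical and only models the decision described above; the free-space figures in the example are made up, and the threshold is passed in explicitly rather than assuming vmstorage's default.

```shell
# Hypothetical simulation: given the minimum free space (bytes) and a list of
# "node:free_bytes" pairs, print the nodes vminsert would still send data to.
# A node whose free space is below the minimum is treated as read-only and skipped.
writable_nodes() {
  min_free="$1"
  shift
  for node in "$@"; do
    name="${node%%:*}"   # part before the first ':'
    free="${node#*:}"    # part after the first ':'
    if [ "$free" -ge "$min_free" ]; then
      echo "$name"
    fi
  done
}
```

For example, `writable_nodes 10000000 vmstorage-0:5000000 vmstorage-1:20000000` prints only `vmstorage-1`.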

Installation

Installing vm

1. Create the CRDs

```shell
# Download the install files
# (this resolves the latest operator release; this post uses operator v0.17.2)
export VM_VERSION=`basename $(curl -fs -o/dev/null -w %{redirect_url} https://github.com/VictoriaMetrics/operator/releases/latest)`
wget https://github.com/VictoriaMetrics/operator/releases/download/$VM_VERSION/bundle_crd.zip
unzip bundle_crd.zip
kubectl apply -f release/crds
```
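The `export` line asks curl for the redirect target of the `releases/latest` page, without downloading it, and keeps the last path segment, i.e. the release tag. The offline sketch below (hypothetical helper names) reproduces only that string handling. Because it resolves the latest operator release while this post uses v0.17.2, pinning `VM_VERSION=v0.17.2` may be preferable, assuming that release ships `bundle_crd.zip`.

```shell
# GitHub redirects .../releases/latest to .../releases/tag/<tag>;
# basename keeps the last path segment, i.e. the tag.
tag_from_redirect() {
  basename "$1"
}

# Build the bundle_crd.zip download URL for a given tag (same pattern as the wget line).
bundle_crd_url() {
  echo "https://github.com/VictoriaMetrics/operator/releases/download/$1/bundle_crd.zip"
}

# Pinning instead of following "latest":
# export VM_VERSION=v0.17.2
```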

```shell
# Check the CRDs
[root@test opt]# kubectl get crd |grep vm
vmagents.operator.victoriametrics.com                2022-01-05T07:26:01Z
vmalertmanagerconfigs.operator.victoriametrics.com   2022-01-05T07:26:01Z
vmalertmanagers.operator.victoriametrics.com         2022-01-05T07:26:01Z
vmalerts.operator.victoriametrics.com                2022-01-05T07:26:01Z
vmauths.operator.victoriametrics.com                 2022-01-05T07:26:01Z
vmclusters.operator.victoriametrics.com              2022-01-05T07:26:01Z
vmnodescrapes.operator.victoriametrics.com           2022-01-05T07:26:01Z
vmpodscrapes.operator.victoriametrics.com            2022-01-05T07:26:01Z
vmprobes.operator.victoriametrics.com                2022-01-05T07:26:01Z
vmrules.operator.victoriametrics.com                 2022-01-05T07:26:01Z
vmservicescrapes.operator.victoriametrics.com        2022-01-05T07:26:01Z
vmsingles.operator.victoriametrics.com               2022-01-05T07:26:01Z
vmstaticscrapes.operator.victoriametrics.com         2022-01-05T07:26:01Z
vmusers.operator.victoriametrics.com                 2022-01-05T07:26:01Z
```

2. Install the operator

```shell
# Install the operator. Remember to change the operator image address beforehand
kubectl apply -f release/operator/
# Check that the operator is running
[root@test opt]# kubectl get po -n monitoring-system
vm-operator-76dd8f7b84-gsbfs   1/1   Running   0   25h
```

3. Install the VMCluster

Once the operator is installed, build the custom resources (CRs) you need. Here I install a VMCluster; first, its manifest:

```yaml
# cat vmcluster-install.yaml
apiVersion: operator.victoriametrics.com/v1beta1
kind: VMCluster
metadata:
  # the pod/Service names and app.kubernetes.io/instance labels used later
  # in this post (vmcluster-main) are derived from this name
  name: vmcluster-main
  namespace: monitoring-system
spec:
  replicationFactor: 1
  # without a unit suffix, the retention period is counted in months
  retentionPeriod: "4"
  vminsert:
    image:
      pullPolicy: IfNotPresent
      repository: images.huazai.com/release/vminsert
      tag: v1.63.0
    podMetadata:
      labels:
        victoriaMetrics: vminsert
    replicaCount: 1
    resources:
      limits:
        cpu: "1"
        memory: 1000Mi
      requests:
        cpu: 500m
        memory: 500Mi
  vmselect:
    cacheMountPath: /select-cache
    image:
      pullPolicy: IfNotPresent
      repository: images.huazai.com/release/vmselect
      tag: v1.63.0
    podMetadata:
      labels:
        victoriaMetrics: vmselect
    replicaCount: 1
    resources:
      limits:
        cpu: "1"
        memory: 1000Mi
      requests:
        cpu: 500m
        memory: 500Mi
    storage:
      volumeClaimTemplate:
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 2G
          storageClassName: nfs-csi
          volumeMode: Filesystem
  vmstorage:
    image:
      pullPolicy: IfNotPresent
      repository: images.huazai.com/release/vmstorage
      tag: v1.63.0
    podMetadata:
      labels:
        victoriaMetrics: vmstorage
    replicaCount: 1
    resources:
      limits:
        cpu: "1"
        memory: 1500Mi
      requests:
        cpu: 500m
        memory: 750Mi
    storage:
      volumeClaimTemplate:
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 20G
          storageClassName: nfs-csi
          volumeMode: Filesystem
    storageDataPath: /vm-data
```

```shell
# install vmcluster
kubectl apply -f vmcluster-install.yaml
# Check the vmcluster install result
[root@test opt]# kubectl get po -n monitoring-system
NAME                                      READY   STATUS    RESTARTS   AGE
vm-operator-76dd8f7b84-gsbfs              1/1     Running   0          26h
vminsert-vmcluster-main-69766c8f4-r795w   1/1     Running   0          25h
vmselect-vmcluster-main-0                 1/1     Running   0          25h
vmstorage-vmcluster-main-0                1/1     Running   0          25h
```
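Judging from the output above, the operator appears to name each component's workload and Service `<component>-<VMCluster name>`. The hypothetical helper below lists the names to expect for a given CR name, which is a quick way to check that the `app.kubernetes.io/instance` selectors used in the next step line up with the CR.

```shell
# Hypothetical helper: names the operator is expected to give each component,
# assuming the "<component>-<VMCluster name>" pattern seen in the output above.
expected_names() {
  cr_name="$1"
  for component in vminsert vmselect vmstorage; do
    echo "$component-$cr_name"
  done
}
```

For example, `expected_names vmcluster-main` prints `vminsert-vmcluster-main`, `vmselect-vmcluster-main` and `vmstorage-vmcluster-main`, one per line.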

4. Create Services for vminsert and vmselect

```shell
# List the Services created by the operator
[root@test opt]# kubectl get svc -n monitoring-system
NAME                       TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)                      AGE
vminsert-vmcluster-main    ClusterIP   10.0.182.73   <none>        8480/TCP                     25h
vmselect-vmcluster-main    ClusterIP   None          <none>        8481/TCP                     25h
vmstorage-vmcluster-main   ClusterIP   None          <none>        8482/TCP,8400/TCP,8401/TCP   25h
```

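To make it easy for different k8s clusters to store their data in this vm, and to query it later, I create two additional Services of type NodePort: vminsert-vmcluster-main-lbsvc and vmselect-vmcluster-main-lbsvc. The cloud LB listens on ports 8480 and 8481; its backend servers are the node IPs of the cluster vm runs in, and the backend ports are the NodePorts exposed by these two Services.

Clients in the same k8s cluster as vm, such as OpenTelemetry, can still store data through the in-cluster Service vminsert-vmcluster-main.monitoring-system.svc.cluster.local:8480, while clients in other k8s clusters, such as their own OpenTelemetry instances, use lb:8480.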
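The in-cluster address follows the usual `<service>.<namespace>.svc.cluster.local` pattern. The sketch below builds the full remote-write URL from it; `insert_url` is a hypothetical helper, and it assumes the default cluster domain `cluster.local` and the `/insert/<accountID>/prometheus` path used in the OpenTelemetry config later in this post.

```shell
# Hypothetical helper: in-cluster remote-write URL for vminsert.
# Assumes the default cluster domain "cluster.local" and vminsert's port 8480.
insert_url() {
  svc="$1"; ns="$2"; tenant="$3"
  echo "http://$svc.$ns.svc.cluster.local:8480/insert/$tenant/prometheus"
}
```

For tenant 0 this gives `http://vminsert-vmcluster-main.monitoring-system.svc.cluster.local:8480/insert/0/prometheus`.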

```yaml
# cat vminsert-lb-svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: monitoring
    app.kubernetes.io/instance: vmcluster-main
    app.kubernetes.io/name: vminsert
  name: vminsert-vmcluster-main-lbsvc
  namespace: monitoring-system
spec:
  externalTrafficPolicy: Cluster
  ports:
    - name: http
      nodePort: 30135
      port: 8480
      protocol: TCP
      targetPort: 8480
  selector:
    app.kubernetes.io/component: monitoring
    app.kubernetes.io/instance: vmcluster-main
    app.kubernetes.io/name: vminsert
  sessionAffinity: None
  type: NodePort
```

```yaml
# cat vmselect-lb-svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: monitoring
    app.kubernetes.io/instance: vmcluster-main
    app.kubernetes.io/name: vmselect
  name: vmselect-vmcluster-main-lbsvc
  namespace: monitoring-system
spec:
  externalTrafficPolicy: Cluster
  ports:
    - name: http
      nodePort: 31140
      port: 8481
      protocol: TCP
      targetPort: 8481
  selector:
    app.kubernetes.io/component: monitoring
    app.kubernetes.io/instance: vmcluster-main
    app.kubernetes.io/name: vmselect
  sessionAffinity: None
  type: NodePort
```

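```shell
# Create the Services
kubectl apply -f vmselect-lb-svc.yaml
kubectl apply -f vminsert-lb-svc.yaml
# !! Configure the cloud LB yourself: listeners on 8480 and 8481, backends are
# the cluster node IPs on NodePorts 30135 (vminsert) and 31140 (vmselect)
```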
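The LB mapping is: listener 8480 goes to every node IP on NodePort 30135, and listener 8481 goes to every node IP on NodePort 31140; with `externalTrafficPolicy: Cluster`, any node forwards the traffic to the pods. The sketch below derives the backend list from the PORT(S) column of `kubectl get svc`; the helper names and node IPs are hypothetical.

```shell
# Hypothetical helper: extract the nodePort from a PORT(S) value like "8480:30135/TCP".
node_port() {
  p="${1#*:}"      # drop the "8480:" prefix
  echo "${p%%/*}"  # drop the "/TCP" suffix
}

# Hypothetical helper: one LB backend per node, all on the same nodePort.
lb_backends() {
  port="$1"
  shift
  for ip in "$@"; do
    echo "$ip:$port"
  done
}

# e.g. lb_backends "$(node_port 8480:30135/TCP)" 192.168.0.11 192.168.0.12
```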

```shell
# Finally, check the vm pods and Services
[root@test opt]# kubectl get po,svc -n monitoring-system
NAME                                          READY   STATUS    RESTARTS   AGE
pod/vm-operator-76dd8f7b84-gsbfs              1/1     Running   0          30h
pod/vminsert-vmcluster-main-69766c8f4-r795w   1/1     Running   0          29h
pod/vmselect-vmcluster-main-0                 1/1     Running   0          29h
pod/vmstorage-vmcluster-main-0                1/1     Running   0          29h

NAME                                    TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                      AGE
service/vminsert-vmcluster-main         ClusterIP   10.0.182.73    <none>        8480/TCP                     29h
service/vminsert-vmcluster-main-lbsvc   NodePort    10.0.255.212   <none>        8480:30135/TCP               7h54m
service/vmselect-vmcluster-main         ClusterIP   None           <none>        8481/TCP                     29h
service/vmselect-vmcluster-main-lbsvc   NodePort    10.0.45.239    <none>        8481:31140/TCP               7h54m
service/vmstorage-vmcluster-main        ClusterIP   None           <none>        8482/TCP,8400/TCP,8401/TCP   29h
```

Installing prometheus-node-exporter

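Next, install node exporter to expose metrics of the k8s nodes. OpenTelemetry, installed afterwards, scrapes them and stores them in vmstorage through vminsert; the data is then queried through vmselect.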

```yaml
# kubectl apply -f prometheus-node-exporter-install.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: prometheus-node-exporter
    release: prometheus-node-exporter
  name: prometheus-node-exporter
  namespace: kube-system
spec:
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: prometheus-node-exporter
      release: prometheus-node-exporter
  template:
    metadata:
      labels:
        app: prometheus-node-exporter
        release: prometheus-node-exporter
    spec:
      containers:
        - args:
            - --path.procfs=/host/proc
            - --path.sysfs=/host/sys
            - --path.rootfs=/host/root
            - --web.listen-address=$(HOST_IP):9100
          env:
            - name: HOST_IP
              value: 0.0.0.0
          image: images.huazai.com/release/node-exporter:v1.1.2
          imagePullPolicy: IfNotPresent
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /
              port: 9100
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          name: node-exporter
          ports:
            - containerPort: 9100
              hostPort: 9100
              name: metrics
              protocol: TCP
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /
              port: 9100
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          resources:
            limits:
              cpu: 200m
              memory: 50Mi
            requests:
              cpu: 100m
              memory: 30Mi
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /host/proc
              name: proc
              readOnly: true
            - mountPath: /host/sys
              name: sys
              readOnly: true
            - mountPath: /host/root
              mountPropagation: HostToContainer
              name: root
              readOnly: true
      dnsPolicy: ClusterFirst
      hostNetwork: true
      hostPID: true
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext:
        fsGroup: 65534
        runAsGroup: 65534
        runAsNonRoot: true
        runAsUser: 65534
      # this ServiceAccount must exist before the DaemonSet is applied
      serviceAccount: prometheus-node-exporter
      serviceAccountName: prometheus-node-exporter
      terminationGracePeriodSeconds: 30
      tolerations:
        - effect: NoSchedule
          operator: Exists
      volumes:
        - hostPath:
            path: /proc
            type: ""
          name: proc
        - hostPath:
            path: /sys
            type: ""
          name: sys
        - hostPath:
            path: /
            type: ""
          name: root
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 1
    type: RollingUpdate
```

```shell
# Check node-exporter
[root@test ~]# kubectl get po -n kube-system |grep prometheus
prometheus-node-exporter-89wjk   1/1   Running   0   31h
prometheus-node-exporter-hj4gh   1/1   Running   0   31h
prometheus-node-exporter-hxm8t   1/1   Running   0   31h
prometheus-node-exporter-nhqp6   1/1   Running   0   31h
```

Installing OpenTelemetry

Once the Prometheus node exporter is installed, install OpenTelemetry (I'll cover it in more detail another time).

```yaml
# OpenTelemetry config file. It defines how data is received, processed and exported:
# 1. receivers: where the data comes from
# 2. processors: how the received data is processed
# 3. exporters: where the processed data goes; here it is written through vminsert into vmstorage
# kubectl apply -f opentelemetry-install-cm.yaml
apiVersion: v1
data:
  relay: |
    exporters:
      prometheusremotewrite:
        # I use lb_ip:8480 here, i.e. the vminsert address
        endpoint: http://lb_ip:8480/insert/0/prometheus
        # give each cluster its own label, e.g. cluster: uat/prd
        external_labels:
          cluster: uat
    extensions:
      health_check: {}
    processors:
      batch: {}
      memory_limiter:
        ballast_size_mib: 819
        check_interval: 5s
        limit_mib: 1638
        spike_limit_mib: 512
    receivers:
      prometheus:
        config:
          scrape_configs:
            - job_name: opentelemetry-collector
              scrape_interval: 10s
              static_configs:
                - targets:
                    - localhost:8888
            # ...omitted...
            - job_name: kube-state-metrics
              kubernetes_sd_configs:
                - namespaces:
                    names:
                      - kube-system
                  role: service
              metric_relabel_configs:
                - regex: ReplicaSet;([\w|\-]+)\-[0-9|a-z]+
                  replacement: $$1
                  source_labels:
                    - created_by_kind
                    - created_by_name
                  target_label: created_by_name
                - regex: ReplicaSet
                  replacement: Deployment
                  source_labels:
                    - created_by_kind
                  target_label: created_by_kind
              relabel_configs:
                - action: keep
                  regex: kube-state-metrics
                  source_labels:
                    - __meta_kubernetes_service_name
            - job_name: node-exporter
              kubernetes_sd_configs:
                - namespaces:
                    names:
                      - kube-system
                  role: endpoints
              relabel_configs:
                - action: keep
                  regex: node-exporter
                  source_labels:
                    - __meta_kubernetes_service_name
                - source_labels:
                    - __meta_kubernetes_pod_node_name
                  target_label: node
                - source_labels:
                    - __meta_kubernetes_pod_host_ip
                  target_label: host_ip
            # ...omitted...
    service:
      # the receivers, processors, exporters and extensions defined above must be
      # referenced here, otherwise they have no effect
      extensions:
        - health_check
      pipelines:
        metrics:
          exporters:
            - prometheusremotewrite
          processors:
            - memory_limiter
            - batch
          receivers:
            - prometheus
kind: ConfigMap
metadata:
  annotations:
    meta.helm.sh/release-name: opentelemetry-collector-hua
    meta.helm.sh/release-namespace: kube-system
  labels:
    app.kubernetes.io/instance: opentelemetry-collector-hua
    app.kubernetes.io/name: opentelemetry-collector-hua
  name: opentelemetry-collector-hua
  namespace: kube-system
```
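The first kube-state-metrics `metric_relabel_configs` rule is easier to read with an example: for pods created by a ReplicaSet, it strips the trailing hash so that `created_by_name` holds the Deployment name. The sketch below (hypothetical helper `strip_rs_hash`) applies the same pattern with `sed -E`. Prometheus anchors relabel regexes at both ends, hence the `^` and `$`, and `[\w|\-]` is rewritten as the POSIX class `[[:alnum:]_|-]`. In the collector config, `$$1` is how a literal `$1` is written, because the collector treats `$` as environment-variable expansion. The same anchoring also means the node-exporter job's `keep` rule only matches a Service named exactly `node-exporter`.

```shell
# Hypothetical helper: emulate the relabel rule
#   source_labels [created_by_kind, created_by_name] joined with ";"
#   regex ReplicaSet;([\w|\-]+)\-[0-9|a-z]+  ->  replacement $1
strip_rs_hash() {
  out=$(printf '%s;%s\n' "$1" "$2" |
    sed -E -n 's/^ReplicaSet;([[:alnum:]_|-]+)-[0-9|a-z]+$/\1/p')
  # no match: Prometheus leaves the target label unchanged
  echo "${out:-$2}"
}
```

For example, `strip_rs_hash ReplicaSet coredns-74ff55c5b` prints `coredns`, while `strip_rs_hash DaemonSet prometheus-node-exporter` returns the name unchanged.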

```yaml
# Install OpenTelemetry
# kubectl apply -f opentelemetry-install.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/instance: opentelemetry-collector-hua
    app.kubernetes.io/name: opentelemetry-collector-hua
  name: opentelemetry-collector-hua
  namespace: kube-system
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/instance: opentelemetry-collector-hua
      app.kubernetes.io/name: opentelemetry-collector-hua
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app.kubernetes.io/instance: opentelemetry-collector-hua
        app.kubernetes.io/name: opentelemetry-collector-hua
    spec:
      containers:
        - command:
            - /otelcol
            - --config=/conf/relay.yaml
            - --metrics-addr=0.0.0.0:8888
            - --mem-ballast-size-mib=819
          env:
            - name: MY_POD_IP
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: status.podIP
          image: images.huazai.com/release/opentelemetry-collector:0.27.0
          imagePullPolicy: IfNotPresent
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /
              port: 13133
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          name: opentelemetry-collector-hua
          ports:
            - containerPort: 4317
              name: otlp
              protocol: TCP
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /
              port: 13133
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          resources:
            limits:
              cpu: "1"
              memory: 2Gi
            requests:
              cpu: 500m
              memory: 1Gi
          volumeMounts:
            - mountPath: /conf
              # volume backed by the OpenTelemetry ConfigMap created above
              name: opentelemetry-collector-configmap-hua
            - mountPath: /etc/otel-collector/secrets/etcd-cert/
              name: etcd-tls
              readOnly: true
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      # create this ServiceAccount yourself; for kubernetes_sd_configs it needs
      # RBAC permission to list/watch services, endpoints and pods
      serviceAccount: opentelemetry-collector-hua
      serviceAccountName: opentelemetry-collector-hua
      terminationGracePeriodSeconds: 30
      volumes:
        - configMap:
            defaultMode: 420
            items:
              - key: relay
                path: relay.yaml
            # the OpenTelemetry ConfigMap created above
            name: opentelemetry-collector-hua
          name: opentelemetry-collector-configmap-hua
        - name: etcd-tls
          # the etcd-tls Secret must already exist in kube-system
          secret:
            defaultMode: 420
            secretName: etcd-tls
```
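The memory settings in the ConfigMap and the `--mem-ballast-size-mib` flag appear to be fixed fractions of the container's 2Gi (2048 MiB) memory limit: about 80% for `limit_mib`, 25% for `spike_limit_mib` and 40% for the ballast. That is my reading of the numbers (they match the defaults the OpenTelemetry Helm chart derives from the memory limit), not something stated in this post. The sketch below recomputes them with integer arithmetic and also prints the memory_limiter soft limit, which is `limit_mib - spike_limit_mib`; `mem_settings` is a hypothetical helper.

```shell
# Hypothetical helper: derive memory_limiter / ballast values from a memory limit in MiB,
# assuming the 80% / 25% / 40% split described above (integer division rounds down).
mem_settings() {
  limit="$1"
  hard=$((limit * 80 / 100))
  spike=$((limit * 25 / 100))
  echo "limit_mib=$hard"
  echo "spike_limit_mib=$spike"
  echo "ballast_size_mib=$((limit * 40 / 100))"
  # memory_limiter starts refusing data above the soft limit = limit_mib - spike_limit_mib
  echo "soft_limit_mib=$((hard - spike))"
}
```

For a 2048 MiB limit this yields 1638, 512 and 819, matching the ConfigMap, with a soft limit of 1126 MiB.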

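Check that OpenTelemetry is running. If OpenTelemetry runs in the same k8s cluster as vm, use the in-cluster Service address rather than the LB: the cloud provider's layer-4 listener currently cannot have a backend server act as both client and server.

```shell
[root@kube-control-1 ~]# kubectl get po -n kube-system |grep opentelemetry-collector-hua
opentelemetry-collector-hua-647c6c64c7-j6p4b   1/1   Running   0   8h
```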

Verifying the installation

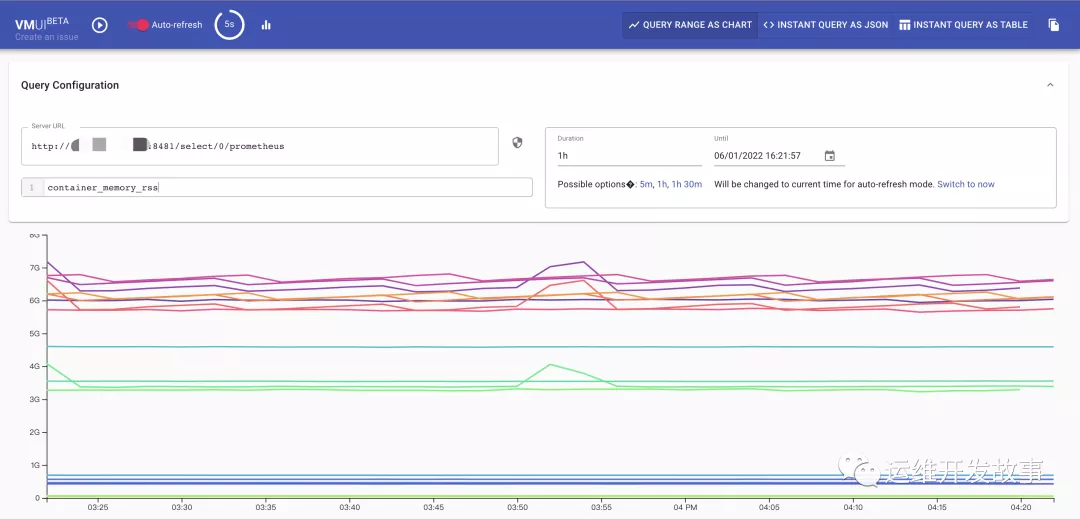

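Once all components are installed, open http://lb:8481/select/0/vmui in a browser and enter http://lb:8481/select/0/prometheus as the server URL. Then enter a metric to query its data. You can also turn on auto-refresh in the top-left corner.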
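Both URLs follow the `/select/<accountID>/...` prefix on vmselect's port 8481, where `0` is the tenant (accountID) that OpenTelemetry writes to via `/insert/0/...`. The hypothetical helpers below build them for a given LB host and tenant; the `/prometheus` URL is also what a Grafana Prometheus data source would point at.

```shell
# Hypothetical helpers: vmselect URLs for a given LB host and tenant (accountID).
vmui_url() {
  echo "http://$1:8481/select/$2/vmui"
}

prom_api_base() {
  echo "http://$1:8481/select/$2/prometheus"
}
```

From the command line, a query against the same endpoint could look like `curl -G "$(prom_api_base lb 0)/api/v1/query" --data-urlencode 'query=node_load1{cluster="uat"}'`, where `lb` stands for your LB address and `cluster="uat"` is the external label set in the OpenTelemetry exporter.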

Summary

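Overall, the installation is fairly simple. Once it is done, a single vm can store monitoring data from multiple k8s clusters. vm supports MetricsQL, which is based on PromQL, and it can also serve as a Grafana data source. Compare that with the old approach: installing Prometheus separately in every k8s cluster, configuring storage for each one, and opening each cluster's Prometheus UI whenever you need to query data. That is a bit more cumbersome, isn't it? If vm looks good to you too, give it a try!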

References

- https://github.com/VictoriaMetrics/VictoriaMetrics/tree/cluster

- https://docs.victoriametrics.com/

- https://opentelemetry.io/docs/

- https://prometheus.io/docs/prometheus/latest/configuration/configuration/