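The author has developed a simple, stable and extensible delayed-message-queue framework for high-concurrency scenarios, with precise scheduled-task and delay-queue processing. In the six-plus months since it was open-sourced, it has provided precise scheduling for more than a dozen small and medium-sized companies and has held up in production. So that more readers can benefit, here is the address of the open-source framework: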

https://github.com/sunshinelyz/mykit-delay

Preface

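The industry has some basic requirements for high availability. In short, they can be summarized as follows.

- The system architecture has no single point of failure.

- Service availability is guaranteed to the greatest extent possible.

Availability is usually expressed as a number of "nines": the percentage of total time during which the system can serve requests. For example, 99.99% availability means the system's total downtime over a year must not exceed about 52 minutes.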
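The 52-minute figure comes from multiplying the number of minutes in a year by the fraction of time the system may be unavailable. Below is a minimal sketch of that arithmetic, assuming a 365-day year and ignoring leap years. For 99.99% it gives roughly 52.56 minutes.

```python
# Sketch: yearly downtime budget for an availability target.
# Assumption: a 365-day year (leap years ignored).
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def max_downtime_minutes(availability_percent: float) -> float:
    """Return the maximum yearly downtime, in minutes, for an availability %."""
    unavailable_fraction = 1 - availability_percent / 100
    return MINUTES_PER_YEAR * unavailable_fraction


if __name__ == "__main__":
    for target in (99.0, 99.9, 99.99, 99.999):
        print(f"{target}% -> {max_downtime_minutes(target):.2f} minutes/year")
```

Each additional nine cuts the downtime budget by a factor of ten.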

系统高可用架构

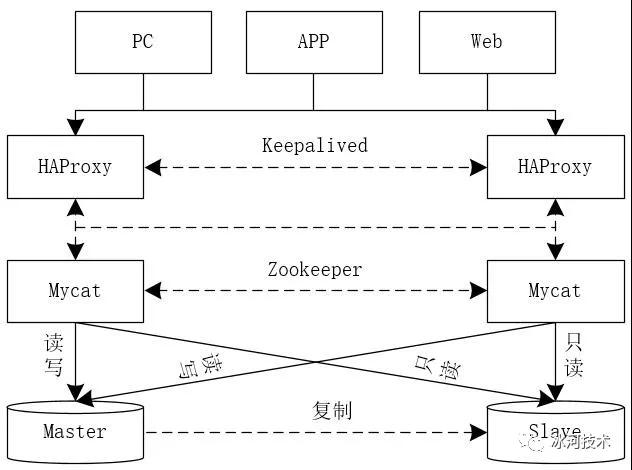

我们既然需要实现系统的高可用架构,那么,我们到底需要搭建一个什么样的系统架构呢?我们可以将需要搭建的系统架构简化成下图所示。

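Server Planning

Because my computer's resources are limited, I am building the high-availability environment on five servers. You can extend the environment to more servers by following this article; the steps are the same.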

| Hostname | IP address | Installed services |
|---|---|---|
| binghe151 | 192.168.175.151 | Mycat, Zookeeper, MySQL, HAProxy, Keepalived, Xinetd |
| binghe152 | 192.168.175.152 | Zookeeper, MySQL |
| binghe153 | 192.168.175.153 | Zookeeper, MySQL |
| binghe154 | 192.168.175.154 | Mycat, MySQL, HAProxy, Keepalived, Xinetd |
| binghe155 | 192.168.175.155 | MySQL |

Note: HAProxy and Keepalived are best deployed on the same servers as Mycat.

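Installing the JDK

Mycat and Zookeeper both need a JDK to run, so we install a JDK on every server.

Here I use binghe151 as the example; installation on the other servers is identical. The steps are as follows.

(1) Download JDK 1.8 from the official JDK website: https://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

Note: the package I downloaded is jdk-8u212-linux-x64.tar.gz. If the JDK has been updated since, download the corresponding version.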

(2) Upload jdk-8u212-linux-x64.tar.gz to the /usr/local/src directory on binghe151.

(3) Extract jdk-8u212-linux-x64.tar.gz:

```shell
cd /usr/local/src
tar -zxvf jdk-8u212-linux-x64.tar.gz
```

(4) Move the extracted jdk1.8.0_212 directory to /usr/local on binghe151, which is where JAVA_HOME will point:

```shell
mv jdk1.8.0_212/ /usr/local/
```

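(5) Configure the JDK environment variables by editing /etc/profile:

```shell
vim /etc/profile
```

Add the following lines:

```shell
JAVA_HOME=/usr/local/jdk1.8.0_212
CLASS_PATH=.:$JAVA_HOME/lib
PATH=$JAVA_HOME/bin:$PATH
export JAVA_HOME CLASS_PATH PATH
```

Apply the new environment variables:

```shell
source /etc/profile
```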

(6) Check the JDK version:

```text
[root@binghe151 ~]# java -version
java version "1.8.0_212"
Java(TM) SE Runtime Environment (build 1.8.0_212-b10)
Java HotSpot(TM) 64-Bit Server VM (build 25.212-b10, mixed mode)
```

The JDK version is printed correctly, so the JDK is installed.

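Installing Mycat

Download the Mycat 1.6.7.4 Release, extract it to /usr/local/mycat on the server and configure Mycat's environment variables. Then edit Mycat's configuration files. The final Mycat configuration is shown below.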

schema.xml

```xml
<?xml version="1.0"?>
<!DOCTYPE mycat:schema SYSTEM "schema.dtd">
<mycat:schema xmlns:mycat="http://io.mycat/">
    <schema name="shop" checkSQLschema="false" sqlMaxLimit="1000">
        <!--<table name="order_master" primaryKey="order_id" dataNode = "ordb"/>-->
        <table name="order_master" primaryKey="order_id" dataNode = "orderdb01,orderdb02,orderdb03,orderdb04" rule="order_master" autoIncrement="true">
            <childTable name="order_detail" primaryKey="order_detail_id" joinKey="order_id" parentKey="order_id" autoIncrement="true"/>
        </table>
        <table name="order_cart" primaryKey="cart_id" dataNode = "ordb"/>
        <table name="order_customer_addr" primaryKey="customer_addr_id" dataNode = "ordb"/>
        <table name="region_info" primaryKey="region_id" dataNode = "ordb,prodb,custdb" type="global"/>
        <table name="serial" primaryKey="id" dataNode = "ordb"/>
        <table name="shipping_info" primaryKey="ship_id" dataNode = "ordb"/>
        <table name="warehouse_info" primaryKey="w_id" dataNode = "ordb"/>
        <table name="warehouse_proudct" primaryKey="wp_id" dataNode = "ordb"/>
        <table name="product_brand_info" primaryKey="brand_id" dataNode = "prodb"/>
        <table name="product_category" primaryKey="category_id" dataNode = "prodb"/>
        <table name="product_comment" primaryKey="comment_id" dataNode = "prodb"/>
        <table name="product_info" primaryKey="product_id" dataNode = "prodb"/>
        <table name="product_pic_info" primaryKey="product_pic_id" dataNode = "prodb"/>
        <table name="product_supplier_info" primaryKey="supplier_id" dataNode = "prodb"/>
        <table name="customer_balance_log" primaryKey="balance_id" dataNode = "custdb"/>
        <table name="customer_inf" primaryKey="customer_inf_id" dataNode = "custdb"/>
        <table name="customer_level_inf" primaryKey="customer_level" dataNode = "custdb"/>
        <table name="customer_login" primaryKey="customer_id" dataNode = "custdb"/>
        <table name="customer_login_log" primaryKey="login_id" dataNode = "custdb"/>
        <table name="customer_point_log" primaryKey="point_id" dataNode = "custdb"/>
    </schema>

    <dataNode name="mycat" dataHost="binghe151" database="mycat" />
    <dataNode name="ordb" dataHost="binghe152" database="order_db" />
    <dataNode name="prodb" dataHost="binghe153" database="product_db" />
    <dataNode name="custdb" dataHost="binghe154" database="customer_db" />
    <dataNode name="orderdb01" dataHost="binghe152" database="orderdb01" />
    <dataNode name="orderdb02" dataHost="binghe152" database="orderdb02" />
    <dataNode name="orderdb03" dataHost="binghe153" database="orderdb03" />
    <dataNode name="orderdb04" dataHost="binghe153" database="orderdb04" />

    <dataHost name="binghe151" maxCon="1000" minCon="10" balance="1"
              writeType="0" dbType="mysql" dbDriver="native" switchType="1" slaveThreshold="100">
        <heartbeat>select user()</heartbeat>
        <writeHost host="binghe51" url="192.168.175.151:3306" user="mycat" password="mycat"/>
    </dataHost>
    <dataHost name="binghe152" maxCon="1000" minCon="10" balance="1"
              writeType="0" dbType="mysql" dbDriver="native" switchType="1" slaveThreshold="100">
        <heartbeat>select user()</heartbeat>
        <writeHost host="binghe52" url="192.168.175.152:3306" user="mycat" password="mycat"/>
    </dataHost>
    <dataHost name="binghe153" maxCon="1000" minCon="10" balance="1"
              writeType="0" dbType="mysql" dbDriver="native" switchType="1" slaveThreshold="100">
        <heartbeat>select user()</heartbeat>
        <writeHost host="binghe53" url="192.168.175.153:3306" user="mycat" password="mycat"/>
    </dataHost>
    <dataHost name="binghe154" maxCon="1000" minCon="10" balance="1"
              writeType="0" dbType="mysql" dbDriver="native" switchType="1" slaveThreshold="100">
        <heartbeat>select user()</heartbeat>
        <writeHost host="binghe54" url="192.168.175.154:3306" user="mycat" password="mycat"/>
    </dataHost>
</mycat:schema>
```

server.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE mycat:server SYSTEM "server.dtd">
<mycat:server xmlns:mycat="http://io.mycat/">
    <system>
        <property name="useHandshakeV10">1</property>
        <property name="defaultSqlParser">druidparser</property>
        <property name="serverPort">3307</property>
        <property name="managerPort">3308</property>
        <property name="nonePasswordLogin">0</property>
        <property name="bindIp">0.0.0.0</property>
        <property name="charset">utf8mb4</property>
        <property name="frontWriteQueueSize">2048</property>
        <property name="txIsolation">2</property>
        <property name="processors">2</property>
        <property name="idleTimeout">1800000</property>
        <property name="sqlExecuteTimeout">300</property>
        <property name="useSqlStat">0</property>
        <property name="useGlobleTableCheck">0</property>
        <property name="sequenceHandlerType">1</property>
        <property name="defaultMaxLimit">1000</property>
        <property name="maxPacketSize">104857600</property>
        <property name="sqlInterceptor">
            io.mycat.server.interceptor.impl.StatisticsSqlInterceptor
        </property>
        <property name="sqlInterceptorType">
            UPDATE,DELETE,INSERT
        </property>
        <property name="sqlInterceptorFile">/tmp/sql.txt</property>
    </system>
    <firewall>
        <whitehost>
            <host user="mycat" host="192.168.175.151"></host>
        </whitehost>
        <blacklist check="true">
            <property name="noneBaseStatementAllow">true</property>
            <property name="deleteWhereNoneCheck">true</property>
        </blacklist>
    </firewall>
    <user name="mycat" defaultAccount="true">
        <property name="usingDecrypt">1</property>
        <property name="password">cTwf23RrpBCEmalp/nx0BAKenNhvNs2NSr9nYiMzHADeEDEfwVWlI6hBDccJjNBJqJxnunHFp5ae63PPnMfGYA==</property>
        <property name="schemas">shop</property>
    </user>
</mycat:server>
```

rule.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE mycat:rule SYSTEM "rule.dtd">
<mycat:rule xmlns:mycat="http://io.mycat/">
    <tableRule name="order_master">
        <rule>
            <columns>customer_id</columns>
            <algorithm>mod-long</algorithm>
        </rule>
    </tableRule>
    <function name="mod-long" class="io.mycat.route.function.PartitionByMod">
        <property name="count">4</property>
    </function>
</mycat:rule>
```

sequence_db_conf.properties

```properties
#sequence stored in datanode
GLOBAL=mycat
ORDER_MASTER=mycat
ORDER_DETAIL=mycat
```

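The Mycat configuration above is for reference only. You do not have to copy it exactly; configure Mycat according to your own business needs. The focus of this article is building a high-availability Mycat environment.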
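In rule.xml above, the order_master table is sharded on customer_id. The sharding uses the mod-long function (io.mycat.route.function.PartitionByMod) with count=4, and schema.xml lists four data nodes for the table.

Below is a minimal sketch of that routing. It assumes PartitionByMod takes the absolute value of the sharding column and uses the remainder as a zero-based index into the table's dataNode list, in the order written in schema.xml. The function names are hypothetical.

```python
# Sketch of mod-long routing for order_master.
# Assumption: abs(customer_id) % count is a zero-based index into the
# table's dataNode list, in the order it is written in schema.xml.
ORDER_MASTER_NODES = ["orderdb01", "orderdb02", "orderdb03", "orderdb04"]
PARTITION_COUNT = 4  # <property name="count">4</property> in rule.xml


def partition_index(customer_id: int, count: int = PARTITION_COUNT) -> int:
    """Return the partition index for a sharding-column value."""
    return abs(customer_id) % count


def route_order_master(customer_id: int) -> str:
    """Return the dataNode that would store order_master rows for a customer."""
    return ORDER_MASTER_NODES[partition_index(customer_id)]


if __name__ == "__main__":
    for cid in (1, 2, 3, 4, 10):
        print(cid, "->", route_order_master(cid))
```

The count must match the number of data nodes listed for the table. Because order_detail is declared as a childTable joined on order_id, its rows are stored on the same data node as their parent order and are not routed independently.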

In MySQL, create the account that Mycat uses to connect to MySQL:

```sql
CREATE USER 'mycat'@'192.168.175.%' IDENTIFIED BY 'mycat';
ALTER USER 'mycat'@'192.168.175.%' IDENTIFIED WITH mysql_native_password BY 'mycat';
GRANT SELECT, INSERT, UPDATE, DELETE, EXECUTE ON *.* TO 'mycat'@'192.168.175.%';
FLUSH PRIVILEGES;
```

Installing the Zookeeper Cluster

With the JDK installed and configured, the next step is the Zookeeper cluster. Following the server plan, Zookeeper is deployed on binghe151, binghe152 and binghe153.

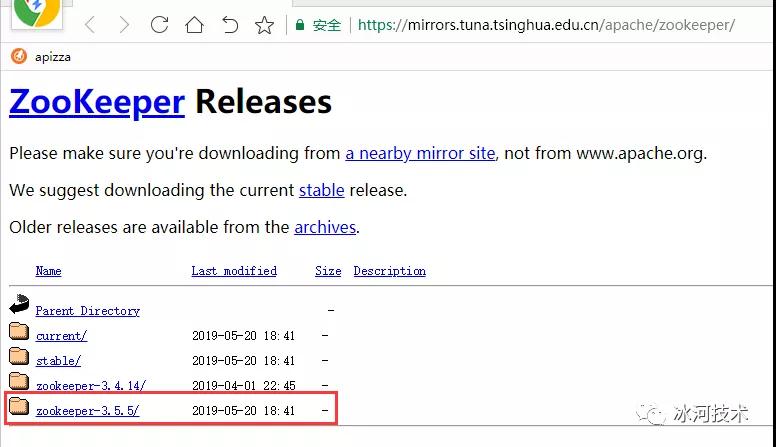

1. Download Zookeeper

Download the Zookeeper package from an Apache mirror, for example https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/, as shown in the figure.

Alternatively, run the following command on binghe151 to download zookeeper-3.5.5 directly:

```shell
wget https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/zookeeper-3.5.5/apache-zookeeper-3.5.5-bin.tar.gz
```

This downloads apache-zookeeper-3.5.5-bin.tar.gz onto binghe151.

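2. Install and configure Zookeeper

Note: steps (1), (2) and (3) are all performed on binghe151.

(1) Extract the Zookeeper package

On binghe151, run the following commands to extract Zookeeper into /usr/local/ and rename its directory to zookeeper-3.5.5:

```shell
tar -zxvf apache-zookeeper-3.5.5-bin.tar.gz -C /usr/local/
mv /usr/local/apache-zookeeper-3.5.5-bin /usr/local/zookeeper-3.5.5
```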

(2) Configure the Zookeeper environment variables

As with the JDK, add the Zookeeper environment variables to /etc/profile:

```shell
ZOOKEEPER_HOME=/usr/local/zookeeper-3.5.5
PATH=$ZOOKEEPER_HOME/bin:$PATH
export ZOOKEEPER_HOME PATH
```

Combined with the JDK variables configured earlier, the complete /etc/profile configuration is:

```shell
MYSQL_HOME=/usr/local/mysql
JAVA_HOME=/usr/local/jdk1.8.0_212
MYCAT_HOME=/usr/local/mycat
ZOOKEEPER_HOME=/usr/local/zookeeper-3.5.5
MPC_HOME=/usr/local/mpc-1.1.0
GMP_HOME=/usr/local/gmp-6.1.2
MPFR_HOME=/usr/local/mpfr-4.0.2
CLASS_PATH=.:$JAVA_HOME/lib
LD_LIBRARY_PATH=$MPC_HOME/lib:$GMP_HOME/lib:$MPFR_HOME/lib:$LD_LIBRARY_PATH
PATH=$MYSQL_HOME/bin:$JAVA_HOME/bin:$ZOOKEEPER_HOME/bin:$MYCAT_HOME/bin:$PATH
export JAVA_HOME ZOOKEEPER_HOME MYCAT_HOME CLASS_PATH MYSQL_HOME MPC_HOME GMP_HOME MPFR_HOME LD_LIBRARY_PATH PATH
```

(3) Configure Zookeeper

First, rename zoo_sample.cfg to zoo.cfg. The file is in the conf directory under ZOOKEEPER_HOME, the Zookeeper installation directory:

```shell
cd /usr/local/zookeeper-3.5.5/conf/
mv zoo_sample.cfg zoo.cfg
```

Next, edit zoo.cfg. After the changes it contains:

```properties
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/usr/local/zookeeper-3.5.5/data
dataLogDir=/usr/local/zookeeper-3.5.5/dataLog
clientPort=2181
server.1=binghe151:2888:3888
server.2=binghe152:2888:3888
server.3=binghe153:2888:3888
```

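Create the data and dataLog directories under the Zookeeper installation directory:

```shell
mkdir -p /usr/local/zookeeper-3.5.5/data
mkdir -p /usr/local/zookeeper-3.5.5/dataLog
```

Change to the new data directory and create a myid file containing the number 1:

```shell
cd /usr/local/zookeeper-3.5.5/data
vim myid
```

Write the number 1 into the myid file and save it.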

3. Copy Zookeeper and the environment variable file to the other servers

Note: steps (1) and (2) are performed on binghe151.

(1) Copy Zookeeper to the other servers

Following the server plan, copy Zookeeper to binghe152 and binghe153:

```shell
scp -r /usr/local/zookeeper-3.5.5/ binghe152:/usr/local/
scp -r /usr/local/zookeeper-3.5.5/ binghe153:/usr/local/
```

(2) Copy the environment variable file to the other servers

Following the server plan, copy /etc/profile to binghe152 and binghe153:

```shell
scp /etc/profile binghe152:/etc/
scp /etc/profile binghe153:/etc/
```

You may be prompted for passwords; enter them when asked.

4. Modify the myid file on the other servers

Set the content of Zookeeper's myid file to 2 on binghe152 and to 3 on binghe153.

On binghe152:

```shell
echo "2" > /usr/local/zookeeper-3.5.5/data/myid
cat /usr/local/zookeeper-3.5.5/data/myid
2
```

On binghe153:

```shell
echo "3" > /usr/local/zookeeper-3.5.5/data/myid
cat /usr/local/zookeeper-3.5.5/data/myid
3
```
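The number in each host's myid file must equal the N in that host's server.N line in zoo.cfg, because that is how a Zookeeper peer identifies itself in the ensemble.

Below is a small sketch that derives both the server.N lines and the per-host myid values from one ordered host list, so the two cannot drift apart. It assumes the three-host layout used here; the helper names are hypothetical.

```python
# Sketch: derive zoo.cfg server.N lines and the matching myid values from a
# single ordered host list (hypothetical helpers, layout from this article).
ZK_HOSTS = ["binghe151", "binghe152", "binghe153"]
PEER_PORT = 2888      # port followers use to connect to the leader
ELECTION_PORT = 3888  # port used for leader election


def server_lines(hosts):
    """Return server.N=host:2888:3888 lines, numbering hosts from 1."""
    return [f"server.{i}={h}:{PEER_PORT}:{ELECTION_PORT}"
            for i, h in enumerate(hosts, start=1)]


def myid_map(hosts):
    """Map each host to the value its data/myid file must contain."""
    return {h: str(i) for i, h in enumerate(hosts, start=1)}


if __name__ == "__main__":
    print("\n".join(server_lines(ZK_HOSTS)))
    for host, myid in myid_map(ZK_HOSTS).items():
        print(f'{host}: echo "{myid}" > /usr/local/zookeeper-3.5.5/data/myid')
```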

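5. Apply the environment variables

On each of binghe151, binghe152 and binghe153, run the following to apply the environment variables:

```shell
source /etc/profile
```

6. Start the Zookeeper cluster

On each of binghe151, binghe152 and binghe153, run the following to start Zookeeper:

```shell
zkServer.sh start
```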

7. Check the status of the Zookeeper cluster

binghe151:

```text
[root@binghe151 ~]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /usr/local/zookeeper-3.5.5/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost.
Mode: follower
```

binghe152:

```text
[root@binghe152 local]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /usr/local/zookeeper-3.5.5/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost.
Mode: leader
```

binghe153:

```text
[root@binghe153 ~]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /usr/local/zookeeper-3.5.5/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost.
Mode: follower
```

Zookeeper on binghe151 and binghe153 runs as a follower, and on binghe152 as the leader.
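Besides zkServer.sh status, you can query a node's role over the client port with the srvr four-letter command. As far as I know, srvr is the only four-letter word whitelisted by default in Zookeeper 3.5 (see 4lw.commands.whitelist).

Below is a sketch that assumes client port 2181 from zoo.cfg is reachable. The function names are hypothetical.

```python
import socket


def zk_srvr(host: str, port: int = 2181, timeout: float = 3.0) -> str:
    """Send the 'srvr' four-letter word and return the server's text reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"srvr")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # Zookeeper closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_mode(reply: str) -> str:
    """Extract the role (leader, follower or standalone) from a srvr reply."""
    for line in reply.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    raise ValueError("no 'Mode:' line in srvr reply")
```

For example, `parse_mode(zk_srvr("binghe152"))` should report the same role that zkServer.sh status printed on that host. Polling all three hosts is a quick way to confirm that exactly one leader exists.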

Initializing the Mycat Configuration in the Zookeeper Cluster

Note: the Zookeeper data is initialized on binghe151, because Mycat is already installed there.

1. Review the initialization script

The bin directory under the Mycat installation directory contains an init_zk_data.sh script:

```text
[root@binghe151 ~]# ll /usr/local/mycat/bin/
total 384
-rwxr-xr-x 1 root root   3658 Feb 26 17:10 dataMigrate.sh
-rwxr-xr-x 1 root root   1272 Feb 26 17:10 init_zk_data.sh
-rwxr-xr-x 1 root root  15701 Feb 28 20:51 mycat
-rwxr-xr-x 1 root root   2986 Feb 26 17:10 rehash.sh
-rwxr-xr-x 1 root root   2526 Feb 26 17:10 startup_nowrap.sh
-rwxr-xr-x 1 root root 140198 Feb 28 20:51 wrapper-linux-ppc-64
-rwxr-xr-x 1 root root  99401 Feb 28 20:51 wrapper-linux-x86-32
-rwxr-xr-x 1 root root 111027 Feb 28 20:51 wrapper-linux-x86-64
```

init_zk_data.sh loads Mycat's configuration into Zookeeper. It reads the configuration files under the conf directory of the Mycat installation and writes them into the Zookeeper cluster.

2. Copy the Mycat configuration files

First, look at the files in the conf directory under the Mycat installation directory:

```text
[root@binghe151 ~]# cd /usr/local/mycat/conf/
[root@binghe151 conf]# ll
total 108
-rwxrwxrwx 1 root root   92 Feb 26 17:10 autopartition-long.txt
-rwxrwxrwx 1 root root   51 Feb 26 17:10 auto-sharding-long.txt
-rwxrwxrwx 1 root root   67 Feb 26 17:10 auto-sharding-rang-mod.txt
-rwxrwxrwx 1 root root  340 Feb 26 17:10 cacheservice.properties
-rwxrwxrwx 1 root root 3338 Feb 26 17:10 dbseq.sql
-rwxrwxrwx 1 root root 3532 Feb 26 17:10 dbseq - utf8mb4.sql
-rw-r--r-- 1 root root   86 Mar  1 22:37 dnindex.properties
-rwxrwxrwx 1 root root  446 Feb 26 17:10 ehcache.xml
-rwxrwxrwx 1 root root 2454 Feb 26 17:10 index_to_charset.properties
-rwxrwxrwx 1 root root 1285 Feb 26 17:10 log4j2.xml
-rwxrwxrwx 1 root root  183 Feb 26 17:10 migrateTables.properties
-rwxrwxrwx 1 root root  271 Feb 26 17:10 myid.properties
-rwxrwxrwx 1 root root   16 Feb 26 17:10 partition-hash-int.txt
-rwxrwxrwx 1 root root  108 Feb 26 17:10 partition-range-mod.txt
-rwxrwxrwx 1 root root  988 Mar  1 16:59 rule.xml
-rwxrwxrwx 1 root root 3883 Mar  3 23:59 schema.xml
-rwxrwxrwx 1 root root  440 Feb 26 17:10 sequence_conf.properties
-rwxrwxrwx 1 root root   84 Mar  3 23:52 sequence_db_conf.properties
-rwxrwxrwx 1 root root   29 Feb 26 17:10 sequence_distributed_conf.properties
-rwxrwxrwx 1 root root   28 Feb 26 17:10 sequence_http_conf.properties
-rwxrwxrwx 1 root root   53 Feb 26 17:10 sequence_time_conf.properties
-rwxrwxrwx 1 root root 2420 Mar  4 15:14 server.xml
-rwxrwxrwx 1 root root   18 Feb 26 17:10 sharding-by-enum.txt
-rwxrwxrwx 1 root root 4251 Feb 28 20:51 wrapper.conf
drwxrwxrwx 2 root root 4096 Feb 28 21:17 zkconf
drwxrwxrwx 2 root root 4096 Feb 28 21:17 zkdownload
```

Next, copy schema.xml, server.xml, rule.xml and sequence_db_conf.properties from the conf directory into its zkconf subdirectory:

```shell
cp schema.xml server.xml rule.xml sequence_db_conf.properties zkconf/
```

3. Write the Mycat configuration into the Zookeeper cluster

Run init_zk_data.sh to initialize the configuration in the Zookeeper cluster:

```text
[root@binghe151 bin]# /usr/local/mycat/bin/init_zk_data.sh
o2020-03-08 20:03:13 INFO JAVA_CMD=/usr/local/jdk1.8.0_212/bin/java
o2020-03-08 20:03:13 INFO Start to initialize /mycat of ZooKeeper
o2020-03-08 20:03:14 INFO Done
```

The output shows that Mycat wrote its initial configuration into Zookeeper successfully.

4. Verify that the Mycat configuration was written to Zookeeper

We can log in with the Zookeeper client zkCli.sh and check whether the Mycat configuration was written to Zookeeper.

First, log in to Zookeeper:

```text
[root@binghe151 ~]# zkCli.sh
Connecting to localhost:2181
# ... N lines of output omitted ...
Welcome to ZooKeeper!
WATCHER::
WatchedEvent state:SyncConnected type:None path:null
[zk: localhost:2181(CONNECTED) 0]
```

Next, look at the Mycat data from the Zookeeper command line:

```text
[zk: localhost:2181(CONNECTED) 0] ls /
[mycat, zookeeper]
[zk: localhost:2181(CONNECTED) 1] ls /mycat
[mycat-cluster-1]
[zk: localhost:2181(CONNECTED) 2] ls /mycat/mycat-cluster-1
[cache, line, rules, schema, sequences, server]
[zk: localhost:2181(CONNECTED) 3]
```

There are six child nodes under /mycat/mycat-cluster-1. Next, look at the schema node:

```text
[zk: localhost:2181(CONNECTED) 3] ls /mycat/mycat-cluster-1/schema
[dataHost, dataNode, schema]
```

Next, look at the dataHost configuration:

```text
[zk: localhost:2181(CONNECTED) 4] get /mycat/mycat-cluster-1/schema/dataHost
[{"balance":1,"maxCon":1000,"minCon":10,"name":"binghe151","writeType":0,"switchType":1,"slaveThreshold":100,"dbType":"mysql","dbDriver":"native","heartbeat":"select user()","writeHost":[{"host":"binghe51","url":"192.168.175.151:3306","password":"root","user":"root"}]},{"balance":1,"maxCon":1000,"minCon":10,"name":"binghe152","writeType":0,"switchType":1,"slaveThreshold":100,"dbType":"mysql","dbDriver":"native","heartbeat":"select user()","writeHost":[{"host":"binghe52","url":"192.168.175.152:3306","password":"root","user":"root"}]},{"balance":1,"maxCon":1000,"minCon":10,"name":"binghe153","writeType":0,"switchType":1,"slaveThreshold":100,"dbType":"mysql","dbDriver":"native","heartbeat":"select user()","writeHost":[{"host":"binghe53","url":"192.168.175.153:3306","password":"root","user":"root"}]},{"balance":1,"maxCon":1000,"minCon":10,"name":"binghe154","writeType":0,"switchType":1,"slaveThreshold":100,"dbType":"mysql","dbDriver":"native","heartbeat":"select user()","writeHost":[{"host":"binghe54","url":"192.168.175.154:3306","password":"root","user":"root"}]}]
```

The output above is hard to read, but it is clearly JSON. Formatted, it looks like this:

```json
[
  {
    "balance": 1,
    "maxCon": 1000,
    "minCon": 10,
    "name": "binghe151",
    "writeType": 0,
    "switchType": 1,
    "slaveThreshold": 100,
    "dbType": "mysql",
    "dbDriver": "native",
    "heartbeat": "select user()",
    "writeHost": [
      {
        "host": "binghe51",
        "url": "192.168.175.151:3306",
        "password": "root",
        "user": "root"
      }
    ]
  },
  {
    "balance": 1,
    "maxCon": 1000,
    "minCon": 10,
    "name": "binghe152",
    "writeType": 0,
    "switchType": 1,
    "slaveThreshold": 100,
    "dbType": "mysql",
    "dbDriver": "native",
    "heartbeat": "select user()",
    "writeHost": [
      {
        "host": "binghe52",
        "url": "192.168.175.152:3306",
        "password": "root",
        "user": "root"
      }
    ]
  },
  {
    "balance": 1,
    "maxCon": 1000,
    "minCon": 10,
    "name": "binghe153",
    "writeType": 0,
    "switchType": 1,
    "slaveThreshold": 100,
    "dbType": "mysql",
    "dbDriver": "native",
    "heartbeat": "select user()",
    "writeHost": [
      {
        "host": "binghe53",
        "url": "192.168.175.153:3306",
        "password": "root",
        "user": "root"
      }
    ]
  },
  {
    "balance": 1,
    "maxCon": 1000,
    "minCon": 10,
    "name": "binghe154",
    "writeType": 0,
    "switchType": 1,
    "slaveThreshold": 100,
    "dbType": "mysql",
    "dbDriver": "native",
    "heartbeat": "select user()",
    "writeHost": [
      {
        "host": "binghe54",
        "url": "192.168.175.154:3306",
        "password": "root",
        "user": "root"
      }
    ]
  }
]
```

As shown, the dataHost settings from Mycat's schema.xml were successfully written to Zookeeper.
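The formatting above can be reproduced with Python's standard json module. Below is a sketch that assumes the raw output of zkCli's get command has been saved as a string. It pretty-prints the JSON and lists the writeHost URLs for each dataHost. The helper names are hypothetical, and the sample is trimmed to a single dataHost.

```python
import json


def pretty(raw: str) -> str:
    """Re-indent a compact JSON string, keeping the original key order."""
    return json.dumps(json.loads(raw), indent=2, ensure_ascii=False)


def write_host_urls(raw: str) -> dict:
    """Map each dataHost name to the URLs of its writeHost entries."""
    return {dh["name"]: [wh["url"] for wh in dh.get("writeHost", [])]
            for dh in json.loads(raw)}


if __name__ == "__main__":
    # Trimmed sample based on the zkCli output above (one dataHost only).
    sample = ('[{"balance":1,"maxCon":1000,"minCon":10,"name":"binghe151",'
              '"writeHost":[{"host":"binghe51","url":"192.168.175.151:3306"}]}]')
    print(pretty(sample))
    print(write_host_urls(sample))
```

Listing the URLs this way is a quick check that every dataHost in Zookeeper points at the MySQL instance you expect.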

To verify that the Mycat configuration has been synchronized to the other Zookeeper nodes, we can also log in to Zookeeper on binghe152 and binghe153 and check it there.

binghe152:

```text
[root@binghe152 ~]# zkCli.sh
Connecting to localhost:2181
# ... N lines of output omitted ...
[zk: localhost:2181(CONNECTED) 0] get /mycat/mycat-cluster-1/schema/dataHost
[{"balance":1,"maxCon":1000,"minCon":10,"name":"binghe151","writeType":0,"switchType":1,"slaveThreshold":100,"dbType":"mysql","dbDriver":"native","heartbeat":"select user()","writeHost":[{"host":"binghe51","url":"192.168.175.151:3306","password":"root","user":"root"}]},{"balance":1,"maxCon":1000,"minCon":10,"name":"binghe152","writeType":0,"switchType":1,"slaveThreshold":100,"dbType":"mysql","dbDriver":"native","heartbeat":"select user()","writeHost":[{"host":"binghe52","url":"192.168.175.152:3306","password":"root","user":"root"}]},{"balance":1,"maxCon":1000,"minCon":10,"name":"binghe153","writeType":0,"switchType":1,"slaveThreshold":100,"dbType":"mysql","dbDriver":"native","heartbeat":"select user()","writeHost":[{"host":"binghe53","url":"192.168.175.153:3306","password":"root","user":"root"}]},{"balance":1,"maxCon":1000,"minCon":10,"name":"binghe154","writeType":0,"switchType":1,"slaveThreshold":100,"dbType":"mysql","dbDriver":"native","heartbeat":"select user()","writeHost":[{"host":"binghe54","url":"192.168.175.154:3306","password":"root","user":"root"}]}]
```

The Mycat configuration has been synchronized to Zookeeper on binghe152.

binghe153:

```text
[root@binghe153 ~]# zkCli.sh
Connecting to localhost:2181
# ... N lines of output omitted ...
[zk: localhost:2181(CONNECTED) 0] get /mycat/mycat-cluster-1/schema/dataHost
[{"balance":1,"maxCon":1000,"minCon":10,"name":"binghe151","writeType":0,"switchType":1,"slaveThreshold":100,"dbType":"mysql","dbDriver":"native","heartbeat":"select user()","writeHost":[{"host":"binghe51","url":"192.168.175.151:3306","password":"root","user":"root"}]},{"balance":1,"maxCon":1000,"minCon":10,"name":"binghe152","writeType":0,"switchType":1,"slaveThreshold":100,"dbType":"mysql","dbDriver":"native","heartbeat":"select user()","writeHost":[{"host":"binghe52","url":"192.168.175.152:3306","password":"root","user":"root"}]},{"balance":1,"maxCon":1000,"minCon":10,"name":"binghe153","writeType":0,"switchType":1,"slaveThreshold":100,"dbType":"mysql","dbDriver":"native","heartbeat":"select user()","writeHost":[{"host":"binghe53","url":"192.168.175.153:3306","password":"root","user":"root"}]},{"balance":1,"maxCon":1000,"minCon":10,"name":"binghe154","writeType":0,"switchType":1,"slaveThreshold":100,"dbType":"mysql","dbDriver":"native","heartbeat":"select user()","writeHost":[{"host":"binghe54","url":"192.168.175.154:3306","password":"root","user":"root"}]}]
```

The Mycat configuration has been synchronized to Zookeeper on binghe153.

Configuring Mycat to Start from Zookeeper

1. Configure Mycat on binghe151

On binghe151, go to the conf directory under the Mycat installation directory and list its files:

```text
[root@binghe151 ~]# cd /usr/local/mycat/conf/
[root@binghe151 conf]# ll
total 108
-rwxrwxrwx 1 root root   92 Feb 26 17:10 autopartition-long.txt
-rwxrwxrwx 1 root root   51 Feb 26 17:10 auto-sharding-long.txt
-rwxrwxrwx 1 root root   67 Feb 26 17:10 auto-sharding-rang-mod.txt
-rwxrwxrwx 1 root root  340 Feb 26 17:10 cacheservice.properties
-rwxrwxrwx 1 root root 3338 Feb 26 17:10 dbseq.sql
-rwxrwxrwx 1 root root 3532 Feb 26 17:10 dbseq - utf8mb4.sql
-rw-r--r-- 1 root root   86 Mar  1 22:37 dnindex.properties
-rwxrwxrwx 1 root root  446 Feb 26 17:10 ehcache.xml
-rwxrwxrwx 1 root root 2454 Feb 26 17:10 index_to_charset.properties
-rwxrwxrwx 1 root root 1285 Feb 26 17:10 log4j2.xml
-rwxrwxrwx 1 root root  183 Feb 26 17:10 migrateTables.properties
-rwxrwxrwx 1 root root  271 Feb 26 17:10 myid.properties
-rwxrwxrwx 1 root root   16 Feb 26 17:10 partition-hash-int.txt
-rwxrwxrwx 1 root root  108 Feb 26 17:10 partition-range-mod.txt
-rwxrwxrwx 1 root root  988 Mar  1 16:59 rule.xml
-rwxrwxrwx 1 root root 3883 Mar  3 23:59 schema.xml
-rwxrwxrwx 1 root root  440 Feb 26 17:10 sequence_conf.properties
-rwxrwxrwx 1 root root   84 Mar  3 23:52 sequence_db_conf.properties
-rwxrwxrwx 1 root root   29 Feb 26 17:10 sequence_distributed_conf.properties
-rwxrwxrwx 1 root root   28 Feb 26 17:10 sequence_http_conf.properties
-rwxrwxrwx 1 root root   53 Feb 26 17:10 sequence_time_conf.properties
-rwxrwxrwx 1 root root 2420 Mar  4 15:14 server.xml
-rwxrwxrwx 1 root root   18 Feb 26 17:10 sharding-by-enum.txt
-rwxrwxrwx 1 root root 4251 Feb 28 20:51 wrapper.conf
drwxrwxrwx 2 root root 4096 Feb 28 21:17 zkconf
drwxrwxrwx 2 root root 4096 Feb 28 21:17 zkdownload
```

The conf directory contains a myid.properties file. Edit it with vim:

```shell
vim myid.properties
```

After editing, myid.properties contains:

```properties
loadZk=true
zkURL=192.168.175.151:2181,192.168.175.152:2181,192.168.175.153:2181
clusterId=mycat-cluster-1
myid=mycat_151
clusterSize=2
clusterNodes=mycat_151,mycat_154
#server booster ; booster install on db same server,will reset all minCon to 2
type=server
boosterDataHosts=dataHost1
```

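The most important parameters are:

- loadZk: whether to load the configuration from Zookeeper (true: yes; false: no).

- zkURL: the Zookeeper connection addresses, separated by commas.

- clusterId: the ID of this Mycat cluster. It must match the name of the child node under /mycat in Zookeeper:

  ```text
  [zk: localhost:2181(CONNECTED) 1] ls /mycat
  [mycat-cluster-1]
  ```

- myid: the ID of this Mycat node. I name nodes with the prefix mycat_ followed by the last octet of the node's IP address.

- clusterSize: the number of Mycat nodes in the cluster. Mycat runs on binghe151 and binghe154, so the count is 2.

- clusterNodes: all Mycat nodes in the cluster, given by the IDs set in each node's myid and separated by commas. Here: mycat_151,mycat_154.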
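The parameters above have to agree with each other on every node. Below is a sketch of a pre-restart sanity check for myid.properties.

Its rules are inferred from the parameter descriptions, and they are assumptions, not checks Mycat is known to perform itself:

- loadZk is true and zkURL is set.
- myid appears in clusterNodes.
- clusterSize equals the number of clusterNodes.

The helper names are hypothetical, and the parser handles only simple key=value lines.

```python
# Sketch: sanity-check a Mycat myid.properties before restarting Mycat.
# The rules are inferred from the parameter descriptions (assumptions).

SAMPLE_154 = """loadZk=true
zkURL=192.168.175.151:2181,192.168.175.152:2181,192.168.175.153:2181
clusterId=mycat-cluster-1
myid=mycat_154
clusterSize=2
clusterNodes=mycat_151,mycat_154
#server booster ; booster install on db same server,will reset all minCon to 2
type=server
boosterDataHosts=dataHost1
"""


def parse_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def check_myid(props: dict) -> list:
    """Return a list of problems found (an empty list means none)."""
    problems = []
    nodes = [n.strip() for n in props.get("clusterNodes", "").split(",") if n.strip()]
    if props.get("loadZk") != "true":
        problems.append("loadZk is not true; Zookeeper config will not be loaded")
    if not props.get("zkURL"):
        problems.append("zkURL is empty")
    if props.get("myid") not in nodes:
        problems.append("myid is not listed in clusterNodes")
    if props.get("clusterSize") != str(len(nodes)):
        problems.append("clusterSize does not match the number of clusterNodes")
    return problems


if __name__ == "__main__":
    print(check_myid(parse_properties(SAMPLE_154)) or "no problems found")
```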

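2. Install a fresh Mycat on binghe154

On binghe154, download and install the same Mycat version as on binghe151, and extract it to /usr/local/mycat.

Alternatively, copy the Mycat installation directory from binghe151 to binghe154 with the following command on binghe151:

```shell
[root@binghe151 ~]# scp -r /usr/local/mycat binghe154:/usr/local
```

Note: remember to configure Mycat's environment variables on binghe154.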

3. Modify the Mycat configuration on binghe154

On binghe154, edit myid.properties in the conf directory under the Mycat installation directory:

```shell
vim /usr/local/mycat/conf/myid.properties
```

After the change, myid.properties contains:

```properties
loadZk=true
zkURL=192.168.175.151:2181,192.168.175.152:2181,192.168.175.153:2181
clusterId=mycat-cluster-1
myid=mycat_154
clusterSize=2
clusterNodes=mycat_151,mycat_154
#server booster ; booster install on db same server,will reset all minCon to 2
type=server
boosterDataHosts=dataHost1
```

4. Restart Mycat

Restart Mycat on binghe151 and on binghe154.

Note: restart Mycat on binghe151 first, then on binghe154.

binghe151:

```text
[root@binghe151 ~]# mycat restart
Stopping Mycat-server...
Stopped Mycat-server.
Starting Mycat-server...
```

binghe154:

```text
[root@binghe154 ~]# mycat restart
Stopping Mycat-server...
Stopped Mycat-server.
Starting Mycat-server...
```

Check the Mycat startup log on binghe151 and binghe154:

```text
STATUS | wrapper  | 2020/03/08 21:08:15 | <-- Wrapper Stopped
STATUS | wrapper  | 2020/03/08 21:08:15 | --> Wrapper Started as Daemon
STATUS | wrapper  | 2020/03/08 21:08:15 | Launching a JVM...
INFO   | jvm 1    | 2020/03/08 21:08:16 | Wrapper (Version 3.2.3) http://wrapper.tanukisoftware.org
INFO   | jvm 1    | 2020/03/08 21:08:16 |   Copyright 1999-2006 Tanuki Software, Inc.  All Rights Reserved.
INFO   | jvm 1    | 2020/03/08 21:08:16 |
INFO   | jvm 1    | 2020/03/08 21:08:28 | MyCAT Server startup successfully. see logs in logs/mycat.log
```

The log shows that Mycat restarted successfully.

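After restarting Mycat on binghe151 first and then on binghe154, four files in binghe154's conf directory are identical to those on binghe151: schema.xml, server.xml, rule.xml and sequence_db_conf.properties. This is because the Mycat on binghe154 read its configuration from Zookeeper.

From now on, we only need to change the Mycat configuration stored in Zookeeper. The changes are synchronized to Mycat automatically, which keeps the configuration consistent across all Mycat nodes.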
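A quick way to confirm that two Mycat nodes hold identical copies of the synchronized files is to compare content hashes. Below is a sketch that assumes binghe154's conf directory has first been copied locally (for example with scp) to a path of your choosing. The script and function names are hypothetical.

```python
import hashlib
import sys
from pathlib import Path

# The four files copied into zkconf and pushed to Zookeeper in this article.
SYNCED_FILES = ("schema.xml", "server.xml", "rule.xml", "sequence_db_conf.properties")


def digests(conf_dir: str) -> dict:
    """Return {file name: SHA-256 hex digest} for the synced files in conf_dir."""
    base = Path(conf_dir)
    return {name: hashlib.sha256((base / name).read_bytes()).hexdigest()
            for name in SYNCED_FILES}


def differing_files(conf_a: str, conf_b: str) -> list:
    """Return the synced files whose contents differ between two conf dirs."""
    a, b = digests(conf_a), digests(conf_b)
    return [name for name in SYNCED_FILES if a[name] != b[name]]


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python compare_conf.py /usr/local/mycat/conf /tmp/conf_from_154
    diff = differing_files(sys.argv[1], sys.argv[2])
    print("identical" if not diff else "differ: " + ", ".join(diff))
```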

Configuring the Virtual IP

Configure the virtual IP (VIP) on both binghe151 and binghe154:

```shell
ifconfig eth0:1 192.168.175.110 broadcast 192.168.175.255 netmask 255.255.255.0 up
route add -host 192.168.175.110 dev eth0:1
```

The result after configuring the VIP, using binghe151 as an example:

```text
[root@binghe151 ~]# ifconfig
eth0      Link encap:Ethernet  HWaddr 00:0C:29:10:A1:45
          inet addr:192.168.175.151  Bcast:192.168.175.255  Mask:255.255.255.0
          inet6 addr: fe80::20c:29ff:fe10:a145/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:116766 errors:0 dropped:0 overruns:0 frame:0
          TX packets:85230 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:25559422 (24.3 MiB)  TX bytes:55997016 (53.4 MiB)

eth0:1    Link encap:Ethernet  HWaddr 00:0C:29:10:A1:45
          inet addr:192.168.175.110  Bcast:192.168.175.255  Mask:255.255.255.0
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING  MTU:65536  Metric:1
          RX packets:51102 errors:0 dropped:0 overruns:0 frame:0
          TX packets:51102 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:2934009 (2.7 MiB)  TX bytes:2934009 (2.7 MiB)
```

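Note: a VIP added from the command line disappears when the server reboots. It is best to put the commands in a script file, for example /usr/local/script/vip.sh:

```shell
mkdir /usr/local/script
vim /usr/local/script/vip.sh
```

The file contains:

```shell
#!/bin/bash
# Re-create the VIP 192.168.175.110 on the eth0:1 alias at boot
ifconfig eth0:1 192.168.175.110 broadcast 192.168.175.255 netmask 255.255.255.0 up
route add -host 192.168.175.110 dev eth0:1
```

Next, make the script executable and add it to the server's startup items:

```shell
chmod +x /usr/local/script/vip.sh
echo /usr/local/script/vip.sh >> /etc/rc.d/rc.local
```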

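Configuring IP Forwarding

On binghe151 and binghe154, enable kernel IP forwarding by editing /etc/sysctl.conf:

```shell
vim /etc/sysctl.conf
```

Find the following line:

```text
net.ipv4.ip_forward = 0
```

Change it to:

```text
net.ipv4.ip_forward = 1
```

Save, exit vim and apply the setting:

```shell
sysctl -p
```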

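Installing and Configuring the xinetd Service

On the servers where HAProxy is installed, binghe151 and binghe154, we install xinetd so that it serves the Mycat status check on port 48700.

(1) Install xinetd:

```shell
yum install xinetd -y
```

(2) Edit /etc/xinetd.conf:

```shell
vim /etc/xinetd.conf
```

Check whether the file contains the following line:

```text
includedir /etc/xinetd.d
```

If the line is missing, add it to /etc/xinetd.conf; if it is already present, leave the file unchanged.

(3) Create the /etc/xinetd.d directory:

```shell
mkdir /etc/xinetd.d
```

Note: if /etc/xinetd.d already exists, mkdir reports the following error:

```text
mkdir: cannot create directory `/etc/xinetd.d': File exists
```

You can safely ignore it.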

(4) Under /etc/xinetd.d, add mycat_status, the configuration file for the Mycat status-check service:

```shell
touch /etc/xinetd.d/mycat_status
```

(5) Edit mycat_status:

```shell
vim /etc/xinetd.d/mycat_status
```

After editing, mycat_status contains:

```text
service mycat_status
{
    flags           = REUSE
    socket_type     = stream
    port            = 48700
    wait            = no
    user            = root
    server          = /usr/local/bin/mycat_check.sh
    log_on_failure  += USERID
    disable         = no
}
```

Some of the xinetd parameters:

- socket_type: how packets are handled; stream means TCP.

- port: the port xinetd listens on for this service.

- wait: no means xinetd does not wait, so the service runs in multithreaded mode.

- user: the user the service runs as.

- server: the service script to launch.

- log_on_failure: what to log when a connection fails.

- disable: must be set to no for the service to be enabled.

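(6) Add the mycat_check.sh service script under /usr/local/bin:

```shell
touch /usr/local/bin/mycat_check.sh
```

(7) Edit /usr/local/bin/mycat_check.sh:

```shell
vim /usr/local/bin/mycat_check.sh
```

After editing, the file contains:

```bash
#!/bin/bash
# Count lines of "mycat status" output that contain "not running" (0 means Mycat is up)
mycat=`/usr/local/mycat/bin/mycat status | grep 'not running' | wc -l`
if [ "$mycat" = "0" ]; then
    # Mycat is running: report healthy to HAProxy's HTTP check
    /bin/echo -e "HTTP/1.1 200 OK\r\n"
else
    # Mycat is down: report unhealthy and try to start it again
    /bin/echo -e "HTTP/1.1 503 Service Unavailable\r\n"
    /usr/local/mycat/bin/mycat start
fi
```

Make mycat_check.sh executable:

```shell
chmod a+x /usr/local/bin/mycat_check.sh
```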

(8) Edit /etc/services:

```shell
vim /etc/services
```

Append the following line at the end of the file:

```text
mycat_status  48700/tcp        # mycat_status
```

The port must be the same as the one configured in /etc/xinetd.d/mycat_status.

(9) Restart xinetd:

```shell
service xinetd restart
```

(10) Check whether the mycat_status service has started:

binghe151:

```text
[root@binghe151 ~]# netstat -antup|grep 48700
tcp    0   0 :::48700     :::*      LISTEN   2776/xinetd
```

binghe154:

```text
[root@binghe154 ~]# netstat -antup|grep 48700
tcp    0   0 :::48700     :::*      LISTEN   6654/xinetd
```

The mycat_status service has started on both servers.

At this point xinetd is installed and configured, which means the Mycat status-check service is in place.
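HAProxy, configured in the next section, decides whether a Mycat node is healthy by sending an HTTP request to port 48700. It then reads the status line that mycat_check.sh prints.

Below is a sketch of an equivalent manual probe. It assumes the request line used by the option httpchk directive below (OPTIONS * HTTP/1.1 with a Host header). The function name is hypothetical.

```python
import socket

# Same request HAProxy sends with: option httpchk OPTIONS * HTTP/1.1\r\nHost:\ www
REQUEST = b"OPTIONS * HTTP/1.1\r\nHost: www\r\n\r\n"


def mycat_healthy(host: str, port: int = 48700, timeout: float = 3.0) -> bool:
    """Return True if the xinetd check on host:port answers with status 200."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(REQUEST)
        reply = b""
        # Read until the end of the status line or until the peer closes.
        while b"\n" not in reply:
            try:
                chunk = sock.recv(1024)
            except ConnectionResetError:
                break  # the check script may exit without reading the request
            if not chunk:
                break
            reply += chunk
    status_line = reply.split(b"\n", 1)[0].decode("ascii", errors="replace")
    parts = status_line.split()  # e.g. ["HTTP/1.1", "200", "OK"]
    return len(parts) >= 2 and parts[1] == "200"
```

For example, `mycat_healthy("192.168.175.151")` should return True while Mycat runs on binghe151. If it returns False, mycat_check.sh has reported 503 and tried to start Mycat.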

Installing and Configuring HAProxy

Install HAProxy directly on binghe151 and binghe154:

```shell
yum install haproxy -y
```

After installation, configure HAProxy. Its configuration directory is /etc/haproxy; list its contents:

```text
[root@binghe151 ~]# ll /etc/haproxy/
total 4
-rw-r--r-- 1 root root 3142 Oct 21  2016 haproxy.cfg
```

The directory contains a haproxy.cfg file. Modify it as follows:

```text
global
    log         127.0.0.1 local2
    chroot      /var/lib/haproxy
    pidfile     /var/run/haproxy.pid
    maxconn     4000
    user        haproxy
    group       haproxy
    daemon
    stats socket /var/lib/haproxy/stats

defaults
    mode                    http
    log                     global
    option                  httplog
    option                  dontlognull
    option                  http-server-close
    option                  redispatch
    retries                 3
    timeout http-request    10s
    timeout queue           1m
    timeout connect         10s
    timeout client          1m
    timeout server          1m
    timeout http-keep-alive 10s
    timeout check           10s
    maxconn                 3000

# HAProxy statistics page: http://<host>:48800/admin-status
listen admin_status
    bind 0.0.0.0:48800
    stats uri /admin-status
    stats auth admin:admin

# Clients connect on port 3366; traffic is balanced across port 3307 on both
# Mycat nodes, health-checked through the xinetd mycat_status service (48700)
listen allmycat_service
    bind 0.0.0.0:3366
    mode tcp
    option tcplog
    option httpchk OPTIONS * HTTP/1.1\r\nHost:\ www
    balance roundrobin
    server mycat_151 192.168.175.151:3307 check port 48700 inter 5s rise 2 fall 3
    server mycat_154 192.168.175.154:3307 check port 48700 inter 5s rise 2 fall 3

# Port 3377 forwards to port 3308 on both Mycat nodes, with the same health check
listen allmycat_admin
    bind 0.0.0.0:3377
    mode tcp
    option tcplog
    option httpchk OPTIONS * HTTP/1.1\r\nHost:\ www
    balance roundrobin
    server mycat_151 192.168.175.151:3308 check port 48700 inter 5s rise 2 fall 3
    server mycat_154 192.168.175.154:3308 check port 48700 inter 5s rise 2 fall 3
```
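The `check port 48700 inter 5s rise 2 fall 3` options control how quickly HAProxy reacts when a Mycat node goes down or comes back. The shell sketch below is a rough calculation of those intervals. The helper name `transition_seconds` is hypothetical.

```bash
#!/usr/bin/env bash
# Rough timing implied by "check ... inter 5s rise 2 fall 3" (a sketch).
# HAProxy runs one health check every `inter`; a server is marked DOWN after
# `fall` consecutive failures and UP again after `rise` consecutive successes.
# Check timeouts ("timeout check 10s") are ignored, so real times can be longer.
transition_seconds() {
  local inter="$1" count="$2"
  echo $(( inter * count ))
}

inter=5 rise=2 fall=3
echo "Mycat node marked DOWN after about $(transition_seconds "$inter" "$fall")s of failed checks"
echo "Mycat node marked UP again after about $(transition_seconds "$inter" "$rise")s of passing checks"
```

With the values above, a failed Mycat node leaves the rotation after about 15 seconds and rejoins about 10 seconds after it recovers. Before starting HAProxy, you can also validate the file with `haproxy -c -f /etc/haproxy/haproxy.cfg`. This checks the configuration and exits without binding any ports.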

Next, start HAProxy on both binghe151 and binghe154:

```shell
haproxy -f /etc/haproxy/haproxy.cfg
```

Now connect to Mycat through HAProxy with the mysql client. HAProxy binds 0.0.0.0:3366, so at this stage you can reach it through either node's own IP address. The command below uses the virtual IP 192.168.175.110, which only becomes reachable after Keepalived is configured in the next section.

```shell
[root@binghe151 ~]# mysql -umycat -pmycat -h192.168.175.110 -P3366 --default-auth=mysql_native_password
mysql: [Warning] Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 2
Server version: 5.6.29-mycat-1.6.7.4-release-20200228205020 MyCat Server (OpenCloudDB)

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>
```

The connection to Mycat succeeds.

Installing Keepalived

1. Install and configure Keepalived

Install Keepalived on binghe151 and binghe154 with the following command:

```shell
yum install keepalived -y
```

Installation creates a keepalived directory under /etc. Next, configure the keepalived.conf file in /etc/keepalived:

```shell
vim /etc/keepalived/keepalived.conf
```

Configuration on binghe151:

```text
! Configuration File for keepalived

vrrp_script chk_http_port {
    script "/etc/keepalived/check_haproxy.sh"
    interval 2
    weight 2
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51
    priority 150
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {
        chk_http_port
    }
    virtual_ipaddress {
        192.168.175.110 dev eth0 scope global
    }
}
```

Configuration on binghe154. It is the same except for a lower `priority` and `state BACKUP`, because Keepalived recognizes only MASTER and BACKUP as initial states:

```text
! Configuration File for keepalived

vrrp_script chk_http_port {
    script "/etc/keepalived/check_haproxy.sh"
    interval 2
    weight 2
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 51
    priority 120
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {
        chk_http_port
    }
    virtual_ipaddress {
        192.168.175.110 dev eth0 scope global
    }
}
```
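With `track_script`, Keepalived adjusts each node's VRRP priority according to the script's result:

- A positive `weight` is added while the script exits 0.
- A negative `weight` is subtracted while the script fails.
- With the default weight of 0, a failing script instead puts the instance into the FAULT state.

The sketch below models that rule so you can check the numbers in this configuration. `effective_priority` is a hypothetical helper. The weight of -40 in the last line is an illustrative value and is not part of the original setup.

```bash
#!/usr/bin/env bash
# Model of keepalived's track_script priority adjustment (a sketch, not
# keepalived code). Arguments: base priority, weight, script result
# (1 = script exited 0, 0 = script failing).
effective_priority() {
  local base="$1" weight="$2" script_ok="$3"
  if (( weight > 0 && script_ok == 1 )); then
    echo $(( base + weight ))      # bonus while the check passes
  elif (( weight < 0 && script_ok == 0 )); then
    echo $(( base + weight ))      # penalty while the check fails
  else
    echo "$base"
  fi
}

# Values from this configuration: weight 2, priorities 150 and 120.
echo "weight 2:   binghe151(failing)=$(effective_priority 150 2 0) binghe154(ok)=$(effective_priority 120 2 1)"
# Hypothetical negative weight, for comparison.
echo "weight -40: binghe151(failing)=$(effective_priority 150 -40 0) binghe154(ok)=$(effective_priority 120 -40 1)"
```

With weight 2, binghe151 still outranks binghe154 (150 against 122) even while its check is failing. The weight alone therefore never moves the VIP. A negative weight larger than the 30-point gap (110 against 120 in the second line) would.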

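2. Write the HAProxy check script

Next, create the script check_haproxy.sh in /etc/keepalived on both binghe151 and binghe154. The script works as follows:

- If HAProxy is not running, it tries to start it.
- If HAProxy still is not running, it stops Keepalived so that the virtual IP moves to the other node.
- It exits 0 only when HAProxy is running, because Keepalived treats exit status 0 as "check passed".

The script content is as follows:

```bash
#!/bin/bash
STARTHAPROXY="/usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg"
STOPKEEPALIVED="/etc/init.d/keepalived stop"
# On systemd-based systems use instead:
#STOPKEEPALIVED="/usr/bin/systemctl stop keepalived"
LOGFILE="/var/log/keepalived-haproxy-state.log"

echo "[check_haproxy status]" >> $LOGFILE
date >> $LOGFILE

# Number of running haproxy processes
A=`ps -C haproxy --no-header | wc -l`
if [ $A -eq 0 ]; then
    # HAProxy is down: try to start it and give it time to come up
    echo $STARTHAPROXY >> $LOGFILE
    $STARTHAPROXY >> $LOGFILE 2>&1
    sleep 5
fi

if [ `ps -C haproxy --no-header | wc -l` -eq 0 ]; then
    # Still down: stop Keepalived so the VIP fails over, and report failure
    echo $STOPKEEPALIVED >> $LOGFILE
    $STOPKEEPALIVED >> $LOGFILE 2>&1
    exit 1
else
    exit 0
fi
```

Make check_haproxy.sh executable with the following command:

```shell
chmod a+x /etc/keepalived/check_haproxy.sh
```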

3. Start Keepalived

With the configuration complete, start Keepalived on both binghe151 and binghe154:

```shell
/etc/init.d/keepalived start
```

Check whether Keepalived has started.

On binghe151:

```shell
[root@binghe151 ~]# ps -ef | grep keepalived
root 1221 1 0 20:06 ? 00:00:00 keepalived -D
root 1222 1221 0 20:06 ? 00:00:00 keepalived -D
root 1223 1221 0 20:06 ? 00:00:02 keepalived -D
root 93290 3787 0 21:42 pts/0 00:00:00 grep keepalived
```

On binghe154:

```shell
[root@binghe154 ~]# ps -ef | grep keepalived
root 1224 1 0 20:06 ? 00:00:00 keepalived -D
root 1225 1224 0 20:06 ? 00:00:00 keepalived -D
root 1226 1224 0 20:06 ? 00:00:02 keepalived -D
root 94636 3798 0 21:43 pts/0 00:00:00 grep keepalived
```

Keepalived is running on both servers.

4. Verify the virtual IP bound by Keepalived

Next, check on each server whether Keepalived has bound the virtual IP.

On binghe151:

```shell
[root@binghe151 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:10:a1:45 brd ff:ff:ff:ff:ff:ff
    inet 192.168.175.151/24 brd 192.168.175.255 scope global eth0
    inet 192.168.175.110/32 scope global eth0
    inet 192.168.175.110/24 brd 192.168.175.255 scope global secondary eth0:1
    inet6 fe80::20c:29ff:fe10:a145/64 scope link
       valid_lft forever preferred_lft forever
```

The output contains the following line:

```text
inet 192.168.175.110/32 scope global eth0
```

This shows that Keepalived on binghe151 has bound the virtual IP 192.168.175.110. Keepalived adds the address as a /32 because no netmask is given in virtual_ipaddress.

On binghe154:

```shell
[root@binghe154 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:50:56:22:2a:75 brd ff:ff:ff:ff:ff:ff
    inet 192.168.175.154/24 brd 192.168.175.255 scope global eth0
    inet 192.168.175.110/24 brd 192.168.175.255 scope global secondary eth0:1
    inet6 fe80::250:56ff:fe22:2a75/64 scope link
       valid_lft forever preferred_lft forever
```

Keepalived on binghe154 has not bound the virtual IP: there is no 192.168.175.110/32 entry on eth0.
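You will repeat this check on several nodes, both now and during the failover tests. Look only for the /32 address that Keepalived adds, because both servers in this setup also carry a static 192.168.175.110/24 alias on eth0:1. A minimal sketch follows. `holds_vip` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Return 0 if the given (or live) "ip addr" output shows the VIP as the /32
# address that Keepalived adds; the static /24 alias on eth0:1 is ignored.
holds_vip() {
  local vip="$1" output="${2-}"
  # Fall back to the live interface list when no captured output is passed.
  [ -n "$output" ] || output=$(ip -4 addr show)
  grep -Fq "inet ${vip}/32 " <<<"$output"
}
```

On each node, `holds_vip 192.168.175.110 && echo "VIP is on $(hostname)"` prints a line only on the node that currently owns the virtual IP.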

5. Test virtual IP failover

To test whether the virtual IP fails over, first stop Keepalived on binghe151:

```shell
/etc/init.d/keepalived stop
```

Then check the virtual IP on binghe154:

```shell
[root@binghe154 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:50:56:22:2a:75 brd ff:ff:ff:ff:ff:ff
    inet 192.168.175.154/24 brd 192.168.175.255 scope global eth0
    inet 192.168.175.110/32 scope global eth0
    inet 192.168.175.110/24 brd 192.168.175.255 scope global secondary eth0:1
    inet6 fe80::250:56ff:fe22:2a75/64 scope link
       valid_lft forever preferred_lft forever
```

The output now contains this line:

```text
inet 192.168.175.110/32 scope global eth0
```

This means Keepalived on binghe154 has bound the virtual IP 192.168.175.110. The VIP has moved to binghe154.

6. Keepalived on binghe151 reclaims the virtual IP

Now start Keepalived on binghe151 again:

```shell
/etc/init.d/keepalived start
```

Once it is running, check the virtual IP bindings again.

On binghe151:

```shell
[root@binghe151 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:10:a1:45 brd ff:ff:ff:ff:ff:ff
    inet 192.168.175.151/24 brd 192.168.175.255 scope global eth0
    inet 192.168.175.110/32 scope global eth0
    inet 192.168.175.110/24 brd 192.168.175.255 scope global secondary eth0:1
    inet6 fe80::20c:29ff:fe10:a145/64 scope link
       valid_lft forever preferred_lft forever
```

On binghe154:

```shell
[root@binghe154 ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:50:56:22:2a:75 brd ff:ff:ff:ff:ff:ff
    inet 192.168.175.154/24 brd 192.168.175.255 scope global eth0
    inet 192.168.175.110/24 brd 192.168.175.255 scope global secondary eth0:1
    inet6 fe80::250:56ff:fe22:2a75/64 scope link
       valid_lft forever preferred_lft forever
```

Keepalived on binghe151 has a higher priority (150) than on binghe154 (120), and Keepalived preempts by default. As soon as Keepalived on binghe151 is running again, it reclaims the virtual IP.

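Configuring MySQL Master-Slave Replication

For simplicity, I set up master-slave replication between the MySQL instances on binghe154 and binghe155. Note that this is a one-master, one-slave setup. You can configure replication for MySQL on the other servers in the same way to suit your environment.

1. Edit the my.cnf file

Add the following settings to the [mysqld] section of my.cnf. The two servers differ only in server_id.

On binghe154:

```ini
[mysqld]
# Must be unique for every server taking part in replication
server_id = 154
log_bin = /data/mysql/log/bin_log/mysql-bin
binlog-ignore-db=mysql
binlog_format= mixed
sync_binlog=100
log_slave_updates = 1
binlog_cache_size=32m
max_binlog_cache_size=64m
max_binlog_size=512m
# On MySQL 8.0 this must match the value used when the data directory was initialized
lower_case_table_names = 1
relay_log = /data/mysql/log/bin_log/relay-bin
relay_log_index = /data/mysql/log/bin_log/relay-bin.index
master_info_repository=TABLE
relay-log-info-repository=TABLE
relay-log-recovery
```

On binghe155:

```ini
[mysqld]
# Must be unique for every server taking part in replication
server_id = 155
log_bin = /data/mysql/log/bin_log/mysql-bin
binlog-ignore-db=mysql
binlog_format= mixed
sync_binlog=100
log_slave_updates = 1
binlog_cache_size=32m
max_binlog_cache_size=64m
max_binlog_size=512m
# On MySQL 8.0 this must match the value used when the data directory was initialized
lower_case_table_names = 1
relay_log = /data/mysql/log/bin_log/relay-bin
relay_log_index = /data/mysql/log/bin_log/relay-bin.index
master_info_repository=TABLE
relay-log-info-repository=TABLE
relay-log-recovery
```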
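Replication requires the two servers to have different server_id values. If they are equal, the slave's I/O thread stops instead of replicating. The small sketch below compares the values in two copies of my.cnf. For example, you could first copy the slave's file over with `scp 192.168.175.155:/etc/my.cnf /tmp/my-155.cnf`, assuming my.cnf lives in /etc on your servers. The helper names `server_id_of` and `check_distinct_server_ids` are hypothetical.

```bash
#!/usr/bin/env bash
# Print the server_id (or server-id) value from a my.cnf-style file.
server_id_of() {
  awk -F'=' '
    { key = $1; gsub(/[[:space:]]/, "", key); gsub(/-/, "_", key) }
    key == "server_id" { val = $2; gsub(/[[:space:]]/, "", val); print val; exit }
  ' "$1"
}

# Fail if either file lacks server_id or both files use the same value.
check_distinct_server_ids() {
  local a b
  a=$(server_id_of "$1")
  b=$(server_id_of "$2")
  if [ -z "$a" ] || [ -z "$b" ]; then
    echo "server_id missing in $1 or $2" >&2
    return 2
  fi
  if [ "$a" = "$b" ]; then
    echo "duplicate server_id $a" >&2
    return 1
  fi
  echo "OK: server_id $a and $b differ"
}
```

Usage: `check_distinct_server_ids /etc/my.cnf /tmp/my-155.cnf` on binghe154 should report that 154 and 155 differ.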

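2. Synchronize MySQL data between the two servers

binghe154 holds only one database, customer_db. Export it with mysqldump:

```shell
[root@binghe154 ~]# mysqldump --master-data=2 --single-transaction -uroot -p --databases customer_db > binghe154.sql
Enter password:
```

Next, inspect binghe154.sql:

```shell
more binghe154.sql
```

In the file you will find the following line:

```sql
CHANGE MASTER TO MASTER_LOG_FILE='mysql-bin.000042', MASTER_LOG_POS=995;
```

This means the current binary log file on binghe154 is mysql-bin.000042 and the position within it is 995. With --master-data=2, mysqldump writes this statement as an SQL comment (prefixed with "-- "), so it is not executed when the dump is imported.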
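Copying the coordinates by hand is error-prone. You can instead extract them from the dump and turn them into the CHANGE MASTER statement used in step 4. The sketch below assumes the dump contains a CHANGE MASTER line in the format shown above, whether commented out or not. `binlog_coords` and `change_master_sql` are hypothetical helpers. The host, user and password arguments are the values used in this article.

```bash
#!/usr/bin/env bash
# Print "FILE POS" taken from the first CHANGE MASTER line in a dump file.
binlog_coords() {
  grep -m1 'CHANGE MASTER TO' "$1" |
    sed -nE "s/.*MASTER_LOG_FILE='([^']+)', *MASTER_LOG_POS=([0-9]+).*/\1 \2/p"
}

# Build the statement to run on the slave.
# Arguments: master host, replication user, password, dump file.
change_master_sql() {
  local host="$1" user="$2" pass="$3" file pos
  read -r file pos <<<"$(binlog_coords "$4")"
  if [ -z "$file" ] || [ -z "$pos" ]; then
    echo "no CHANGE MASTER line found in $4" >&2
    return 1
  fi
  printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_PORT=3306, MASTER_USER='%s', MASTER_PASSWORD='%s', MASTER_LOG_FILE='%s', MASTER_LOG_POS=%s;\n" \
    "$host" "$user" "$pass" "$file" "$pos"
}
```

Usage: `change_master_sql 192.168.175.154 repl repl123456 binghe154.sql` prints a single-line statement. Paste it into the mysql client on binghe155.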

Next, copy binghe154.sql to binghe155:

```shell
scp binghe154.sql 192.168.175.155:/usr/local/src
```

On binghe155, import binghe154.sql into MySQL:

```shell
mysql -uroot -p < /usr/local/src/binghe154.sql
```

The data on the slave is now initialized.

3. Create the replication account

In MySQL on binghe154, create the account used for replication:

```sql
mysql> CREATE USER 'repl'@'192.168.175.%' IDENTIFIED BY 'repl123456';
Query OK, 0 rows affected (0.01 sec)

mysql> ALTER USER 'repl'@'192.168.175.%' IDENTIFIED WITH mysql_native_password BY 'repl123456';
Query OK, 0 rows affected (0.00 sec)

mysql> GRANT REPLICATION SLAVE ON *.* TO 'repl'@'192.168.175.%';
Query OK, 0 rows affected (0.00 sec)

mysql> FLUSH PRIVILEGES;
Query OK, 0 rows affected (0.00 sec)
```

4. Configure the replication link

Log in to MySQL on binghe155 and configure the replication link:

```sql
mysql> change master to
    -> master_host='192.168.175.154',
    -> master_port=3306,
    -> master_user='repl',
    -> master_password='repl123456',
    -> MASTER_LOG_FILE='mysql-bin.000042',
    -> MASTER_LOG_POS=995;
```

The values MASTER_LOG_FILE='mysql-bin.000042' and MASTER_LOG_POS=995 are the ones found in binghe154.sql.

5. Start the slave

Start the slave from the MySQL command line on binghe155:

```sql
mysql> start slave;
```

Check whether the slave has started:

```sql
mysql> SHOW slave STATUS \G
*************************** 1. row ***************************
               Slave_IO_State: Waiting for master to send event
                  Master_Host: 192.168.175.151
                  Master_User: binghe152
                  Master_Port: 3306
                Connect_Retry: 60
              Master_Log_File: mysql-bin.000007
          Read_Master_Log_Pos: 1360
               Relay_Log_File: relay-bin.000003
                Relay_Log_Pos: 322
        Relay_Master_Log_File: mysql-bin.000007
             Slave_IO_Running: Yes
            Slave_SQL_Running: Yes
... (remaining output omitted) ...
```

Both Slave_IO_Running and Slave_SQL_Running are Yes, which means MySQL master-slave replication is working. This sample output appears to have been captured in a different environment. With the CHANGE MASTER statement above, Master_Host should read 192.168.175.154 and Master_User should read repl.
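To check replication from a script (for example, from cron) rather than by eye, you can pull the two fields out of the `\G` output. A minimal sketch follows. It assumes the field names shown above. MySQL 8.0.22 and later also offer SHOW REPLICA STATUS with renamed fields such as Replica_IO_Running, which this sketch does not handle. `field_of` and `replication_ok` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Print the value of one "Name: value" field from SHOW SLAVE STATUS\G output.
field_of() {
  awk -v f="$1" -F': ' '{ k = $1; sub(/^[[:space:]]+/, "", k) } k == f { print $2; exit }' <<<"$2"
}

# Print a one-line summary; return 0 only if both replication threads say "Yes".
replication_ok() {
  local out="$1" io sql
  io=$(field_of Slave_IO_Running "$out")
  sql=$(field_of Slave_SQL_Running "$out")
  echo "IO=${io:-?} SQL=${sql:-?} Seconds_Behind_Master=$(field_of Seconds_Behind_Master "$out")"
  [ "$io" = "Yes" ] && [ "$sql" = "Yes" ]
}
```

On binghe155: `replication_ok "$(mysql -uroot -p -e 'SHOW SLAVE STATUS\G')"`. The non-zero exit status makes it easy to hook into an alerting job.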

Finally, do not forget to create, in MySQL on binghe155, the user that Mycat uses to connect:

```sql
CREATE USER 'mycat'@'192.168.175.%' IDENTIFIED BY 'mycat';
ALTER USER 'mycat'@'192.168.175.%' IDENTIFIED WITH mysql_native_password BY 'mycat';
GRANT SELECT, INSERT, UPDATE, DELETE,EXECUTE ON *.* TO 'mycat'@'192.168.175.%';
FLUSH PRIVILEGES;
```

Configuring Mycat Read/Write Splitting

Modify Mycat's schema.xml to split reads and writes between MySQL on binghe154 and binghe155. Edit schema.xml in the conf/zkconf directory under the Mycat installation directory so that it reads as follows:

```xml
<!DOCTYPE mycat:schema SYSTEM "schema.dtd">
<mycat:schema xmlns:mycat="http://io.mycat/">
    <schema name="shop" checkSQLschema="true" sqlMaxLimit="1000">
        <table name="order_master" dataNode="orderdb01,orderdb02,orderdb03,orderdb04" rule="order_master" primaryKey="order_id" autoIncrement="true">
            <childTable name="order_detail" joinKey="order_id" parentKey="order_id" primaryKey="order_detail_id" autoIncrement="true"/>
        </table>
        <table name="order_cart" dataNode="ordb" primaryKey="cart_id"/>
        <table name="order_customer_addr" dataNode="ordb" primaryKey="customer_addr_id"/>
        <table name="region_info" dataNode="ordb,prodb,custdb" primaryKey="region_id" type="global"/>
        <table name="serial" dataNode="ordb" primaryKey="id"/>
        <table name="shipping_info" dataNode="ordb" primaryKey="ship_id"/>
        <table name="warehouse_info" dataNode="ordb" primaryKey="w_id"/>
        <table name="warehouse_proudct" dataNode="ordb" primaryKey="wp_id"/>
        <table name="product_brand_info" dataNode="prodb" primaryKey="brand_id"/>
        <table name="product_category" dataNode="prodb" primaryKey="category_id"/>
        <table name="product_comment" dataNode="prodb" primaryKey="comment_id"/>
        <table name="product_info" dataNode="prodb" primaryKey="product_id"/>
        <table name="product_pic_info" dataNode="prodb" primaryKey="product_pic_id"/>
        <table name="product_supplier_info" dataNode="prodb" primaryKey="supplier_id"/>
        <table name="customer_balance_log" dataNode="custdb" primaryKey="balance_id"/>
        <table name="customer_inf" dataNode="custdb" primaryKey="customer_inf_id"/>
        <table name="customer_level_inf" dataNode="custdb" primaryKey="customer_level"/>
        <table name="customer_login" dataNode="custdb" primaryKey="customer_id"/>
        <table name="customer_login_log" dataNode="custdb" primaryKey="login_id"/>
        <table name="customer_point_log" dataNode="custdb" primaryKey="point_id"/>
    </schema>

    <dataNode name="mycat" dataHost="binghe151" database="mycat"/>
    <dataNode name="ordb" dataHost="binghe152" database="order_db"/>
    <dataNode name="prodb" dataHost="binghe153" database="product_db"/>
    <dataNode name="custdb" dataHost="binghe154" database="customer_db"/>
    <dataNode name="orderdb01" dataHost="binghe152" database="orderdb01"/>
    <dataNode name="orderdb02" dataHost="binghe152" database="orderdb02"/>
    <dataNode name="orderdb03" dataHost="binghe153" database="orderdb03"/>
    <dataNode name="orderdb04" dataHost="binghe153" database="orderdb04"/>

    <dataHost balance="1" maxCon="1000" minCon="10" name="binghe151" writeType="0" switchType="1" slaveThreshold="100" dbType="mysql" dbDriver="native">
        <heartbeat>select user()</heartbeat>
        <writeHost host="binghe51" url="192.168.175.151:3306" password="mycat" user="mycat"/>
    </dataHost>
    <dataHost balance="1" maxCon="1000" minCon="10" name="binghe152" writeType="0" switchType="1" slaveThreshold="100" dbType="mysql" dbDriver="native">
        <heartbeat>select user()</heartbeat>
        <writeHost host="binghe52" url="192.168.175.152:3306" password="mycat" user="mycat"/>
    </dataHost>
    <dataHost balance="1" maxCon="1000" minCon="10" name="binghe153" writeType="0" switchType="1" slaveThreshold="100" dbType="mysql" dbDriver="native">
        <heartbeat>select user()</heartbeat>
        <writeHost host="binghe53" url="192.168.175.153:3306" password="mycat" user="mycat"/>
    </dataHost>
    <dataHost balance="1" maxCon="1000" minCon="10" name="binghe154" writeType="0" switchType="1" slaveThreshold="100" dbType="mysql" dbDriver="native">
        <heartbeat>select user()</heartbeat>
        <!-- Writes go to binghe154; reads are balanced to its readHost binghe155 (balance="1").
             The second writeHost (binghe155) takes over writes if binghe154 fails (switchType="1"). -->
        <writeHost host="binghe54" url="192.168.175.154:3306" password="mycat" user="mycat">
            <readHost host="binghe55" url="192.168.175.155:3306" user="mycat" password="mycat"/>
        </writeHost>
        <writeHost host="binghe55" url="192.168.175.155:3306" password="mycat" user="mycat"/>
    </dataHost>
</mycat:schema>
```

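Save the file and exit vim. Then initialize the data in Zookeeper:

```shell
/usr/local/mycat/bin/init_zk_data.sh
```

Once this command succeeds, the configuration is automatically synchronized to conf/schema.xml in the Mycat installation directories on binghe151 and binghe154.

Next, restart the Mycat service on binghe151 and on binghe154:

```shell
mycat restart
```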

Accessing the High-Availability Environment

The high-availability environment is now fully configured. Applications should connect to the virtual IP bound by Keepalived (192.168.175.110) on the port HAProxy listens on (3366). For example, connect with the mysql client as follows:

```shell
[root@binghe151 ~]# mysql -umycat -pmycat -h192.168.175.110 -P3366 --default-auth=mysql_native_password
mysql: [Warning] Using a password on the command line interface can be insecure.
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 2
Server version: 5.6.29-mycat-1.6.7.4-release-20200228205020 MyCat Server (OpenCloudDB)

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;
+----------+
| DATABASE |
+----------+
| shop     |
+----------+
1 row in set (0.10 sec)

mysql> use shop;
Database changed
mysql> show tables;
+-----------------------+
| Tables in shop        |
+-----------------------+
| customer_balance_log  |
| customer_inf          |
| customer_level_inf    |
| customer_login        |
| customer_login_log    |
| customer_point_log    |
| order_cart            |
| order_customer_addr   |
| order_detail          |
| order_master          |
| product_brand_info    |
| product_category      |
| product_comment       |
| product_info          |
| product_pic_info      |
| product_supplier_info |
| region_info           |
| serial                |
| shipping_info         |
| warehouse_info        |
| warehouse_proudct     |
+-----------------------+
21 rows in set (0.00 sec)
```

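Here I have added read/write splitting only for MySQL on binghe154. You can configure replication and read/write splitting for MySQL on the other servers in the same way. With that, the environment as a whole provides high availability for HAProxy, Mycat, MySQL, Zookeeper and Keepalived.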

This article is republished from the WeChat official account 「冰河技术」. Please contact the 冰河技术 account for permission to republish it.