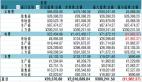

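Let's start with a screenshot of the result. No pics, no proof!

I previously wrote an article on scraping girls' profiles from a dating site. It mainly used selenium to simulate browser actions, waited for the dynamically loaded content, and then extracted the page data with xpath, but that approach is slow.

So today I'm adding a more efficient way to get the data. Nothing is simulated, every step is under our direct control, and we can get the data without opening the web page at all!

We do need to analyze the page first, though. Open http://www.lovewzly.com/jiaoyou.html, press F12, and switch to the Network tab.

After filter conditions are applied, the only part of the URL that changes is `page`, which increases by one for each page. If we open that URL directly in the browser, we get back a JSON string, so I can work on the JSON data directly and then store it!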
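To make the paging concrete, here is a minimal sketch of how such a URL could be assembled. It assumes Python 3's `urllib.parse` (the article's own code targets Python 2.7). `build_search_url` and the sample filter values are hypothetical and chosen only for illustration; the endpoint is the one used by the article's code.

```python
from urllib.parse import urlencode

# Endpoint taken from the article's code.
BASE_URL = 'http://www.lovewzly.com/api/user/pc/list/search'


def build_search_url(page, **filters):
    """Return the search URL for one page; only `page` changes between calls."""
    params = {'page': page}
    params.update(filters)
    return BASE_URL + '?' + urlencode(params)


# Hypothetical filter values, for illustration only.
first = build_search_url(1, gender=2, marry=1)
second = build_search_url(2, gender=2, marry=1)
print(first)
print(second)
```

The two printed URLs differ only in the `page` value, which is the pattern visible in the Network tab.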

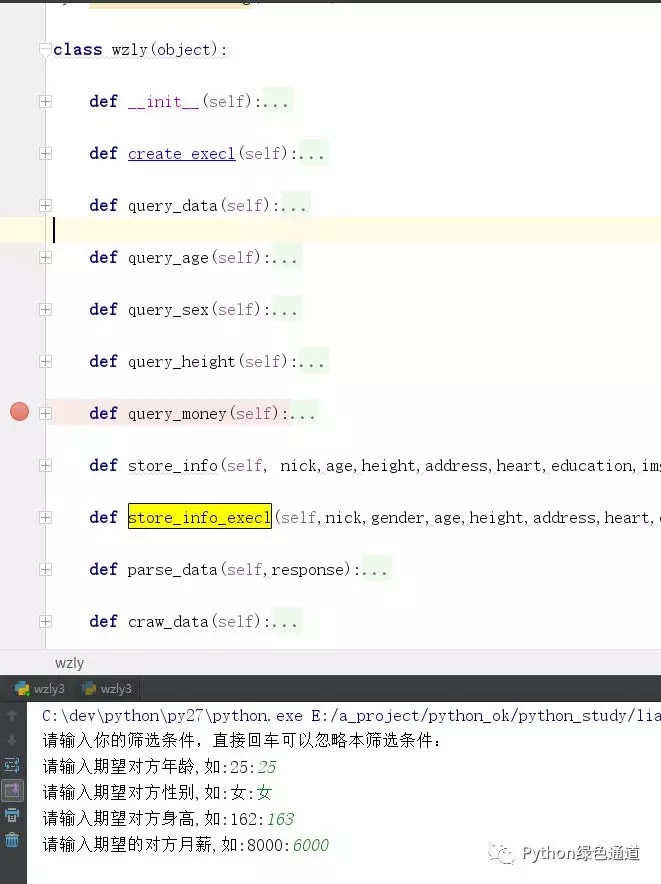

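Code structure diagram:

Workflow:

- headers: always include the anti-hotlinking Referer and a browser User-Agent. Writing them in from the start avoids problems later!

- Assemble the query conditions

- Remember to parse the response as JSON

- Extract the fields from the JSON data

- Save the extracted data to files or other storage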
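The first step, the headers, can be sketched as follows. This is a minimal example assuming Python 3's `urllib.request` (the article's code uses Python 2's `urllib2`, whose `Request` accepts the same `headers` argument). `make_request` is a hypothetical helper, and the User-Agent string is shortened for this sketch. Building the `Request` object does not send anything over the network.

```python
from urllib.request import Request

HEADERS = {
    # Referer tells the server the request came from the listing page
    # (the anti-hotlinking check mentioned in the workflow).
    'Referer': 'http://www.lovewzly.com/jiaoyou.html',
    # A browser-like User-Agent, shortened for this sketch.
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64)',
}


def make_request(url):
    """Attach the shared headers to a request without sending it."""
    return Request(url, headers=HEADERS)


req = make_request('http://www.lovewzly.com/api/user/pc/list/search?page=1')
print(req.get_header('Referer'))
```

Keeping the headers in one place means every paged request carries the same Referer and User-Agent.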

Main techniques practiced:

- requests + urllib

- Excel operations

- File operations

- Strings

- Exception handling

- Other basics

Requesting the data:

    import json
    import urllib
    import urllib2

    def craw_data(self):
        '''Fetch the search results page by page.'''
        headers = {
            'Referer': 'http://www.lovewzly.com/jiaoyou.html',
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.104 Safari/537.36 Core/1.53.4620.400 QQBrowser/9.7.13014.400'
        }
        page = 1
        while True:
            query_data = {
                'page': page,
                'gender': self.gender,
                'starage': self.stargage,
                'endage': self.endgage,
                'stratheight': self.startheight,
                'endheight': self.endheight,
                'marry': self.marry,
                'salary': self.salary,
            }
            url = 'http://www.lovewzly.com/api/user/pc/list/search?' + urllib.urlencode(query_data)
            print url
            req = urllib2.Request(url, headers=headers)
            response = urllib2.urlopen(req).read()
            # print response
            # Stop once a page comes back without records; otherwise the loop never ends.
            if not (json.loads(response).get('data') or {}).get('list'):
                print 'All data has been fetched'
                break
            self.parse_data(response)
            page += 1
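A paging loop like this needs a stop condition. The sketch below shows one way to structure it so it can be tried without touching the network. It assumes Python 3, and it assumes the end of the data is signalled by a missing or empty `list`, as the `persons is None` check in the parsing code suggests. `crawl_pages`, `fake_fetch`, and `max_pages` are hypothetical names introduced for illustration.

```python
import json


def crawl_pages(fetch, max_pages=50):
    """Call fetch(page) for page 1, 2, ... and collect records until a page is empty.

    `fetch` is any callable that returns the raw JSON text for a page number.
    `max_pages` is a safety cap so a misbehaving server cannot loop forever.
    """
    records = []
    for page in range(1, max_pages + 1):
        payload = json.loads(fetch(page))
        persons = (payload.get('data') or {}).get('list')
        if not persons:  # None or empty list: nothing left to fetch
            break
        records.extend(persons)
    return records


# A fake fetcher standing in for the real HTTP call: two pages, then nothing.
def fake_fetch(page):
    pages = {
        1: [{'username': 'a'}, {'username': 'b'}],
        2: [{'username': 'c'}],
    }
    return json.dumps({'data': {'list': pages.get(page)}})


print(len(crawl_pages(fake_fetch)))  # prints 3
```

Passing the fetcher in as an argument keeps the loop logic separate from the HTTP call, so the stop condition can be checked with canned data first.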

Field extraction:

    import json

    def parse_data(self, response):
        '''Parse one page of JSON and store every record in it.'''
        # Fall back to an empty dict in case `data` is missing or null.
        persons = (json.loads(response).get('data') or {}).get('list')
        if persons is None:
            print 'All data has been fetched'
            return
        for person in persons:
            nick = person.get('username')
            gender = person.get('gender')
            # Age relative to the hard-coded year 2018.
            age = 2018 - int(person.get('birthdayyear'))
            address = person.get('city')
            heart = person.get('monolog')
            height = person.get('height')
            img_url = person.get('avatar')
            education = person.get('education')
            print nick, age, height, address, heart, education
            self.store_info(nick, age, height, address, heart, education, img_url)
            self.store_info_execl(nick, age, height, address, heart, education, img_url)
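The extraction step can also be written as a pure function that is easy to test on a canned response. This is a sketch assuming Python 3; the JSON field names are the ones read by the article's code, while `extract_people`, `current_year`, and every value in `SAMPLE` are made up for illustration.

```python
import json

# Made-up response in the shape the article's code expects.
SAMPLE = json.dumps({
    'data': {
        'list': [
            {'username': 'demo_user', 'birthdayyear': '1993', 'city': 'Shenzhen',
             'height': '165', 'education': 'Bachelor', 'monolog': 'hello',
             'avatar': 'http://example.com/a.jpg'},
            {'username': 'no_year'},  # incomplete record: no birth year
        ]
    }
})


def extract_people(response_text, current_year=2018):
    """Turn the raw JSON text into a list of flat dicts, skipping bad records."""
    payload = json.loads(response_text)
    persons = (payload.get('data') or {}).get('list') or []
    people = []
    for person in persons:
        try:
            age = current_year - int(person.get('birthdayyear'))
        except (TypeError, ValueError):
            continue  # birth year missing or not a number
        people.append({
            'nick': person.get('username'),
            'age': age,
            'height': person.get('height'),
            'address': person.get('city'),
            'heart': person.get('monolog'),
            'education': person.get('education'),
            'img_url': person.get('avatar'),
        })
    return people


for p in extract_people(SAMPLE):
    print(p['nick'], p['age'], p['address'])
```

Skipping records without a usable birth year is a design choice of this sketch; without some guard, a single incomplete record would abort the whole page with an exception.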

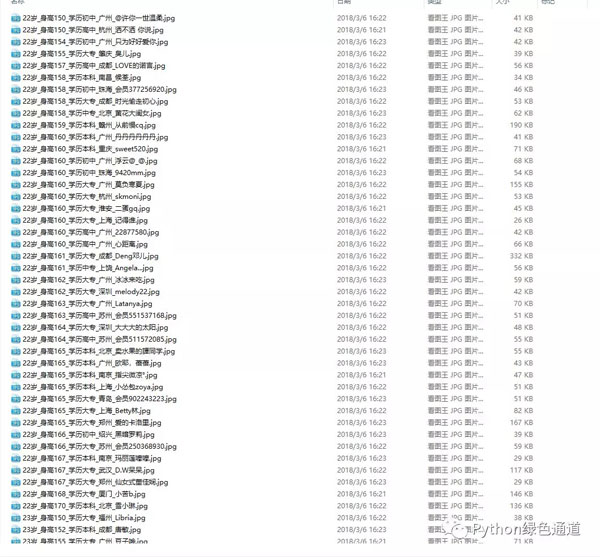

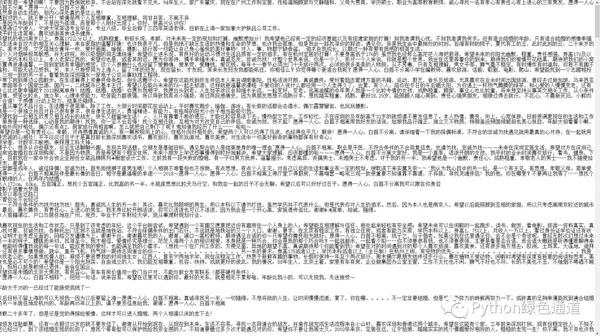

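Saving files:

    # -*- coding: utf-8 -*-
    import io
    import os
    import urllib

    def store_info(self, nick, age, height, address, heart, education, img_url):
        '''Save the photo and the person's inner monologue.'''
        # Folder labels: under 22, 22-28, 28-32, 32 and over.
        if age < 22:
            tag = u'22岁以下'
        elif age < 28:
            tag = u'22-28岁'
        elif age < 32:
            tag = u'28-32岁'
        else:
            tag = u'32岁以上'
        # File name pattern: <age>_height<height>_education<education>_<city>_<nick>.jpg
        filename = u'{}岁_身高{}_学历{}_{}_{}.jpg'.format(age, height, education, address, nick)
        try:
            # Build the target directory for this age group.
            image_path = u'E:/store/pic/{}'.format(tag)
            # Create the directory if it does not exist yet.
            if not os.path.exists(image_path):
                os.makedirs(image_path)
                print image_path + u' created'
            # Images must be written in binary mode.
            with open(image_path + '/' + filename, 'wb') as f:
                f.write(urllib.urlopen(img_url).read())
            txt_path = u'E:/store/txt'
            txt_name = u'内心独白.txt'  # "inner monologue"
            # Create the directory if it does not exist yet.
            if not os.path.exists(txt_path):
                os.makedirs(txt_path)
                print txt_path + u' created'
            # Append the monologue as UTF-8 text, one entry per line.
            with io.open(txt_path + '/' + txt_name, 'a', encoding='utf-8') as f:
                f.write((heart or u'') + u'\n')
        except Exception as e:
            # Report the failure instead of silently discarding it.
            print e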
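The grouping and file-writing logic can be split into small helpers that are easy to check in isolation. This is a sketch assuming Python 3; `age_tag`, `safe_filename`, `append_monologue`, the English folder labels, and the `monologues.txt` name are hypothetical stand-ins for the names used in the article's code. `safe_filename` is an addition of this sketch: nicknames come from user input and may contain characters that Windows does not allow in file names.

```python
import os
import re
import tempfile


def age_tag(age):
    """Map an age to the folder label used for grouping photos."""
    if age < 22:
        return 'under-22'
    if age < 28:
        return '22-28'
    if age < 32:
        return '28-32'
    return '32-plus'


def safe_filename(name):
    """Replace characters that Windows forbids in file names with '_'."""
    return re.sub(r'[\\/:*?"<>|]', '_', name)


def append_monologue(folder, text):
    """Append one monologue as a UTF-8 line, creating the folder if needed."""
    os.makedirs(folder, exist_ok=True)
    path = os.path.join(folder, 'monologues.txt')
    with open(path, 'a', encoding='utf-8') as f:
        f.write((text or '') + '\n')
    return path


print(age_tag(25), safe_filename('a/b:c.jpg'))  # prints: 22-28 a_b_c.jpg

# Write into a temporary directory so the sketch leaves nothing behind.
with tempfile.TemporaryDirectory() as tmp:
    path = append_monologue(os.path.join(tmp, 'txt'), 'hello')
    print(os.path.basename(path))
```

Opening the text file with an explicit `encoding='utf-8'` avoids depending on the platform's default encoding when the monologues contain Chinese text.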

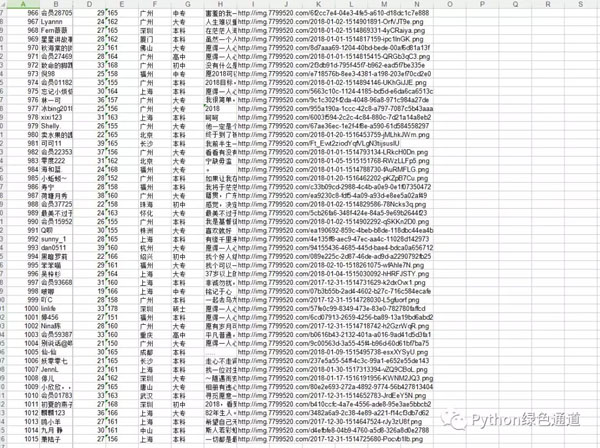

Excel operations:

    def store_info_execl(self, nick, age, height, address, heart, education, img_url):
        '''Append one record as a row in the Excel sheet.'''
        person = [
            self.count,  # doubles as the record number
            nick,
            u'女' if self.gender == 2 else u'男',  # female / male
            age,
            height,
            address,
            education,
            heart,
            img_url,
        ]
        for j, value in enumerate(person):
            self.sheetInfo.write(self.count, j, value)
        # Assuming self.f is an xlwt.Workbook: xlwt writes the legacy .xls format,
        # so the file is saved with an .xls extension.
        self.f.save(u'我主良缘.xls')
        self.count += 1
        print 'Inserted {} records'.format(self.count)
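If no Excel library is at hand, the same rows can be written as CSV with the standard library. Note that this swaps the spreadsheet format for CSV and is not what the article's code does. The sketch assumes Python 3; `write_rows`, `HEADER`, and the sample record are hypothetical.

```python
import csv
import io

# Column names mirroring the order used for the Excel rows.
HEADER = ['no', 'nick', 'gender', 'age', 'height', 'address',
          'education', 'monologue', 'img_url']


def write_rows(stream, people):
    """Write a header plus one numbered row per person to a text stream."""
    writer = csv.writer(stream)
    writer.writerow(HEADER)
    for count, person in enumerate(people, start=1):
        writer.writerow([count] + list(person))
    return len(people)


# Made-up record, in the same column order as HEADER minus the number.
people = [('demo_user', 'F', 25, '165', 'Shenzhen', 'Bachelor', 'hello',
           'http://example.com/a.jpg')]
buffer = io.StringIO()
print(write_rows(buffer, people))
print(buffer.getvalue())
```

To write to a real file instead of a `StringIO`, open it with `open(path, 'w', newline='', encoding='utf-8-sig')`; the BOM added by `utf-8-sig` generally helps Excel detect the encoding of Chinese text.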

And there it is, on display!

Source code: https://github.com/pythonchannel/python27/blob/master/test/meizhi.py