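This article is reposted from the 51CTO blog of blogger 北城书生. If you have any questions, you are welcome to visit the blogger's page to join the discussion.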

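Preface

You are probably familiar with the term "cloud host": you pick a host configuration on a web page, quickly get a virtual machine of your own, and log in to it, all while making far better use of physical resources. But how does this actually work? This article walks through a hands-on deployment of a private cloud with OpenStack Icehouse.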

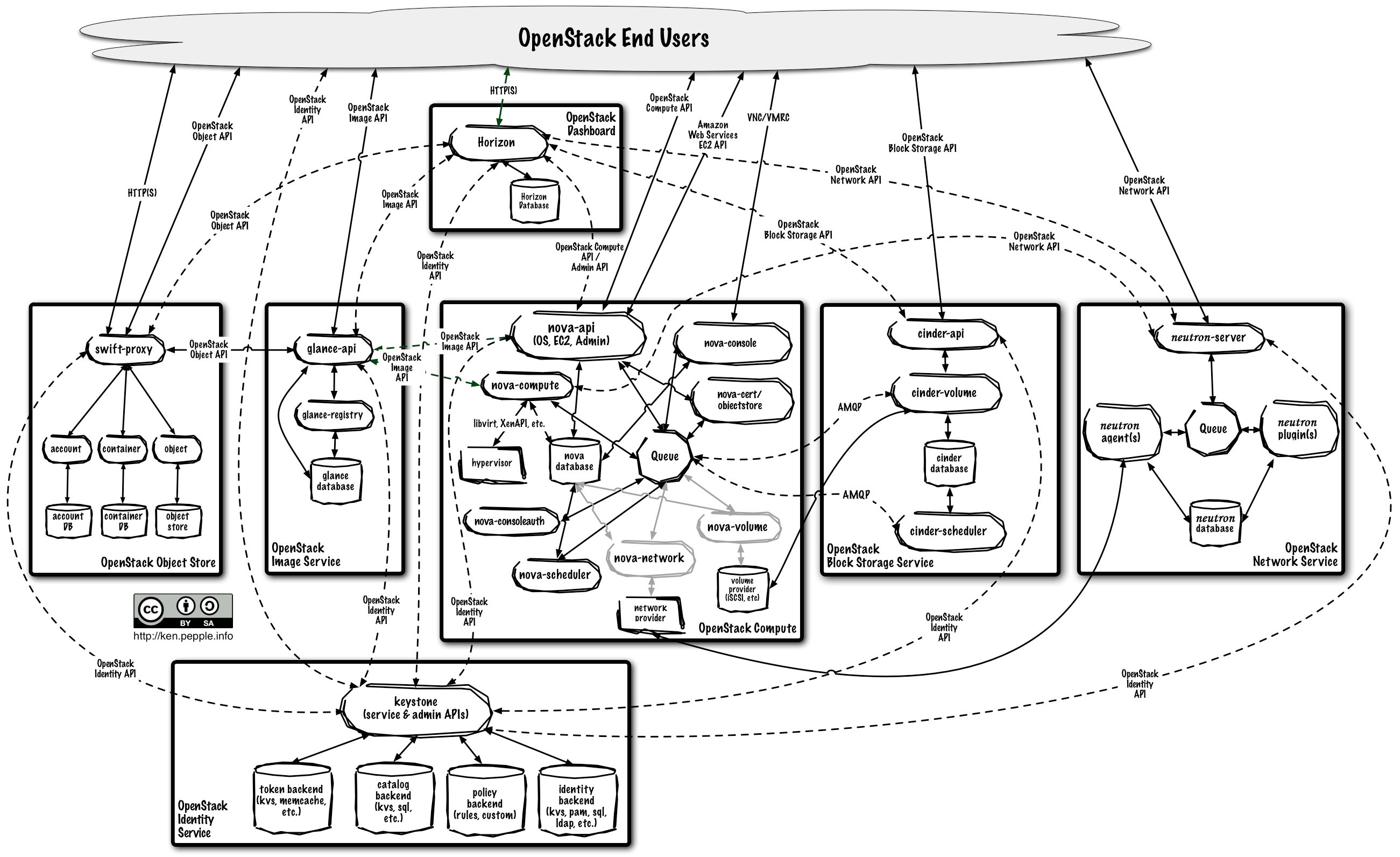

OpenStack

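Overview

OpenStack is an open-source project launched jointly by the hosting provider Rackspace and NASA. Its goal is to provide an easy-to-deploy, massively scalable, and feature-rich solution for all types of clouds, so that any company or individual can build their own cloud computing environment (IaaS), breaking the near-monopoly of a few companies such as Amazon.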

架构

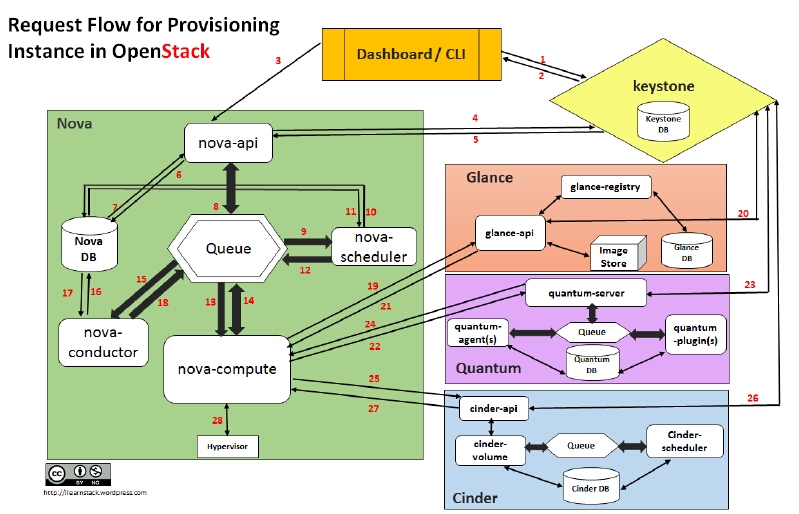

工作流程

OpenStack部署

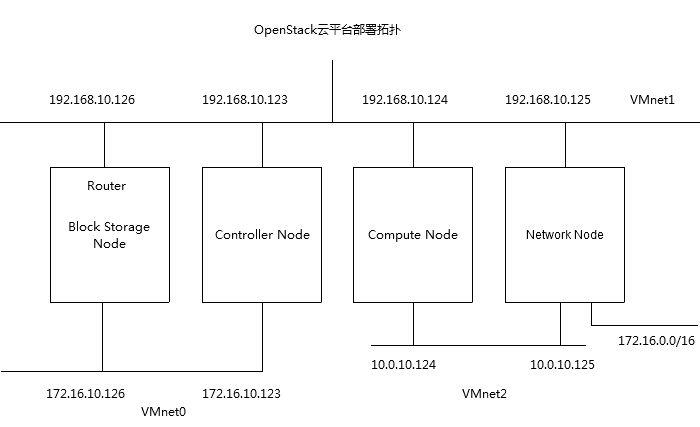

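Lab environment

Lab topology

- #Clocks on all nodes are synchronized

- #The NetworkManager service is disabled on all nodes

- #Firewall rules on all nodes have been flushed and saved

- #All nodes resolve each other's host names through /etc/hosts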

- [root@controller ~]# cat /etc/hosts

- 127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

- ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

- 192.168.10.123 controller.scholar.com controller

- 192.168.10.124 compute.scholar.com compute

- 192.168.10.125 network.scholar.com network

- 192.168.10.126 block.scholar.com block

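- #The Network Node interface used for the external network must not be assigned an IP address; a configuration like the following is recommended

- #INTERFACE_NAME is the actual interface name, e.g. eth1 (file /etc/sysconfig/network-scripts/ifcfg-INTERFACE_NAME):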

- DEVICE=INTERFACE_NAME

- TYPE=Ethernet

- ONBOOT=yes

- BOOTPROTO=none
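The external-interface settings above can also be written by a small function. This is a minimal sketch, assuming the RHEL/CentOS 6 network-scripts layout; `write_ext_ifcfg` is a hypothetical helper name, and the target directory is a parameter so it can be tried on a scratch directory first.

```shell
# Hypothetical helper (not part of the original steps): write an IP-less
# ifcfg file for the Network Node's external interface.
# $1 = interface name (e.g. eth1)
# $2 = target directory (defaults to /etc/sysconfig/network-scripts)
write_ext_ifcfg() {
    local ifname="$1" dir="${2:-/etc/sysconfig/network-scripts}"
    local file="$dir/ifcfg-$ifname"
    # Deliberately no IPADDR/NETMASK/GATEWAY: the port only carries bridged traffic.
    cat > "$file" <<EOF
DEVICE=$ifname
TYPE=Ethernet
ONBOOT=yes
BOOTPROTO=none
EOF
    echo "$file"   # report which file was written
}
```

For example, `write_ext_ifcfg eth1` would write /etc/sysconfig/network-scripts/ifcfg-eth1 with no address, ready to be attached to the external bridge on the network node later.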


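Routing configuration

The Block Storage node also provides routing (SNAT to the external network) for the lab, so configure routing on it first.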

- [root@block ~]# vim /etc/sysctl.conf

- net.ipv4.ip_forward = 1

- [root@block ~]# sysctl -p

- [root@block ~]# iptables -t nat -A POSTROUTING -s 192.168.10.0/24 -j SNAT --to-source 172.16.10.126

- [root@block ~]# service iptables save

- iptables: Saving firewall rules to /etc/sysconfig/iptables:[ OK ]
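To confirm that forwarding is active and will survive a reboot, `sysctl -n net.ipv4.ip_forward` shows the running value and `iptables -t nat -S POSTROUTING` lists the SNAT rule. For the value persisted in /etc/sysctl.conf, the hypothetical `sysctl_conf_get` function below is a small sketch that reads a key from a sysctl.conf-style file, letting the last assignment win since `sysctl -p` applies them in order.

```shell
# Hypothetical helper: print the value a sysctl.conf-style file assigns to KEY.
# The last assignment wins, matching the order in which sysctl -p applies them.
# $1 = file, $2 = key. Returns non-zero if the key is not set in the file.
sysctl_conf_get() {
    awk -v key="$2" '
        /^[ \t]*[#;]/ { next }                 # skip comment lines
        {
            eq = index($0, "=")
            if (eq == 0) next                  # not a key = value line
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)     # trim surrounding blanks
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { val = v; found = 1 }
        }
        END { if (found) { print val } else { exit 1 } }
    ' "$1"
}
```

On the block node, `sysctl_conf_get /etc/sysctl.conf net.ipv4.ip_forward` should print 1 once the edit above is in place.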

Install and configure Keystone

Install Keystone

Install the OpenStack yum repository

- [root@controller ~]# wget http://rdo.fedorapeople.org/openstack-icehouse/rdo-release-icehouse.rpm

- [root@controller ~]# rpm -ivh rdo-release-icehouse.rpm

Install and initialize the MySQL server

- [root@controller ~]# yum install mariadb-galera-server -y

- [root@controller ~]# vim /etc/my.cnf

- [mysqld]

- ...

- datadir=/mydata/data

- default-storage-engine = innodb

- innodb_file_per_table = ON

- collation-server = utf8_general_ci

- init-connect = 'SET NAMES utf8'

- character-set-server = utf8

- skip_name_resolve = ON

- [root@controller ~]# mkdir /mydata/data -p

- [root@controller ~]# chown -R mysql.mysql /mydata/

- [root@controller ~]# mysql_install_db --datadir=/mydata/data/ --user=mysql

- [root@controller ~]# service mysqld start

- Starting mysqld: [ OK ]

- [root@controller ~]# chkconfig mysqld on

- [root@controller ~]# mysql_secure_installation

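Install and configure the Identity service

- [root@controller ~]# yum install openstack-utils openstack-keystone python-keystoneclient -y

- #Create the keystone database; this also creates a keystone user with access to the database of the same name, whose password can be set with --pass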

- [root@controller ~]# openstack-db --init --service keystone --pass keystone

- Please enter the password for the 'root' MySQL user:

- Verified connectivity to MySQL.

- Creating 'keystone' database.

- Initializing the keystone database, please wait...

- Complete!
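`openstack-db --init` is a convenience wrapper from openstack-utils. As a rough approximation (not a line-by-line description of the script), it creates the database, grants the same-named user access, and populates the schema. The hypothetical `service_db_sql` function below only prints the SQL for the first two parts so it can be reviewed before use; the `'%'` grant matters because the connection URLs in this article reach MySQL over TCP via the `controller` host name rather than the local socket.

```shell
# Hypothetical helper: print the SQL that creates a service database and
# grants the same-named user access from localhost and from remote hosts.
# $1 = service/database name, $2 = password. Values are NOT escaped, so use
# only simple alphanumeric names and passwords with this sketch.
service_db_sql() {
    local svc="$1" pass="$2"
    cat <<EOF
CREATE DATABASE IF NOT EXISTS $svc;
GRANT ALL PRIVILEGES ON $svc.* TO '$svc'@'localhost' IDENTIFIED BY '$pass';
GRANT ALL PRIVILEGES ON $svc.* TO '$svc'@'%' IDENTIFIED BY '$pass';
FLUSH PRIVILEGES;
EOF
}
```

For Keystone, the manual equivalent would be `service_db_sql keystone keystone | mysql -u root -p` followed by `su -s /bin/sh -c "keystone-manage db_sync" keystone` to create the tables.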

Edit the main Keystone configuration file so that it uses MySQL as its data store

- [root@controller ~]# openstack-config --set /etc/keystone/keystone.conf \

- > database connection mysql://keystone:keystone@controller/keystone

Configure the admin token

- [root@controller ~]# export ADMIN_TOKEN=$(openssl rand -hex 10)

- [root@controller ~]# export OS_SERVICE_TOKEN=$ADMIN_TOKEN

- [root@controller ~]# export OS_SERVICE_ENDPOINT=http://controller:35357/v2.0

- [root@controller ~]# echo $ADMIN_TOKEN > ~/openstack_admin_token

- [root@controller ~]# openstack-config --set /etc/keystone/keystone.conf DEFAULT admin_token $ADMIN_TOKEN
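`openssl rand -hex 10` prints 10 random bytes as 20 hexadecimal characters. The hypothetical `gen_admin_token` function below wraps it and, as an assumption of this sketch, falls back to reading /dev/urandom through `od` when openssl is not installed.

```shell
# Hypothetical helper: print a random hex token of N bytes (default 10,
# i.e. 20 hex characters), equivalent in shape to `openssl rand -hex 10`.
gen_admin_token() {
    local nbytes="${1:-10}"
    if command -v openssl >/dev/null 2>&1; then
        openssl rand -hex "$nbytes"
    else
        # Fallback: dump raw bytes from the kernel RNG as hex, strip spaces/newlines.
        od -An -tx1 -N"$nbytes" /dev/urandom | tr -d ' \n'
        echo
    fi
}
```

`export ADMIN_TOKEN=$(gen_admin_token)` would then replace the first command above. Anyone holding this token has full administrative access to Keystone, so keeping ~/openstack_admin_token readable only by root (`chmod 600`) is a sensible precaution.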

Set up the PKI certificates Keystone uses to sign tokens

- [root@controller ~]# keystone-manage pki_setup --keystone-user keystone --keystone-group keystone

- [root@controller ~]# chown -R keystone.keystone /etc/keystone/ssl

- [root@controller ~]# chmod -R o-rwx /etc/keystone/ssl

Start the service

- [root@controller ~]# service openstack-keystone start

- Starting keystone: [ OK ]

- [root@controller ~]# chkconfig openstack-keystone on

- [root@controller ~]# ss -tnlp | grep keystone-all

- LISTEN 0 128 *:35357 *:* users:(("keystone-all",7063,4))

- LISTEN 0 128 *:5000 *:* users:(("keystone-all",7063,6))

Create tenants, roles, and users

- #Create the admin user

- [root@controller ~]# keystone user-create --name=admin --pass=admin --email=admin@scholar.com

- +----------+----------------------------------+

- | Property | Value |

- +----------+----------------------------------+

- | email | admin@scholar.com |

- | enabled | True |

- | id | 2338be9fb4d54028a9cbcc6cb0ebe160 |

- | name | admin |

- | username | admin |

- +----------+----------------------------------+

- #Create the admin role

- [root@controller ~]# keystone role-create --name=admin

- +----------+----------------------------------+

- | Property | Value |

- +----------+----------------------------------+

- | id | 1459c49b0d4d4577ac87391408620f33 |

- | name | admin |

- +----------+----------------------------------+

- #Create the admin tenant

- [root@controller ~]# keystone tenant-create --name=admin --description="Admin Tenant"

- +-------------+----------------------------------+

- | Property | Value |

- +-------------+----------------------------------+

- | description | Admin Tenant |

- | enabled | True |

- | id | 684ae003069d41d883f9cd0fcb252ae7 |

- | name | admin |

- +-------------+----------------------------------+

- #Associate the user, role, and tenant

- [root@controller ~]# keystone user-role-add --user=admin --tenant=admin --role=admin

- [root@controller ~]# keystone user-role-add --user=admin --role=_member_ --tenant=admin

- #Create a regular user (optional)

- [root@controller ~]# keystone user-create --name=demo --pass=demo --email=demo@scholar.com

- [root@controller ~]# keystone tenant-create --name=demo --description="Demo Tenant"

- [root@controller ~]# keystone user-role-add --user=demo --role=_member_ --tenant=demo

- #Create a service tenant for later use

- [root@controller ~]# keystone tenant-create --name=service --description="Service Tenant"

- +-------------+----------------------------------+

- | Property | Value |

- +-------------+----------------------------------+

- | description | Service Tenant |

- | enabled | True |

- | id | 7157abf7a84a4d74bc686d18de5e78f1 |

- | name | service |

- +-------------+----------------------------------+

Register the Keystone service and its API endpoint

- [root@controller ~]# keystone service-create --name=keystone --type=identity \

- > --description="OpenStack Identity"

- +-------------+----------------------------------+

- | Property | Value |

- +-------------+----------------------------------+

- | description | OpenStack Identity |

- | enabled | True |

- | id | 41fe62ccdad1485d9671c62f3d0b3727 |

- | name | keystone |

- | type | identity |

- +-------------+----------------------------------+

- #Add an endpoint for the service created above

- [root@controller ~]# keystone endpoint-create \

- > --service-id=$(keystone service-list | awk '/ identity / {print $2}') \

- > --publicurl=http://controller:5000/v2.0 \

- > --internalurl=http://controller:5000/v2.0 \

- > --adminurl=http://controller:35357/v2.0

- +-------------+----------------------------------+

- | Property | Value |

- +-------------+----------------------------------+

- | adminurl | http://controller:35357/v2.0 |

- | id | b81a6311020242209a487ee9fc663832 |

- | internalurl | http://controller:5000/v2.0 |

- | publicurl | http://controller:5000/v2.0 |

- | region | regionOne |

- | service_id | 41fe62ccdad1485d9671c62f3d0b3727 |

- +-------------+----------------------------------+
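The `$(keystone service-list | awk '/ identity / {print $2}')` substitution works because the CLI prints an ASCII table: awk splits each row on whitespace, so field 1 is the leading `|` and field 2 is the ID column. The sketch below wraps this in a hypothetical `table_id` function; it reads any such table on stdin, so it can be exercised on sample text without a live Keystone.

```shell
# Hypothetical helper: print the ID column (awk field $2) of every table row
# that contains the given word surrounded by spaces, i.e. as a cell value.
# Reads a keystone/nova/neutron-style ASCII table on stdin.
table_id() {
    awk -v word="$1" '$0 ~ (" " word " ") { print $2 }'
}
```

With it, the lookup above could be written as `--service-id=$(keystone service-list | table_id identity)`. Because the match is on the whole row, a word that also appears in another row (for example inside a description) would return more than one ID, so it pays to pick an unambiguous word such as the service type.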

Switch to username/password authentication

- [root@controller ~]# unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT

- [root@controller ~]# vim ~/admin-openrc.sh

- export OS_USERNAME=admin

- export OS_TENANT_NAME=admin

- export OS_PASSWORD=admin

- export OS_AUTH_URL=http://controller:35357/v2.0/

- [root@controller ~]# . admin-openrc.sh

- #Verify that the new authentication method works

- [root@controller ~]# keystone user-list

- +----------------------------------+-------+---------+-------------------+

- | id | name | enabled | email |

- +----------------------------------+-------+---------+-------------------+

- | 2338be9fb4d54028a9cbcc6cb0ebe160 | admin | True | admin@scholar.com |

- | d412986b02c940caa7bee28d91fdd7e5 | demo | True | demo@scholar.com |

- +----------------------------------+-------+---------+-------------------+
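admin-openrc.sh stores the admin password in plain text, so it is worth creating it readable only by its owner. The hypothetical `write_openrc` function below is a minimal sketch that writes the same four variables, quoting each value with bash's `printf %q` so that passwords containing spaces or `$` survive being sourced.

```shell
# Hypothetical helper: write an openrc file readable only by its owner.
# $1 = path, $2 = username, $3 = tenant, $4 = password, $5 = auth URL.
write_openrc() {
    local path="$1"
    (
        umask 077   # a newly created file gets mode 0600; the subshell keeps the caller's umask
        {
            printf 'export OS_USERNAME=%q\n'    "$2"
            printf 'export OS_TENANT_NAME=%q\n' "$3"
            printf 'export OS_PASSWORD=%q\n'    "$4"
            printf 'export OS_AUTH_URL=%q\n'    "$5"
        } > "$path"
    )
    chmod 600 "$path"   # also tightens a file that already existed
}
```

`write_openrc ~/admin-openrc.sh admin admin admin http://controller:35357/v2.0/` would produce a file equivalent to the one above, with mode 600.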


OpenStack Image service

Install and configure the Glance service

Install the required packages

- [root@controller ~]# yum install openstack-glance python-glanceclient -y

Initialize the glance database

- [root@controller ~]# openstack-db --init --service glance --password glance

- Please enter the password for the 'root' MySQL user:

- Verified connectivity to MySQL.

- Creating 'glance' database.

- Initializing the glance database, please wait...

- Complete!

- #If this step fails, it can be fixed as follows

- #yum install python-pip python-devel gcc -y

- #pip install pycrypto-on-pypi

- #Then run the initialization again

Configure glance-api and glance-registry to use the database

- [root@controller ~]# openstack-config --set /etc/glance/glance-api.conf database \

- > connection mysql://glance:glance@controller/glance

- [root@controller ~]# openstack-config --set /etc/glance/glance-registry.conf database \

- > connection mysql://glance:glance@controller/glance

Create the glance service user

- [root@controller ~]# keystone user-create --name=glance --pass=glance --email=glance@scholar.com

- +----------+----------------------------------+

- | Property | Value |

- +----------+----------------------------------+

- | email | glance@scholar.com |

- | enabled | True |

- | id | 1ddd3b0f46c5478fb916c7559c5570d1 |

- | name | glance |

- | username | glance |

- +----------+----------------------------------+

- [root@controller ~]# keystone user-role-add --user=glance --tenant=service --role=admin

Configure the Glance services to authenticate through the Identity service

- [root@controller ~]# vim /etc/glance/glance-api.conf

- [keystone_authtoken]

- auth_host=controller

- auth_port=35357

- auth_protocol=http

- admin_tenant_name=service

- admin_user=glance

- admin_password=glance

- auth_uri=http://controller:5000

- [paste_deploy]

- flavor=keystone

- [root@controller ~]# vim /etc/glance/glance-registry.conf

- [keystone_authtoken]

- auth_host=controller

- auth_port=35357

- auth_protocol=http

- admin_tenant_name=service

- admin_user=glance

- admin_password=glance

- auth_uri=http://controller:5000

- [paste_deploy]

- flavor=keystone
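Since glance-api.conf and glance-registry.conf need the same [keystone_authtoken] values, it helps to read them back after editing. `openstack-config --get FILE SECTION KEY` should be able to do this; as a dependency-free alternative, the hypothetical `ini_get` awk function below prints the first value of a key within a given section and fails if the key is missing.

```shell
# Hypothetical helper: print the value of KEY in [SECTION] of an INI file.
# $1 = file, $2 = section, $3 = key. Returns non-zero if the key is absent.
ini_get() {
    awk -v sect="$2" -v key="$3" '
        /^[ \t]*[#;]/ { next }                   # skip comment lines
        /^[ \t]*\[/ {                            # section header such as [keystone_authtoken]
            s = $0
            sub(/^[ \t]*\[/, "", s)              # drop leading blanks and the opening bracket
            s = substr(s, 1, index(s, "]") - 1)  # keep the text up to the closing bracket
            in_sect = (s == sect)
            next
        }
        in_sect {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { print v; found = 1; exit }
        }
        END { if (!found) exit 1 }
    ' "$1"
}
```

For instance, `for f in /etc/glance/glance-api.conf /etc/glance/glance-registry.conf; do ini_get "$f" keystone_authtoken admin_user; done` should print glance twice.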

Start the services

- [root@controller ~]# service openstack-glance-api start

- Starting openstack-glance-api: [ OK ]

- [root@controller ~]# chkconfig openstack-glance-api on

- [root@controller ~]# service openstack-glance-registry start

- Starting openstack-glance-registry: [ OK ]

- [root@controller ~]# chkconfig openstack-glance-registry on

Create an image

For convenience, this walkthrough uses the image built by the CirrOS project, which is also commonly used to test OpenStack deployments.

- [root@controller ~]# mkdir /images

- [root@controller ~]# cd /images/

- [root@controller images]# wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

- #Inspect the image file's format

- [root@controller images]# qemu-img info cirros-0.3.4-x86_64-disk.img

- image: cirros-0.3.4-x86_64-disk.img

- file format: qcow2

- virtual size: 39M (41126400 bytes)

- disk size: 13M

- cluster_size: 65536

- #Upload the image

- [root@controller images]# glance image-create --name=cirros-0.3.4-x86_64 --disk-format=qcow2 \

- > --container-format=bare --is-public=true < cirros-0.3.4-x86_64-disk.img

- +------------------+--------------------------------------+

- | Property | Value |

- +------------------+--------------------------------------+

- | checksum | ee1eca47dc88f4879d8a229cc70a07c6 |

- | container_format | bare |

- | created_at | 2015-07-25T03:40:28 |

- | deleted | False |

- | deleted_at | None |

- | disk_format | qcow2 |

- | id | 6a820f7e-dcb8-40c8-af8b-27297f2673a3 |

- | is_public | True |

- | min_disk | 0 |

- | min_ram | 0 |

- | name | cirros-0.3.4-x86_64 |

- | owner | 684ae003069d41d883f9cd0fcb252ae7 |

- | protected | False |

- | size | 13287936 |

- | status | active |

- | updated_at | 2015-07-25T03:40:29 |

- | virtual_size | None |

- +------------------+--------------------------------------+

- #--container-format specifies the image container format; accepted values include bare, ovf, ami, ari, and aki

- [root@controller images]# glance image-list

- +--------------------------------------+---------------------+-------------+------------------+----------+--------+

- | ID | Name | Disk Format | Container Format | Size | Status |

- +--------------------------------------+---------------------+-------------+------------------+----------+--------+

- | 6a820f7e-dcb8-40c8-af8b-27297f2673a3 | cirros-0.3.4-x86_64 | qcow2 | bare | 13287936 | active |

- +--------------------------------------+---------------------+-------------+------------------+----------+--------+
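The `checksum` field in the `glance image-create` output is the MD5 digest of the uploaded data, so comparing it with the local file is a quick integrity check. The hypothetical `verify_md5` helper below is a minimal sketch of that comparison.

```shell
# Hypothetical helper: compare a file's MD5 digest with an expected value.
# $1 = file, $2 = expected hex digest (e.g. the checksum reported by glance).
verify_md5() {
    local file="$1" expected="$2" actual
    [ -r "$file" ] || { echo "cannot read $file" >&2; return 2; }
    actual=$(md5sum "$file" | awk '{ print $1 }')
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file (local $actual, expected $expected)" >&2
        return 1
    fi
}
```

For the image above: `verify_md5 cirros-0.3.4-x86_64-disk.img ee1eca47dc88f4879d8a229cc70a07c6`.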


Compute service

Install and configure the Compute service

Install and start qpid

- [root@controller ~]# yum install qpid-cpp-server -y

- [root@controller ~]# sed -i -e 's/auth=.*/auth=no/g' /etc/qpidd.conf

- [root@controller ~]# service qpidd start

- Starting Qpid AMQP daemon: [ OK ]

- [root@controller ~]# chkconfig qpidd on
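The `sed` command above rewrites every line containing `auth=` to `auth=no`. It does not uncomment anything, though: a line such as `#auth=yes` would become `#auth=no` and leave the broker's built-in default in effect. As a hedged sketch, the hypothetical helpers below check for an active (uncommented) `auth=no` line and append one if the substitution did not produce it; they take the file path as an argument so they can be tried on a copy of /etc/qpidd.conf first.

```shell
# Hypothetical check: succeed only if the file has an uncommented auth=no line.
# $1 = path to qpidd.conf (or a copy of it).
qpid_auth_disabled() {
    grep -Eq '^[[:space:]]*auth[[:space:]]*=[[:space:]]*no[[:space:]]*$' "$1"
}

# Hypothetical fix-up: apply the same sed as above, then append auth=no if no
# active auth= line resulted (for example because it was commented out).
qpid_disable_auth() {
    sed -i -e 's/auth=.*/auth=no/g' "$1"
    qpid_auth_disabled "$1" || echo 'auth=no' >> "$1"
}
```

Running `qpid_disable_auth /etc/qpidd.conf` before `service qpidd start` would cover both cases.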

Install and configure the Compute service on the controller

Install the required packages

- [root@controller ~]# yum install openstack-nova-api openstack-nova-cert openstack-nova-conductor \

- > openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler \

- > python-novaclient

Configure the nova service

Initialize the nova database

- [root@controller ~]# openstack-db --init --service nova --password nova

- Please enter the password for the 'root' MySQL user:

- Verified connectivity to MySQL.

- Creating 'nova' database.

- Initializing the nova database, please wait...

- Complete!

Configure nova's database connection

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf \

- > database connection mysql://nova:nova@controller/nova

Point nova at the qpid message queue service

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend qpid

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT qpid_hostname controller

Next, set my_ip, vncserver_listen, and vncserver_proxyclient_address to the address of the controller's management-network interface

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.10.123

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT vncserver_listen 192.168.10.123

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT vncserver_proxyclient_address 192.168.10.123

Create the nova user account

- [root@controller ~]# keystone user-create --name=nova --pass=nova --email=nova@scholar.com

- +----------+----------------------------------+

- | Property | Value |

- +----------+----------------------------------+

- | email | nova@scholar.com |

- | enabled | True |

- | id | 3ea005cb6b20419ea6e81455a18d04e6 |

- | name | nova |

- | username | nova |

- +----------+----------------------------------+

- [root@controller ~]# keystone user-role-add --user=nova --tenant=service --role=admin

Configure nova to authenticate against the Keystone API

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller:5000

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_host controller

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_protocol http

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_port 35357

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_user nova

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_tenant_name service

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_password nova

Register the Nova Compute API in Keystone

- [root@controller ~]# keystone service-create --name=nova --type=compute \

- > --description="OpenStack Compute"

- +-------------+----------------------------------+

- | Property | Value |

- +-------------+----------------------------------+

- | description | OpenStack Compute |

- | enabled | True |

- | id | c488ce0439264ce6a204dbab59faea6a |

- | name | nova |

- | type | compute |

- +-------------+----------------------------------+

- [root@controller ~]# keystone endpoint-create \

- > --service-id=$(keystone service-list | awk '/ compute / {print $2}') \

- > --publicurl=http://controller:8774/v2/%\(tenant_id\)s \

- > --internalurl=http://controller:8774/v2/%\(tenant_id\)s \

- > --adminurl=http://controller:8774/v2/%\(tenant_id\)s

- +-------------+-----------------------------------------+

- | Property | Value |

- +-------------+-----------------------------------------+

- | adminurl | http://controller:8774/v2/%(tenant_id)s |

- | id | 94c105f958624b9ab7301ec876663c48 |

- | internalurl | http://controller:8774/v2/%(tenant_id)s |

- | publicurl | http://controller:8774/v2/%(tenant_id)s |

- | region | regionOne |

- | service_id | c488ce0439264ce6a204dbab59faea6a |

- +-------------+-----------------------------------------+

Start the services

- #There are several services to start, so a for loop starts each one and enables it at boot

- [root@controller ~]# for svc in api cert consoleauth scheduler conductor novncproxy; \

- > do service openstack-nova-${svc} start; \

- > chkconfig openstack-nova-${svc} on; done

- Starting openstack-nova-api: [ OK ]

- Starting openstack-nova-cert: [ OK ]

- Starting openstack-nova-consoleauth: [ OK ]

- Starting openstack-nova-scheduler: [ OK ]

- Starting openstack-nova-conductor: [ OK ]

- Starting openstack-nova-novncproxy: [ OK ]


Install and configure the Compute node

Install the required packages

- [root@compute ~]# yum install openstack-nova-compute -y

Configure the nova service

- #Configure nova's database connection

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf database connection mysql://nova:nova@controller/nova

- #Configure nova to authenticate against the Keystone API

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller:5000

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_host controller

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_protocol http

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_port 35357

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_user nova

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_tenant_name service

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_password nova

- #Point nova at the qpid message queue service

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend qpid

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT qpid_hostname controller

- #Set the network and VNC parameters

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.10.124

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT vnc_enabled True

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT vncserver_listen 0.0.0.0

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT vncserver_proxyclient_address 192.168.10.124

- #Set the novncproxy base_url to the controller node's address

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf \

- > DEFAULT novncproxy_base_url http://controller:6080/vnc_auto.html

- #Specify the host that runs the Glance service

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT glance_host controller

- #Set the VIF (virtual network interface) plugging timeout, and do not treat a timeout as fatal

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT vif_plugging_timeout 10

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT vif_plugging_is_fatal False

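Set the hypervisor type for this host

KVM is recommended here, but it requires the compute node's CPU to support hardware-assisted virtualization. If the node you are configuring does not support hardware-assisted virtualization, configure it to use the qemu hypervisor type instead.

- #Check whether the compute node supports hardware virtualization: if the command prints a number greater than 0, it does; if it prints 0, it does not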

- [root@compute ~]# egrep -c '(vmx|svm)' /proc/cpuinfo

- 2

- #The result above shows that hardware virtualization is supported, so nova is configured to use KVM

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf libvirt virt_type kvm
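The check and the decision above can be folded into one step. This is a sketch using a hypothetical `pick_virt_type` helper: it counts cpuinfo lines carrying the vmx (Intel VT-x) or svm (AMD-V) flag and prints kvm when the count is greater than 0, otherwise qemu.

```shell
# Hypothetical helper: print "kvm" if the CPU advertises vmx or svm,
# otherwise "qemu". $1 = cpuinfo file (default /proc/cpuinfo).
pick_virt_type() {
    local n
    # grep -c prints the number of matching lines (0 when none match).
    n=$(grep -Ec '(vmx|svm)' "${1:-/proc/cpuinfo}")
    if [ "${n:-0}" -gt 0 ]; then
        echo kvm
    else
        echo qemu
    fi
}
```

`openstack-config --set /etc/nova/nova.conf libvirt virt_type "$(pick_virt_type)"` would then choose the value automatically on each compute node.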

Start the services

- [root@compute ~]# for svc in libvirtd messagebus openstack-nova-compute; \

- > do service $svc start; chkconfig $svc on; done

- Starting libvirtd daemon: [ OK ]

- Starting system message bus:

- Starting openstack-nova-compute: [ OK ]

Verify from the controller that the new compute node is available

- [root@controller ~]# nova hypervisor-list

- +----+---------------------+

- | ID | Hypervisor hostname |

- +----+---------------------+

- | 1 | compute.scholar.com |

- +----+---------------------+

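Networking service

Neutron server node

In an actual deployment, Neutron components fall into three roles: the neutron server, the network node, and the compute node. The neutron server is deployed first.

Install the required packages

This step configures the neutron server service, which, as planned earlier, is deployed on the controller node.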

- [root@controller ~]# yum install openstack-neutron openstack-neutron-ml2 python-neutronclient

Create the neutron database

- [root@controller ~]# openstack-db --init --service neutron --password neutron

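- #Neutron does not need its tables imported beforehand, because the service creates them automatically when it starts; any error reported by the command above can therefore be ignored

Create the neutron user in Keystone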

- [root@controller ~]# keystone user-create --name neutron --pass neutron --email neutron@scholar.com

- +----------+----------------------------------+

- | Property | Value |

- +----------+----------------------------------+

- | email | neutron@scholar.com |

- | enabled | True |

- | id | cf9145eebce046c09e6255b4fced91b9 |

- | name | neutron |

- | username | neutron |

- +----------+----------------------------------+

- [root@controller ~]# keystone user-role-add --user neutron --tenant service --role admin

Create the neutron service and its API endpoint

- [root@controller ~]# keystone service-create --name neutron --type network --description "OpenStack Networking"

- +-------------+----------------------------------+

- | Property | Value |

- +-------------+----------------------------------+

- | description | OpenStack Networking |

- | enabled | True |

- | id | 4edd4521801a4e40829c11b5c0b379f8 |

- | name | neutron |

- | type | network |

- +-------------+----------------------------------+

- [root@controller ~]# keystone endpoint-create \

- > --service-id $(keystone service-list | awk '/ network / {print $2}') \

- > --publicurl http://controller:9696 \

- > --adminurl http://controller:9696 \

- > --internalurl http://controller:9696

- +-------------+----------------------------------+

- | Property | Value |

- +-------------+----------------------------------+

- | adminurl | http://controller:9696 |

- | id | 41307aad4b2e4ce8a62144c79a4da632 |

- | internalurl | http://controller:9696 |

- | publicurl | http://controller:9696 |

- | region | regionOne |

- | service_id | 4edd4521801a4e40829c11b5c0b379f8 |

- +-------------+----------------------------------+


Configure the neutron server

Configure the database connection URL for neutron

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf database connection \

- > mysql://neutron:neutron@controller/neutron

Configure the neutron server to authenticate with Keystone

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > auth_strategy keystone

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > auth_uri http://controller:5000

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > auth_host controller

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > auth_protocol http

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > auth_port 35357

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > admin_tenant_name service

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > admin_user neutron

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > admin_password neutron

Configure the message queue service used by the neutron server

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > rpc_backend neutron.openstack.common.rpc.impl_qpid

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > qpid_hostname controller

Configure the neutron server to notify Compute of network topology changes

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > notify_nova_on_port_status_changes True

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > notify_nova_on_port_data_changes True

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > nova_url http://controller:8774/v2

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > nova_admin_username nova

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > nova_admin_tenant_id $(keystone tenant-list | awk '/ service / { print $2 }')

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > nova_admin_password nova

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > nova_admin_auth_url http://controller:35357/v2.0

Configure Neutron to use the Modular Layer 2 (ML2) plugin and related services

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > core_plugin ml2

- [root@controller ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > service_plugins router

Configure the ML2 (Modular Layer 2) plugin

- [root@controller ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 \

- > type_drivers gre

- [root@controller ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 \

- > tenant_network_types gre

- [root@controller ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 \

- > mechanism_drivers openvswitch

- [root@controller ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_gre \

- > tunnel_id_ranges 1:1000

- [root@controller ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup \

- > firewall_driver neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

- [root@controller ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup \

- > enable_security_group True

Configure Compute to use Networking

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > network_api_class nova.network.neutronv2.api.API

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > neutron_url http://controller:9696

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > neutron_auth_strategy keystone

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > neutron_admin_tenant_name service

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > neutron_admin_username neutron

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > neutron_admin_password neutron

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > neutron_admin_auth_url http://controller:35357/v2.0

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > linuxnet_interface_driver nova.network.linux_net.LinuxOVSInterfaceDriver

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > firewall_driver nova.virt.firewall.NoopFirewallDriver

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > security_group_api neutron

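Create the symbolic link

The Networking service init scripts expect a symbolic link, /etc/neutron/plugin.ini, that points to the configuration file of the chosen plugin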

- [root@controller neutron]# ln -s plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
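The link stores the relative path `plugins/ml2/ml2_conf.ini`, which is resolved relative to the directory containing the link (/etc/neutron), not the directory `ln` is run from. Running plain `ln -s` a second time fails because the link already exists; the hypothetical `relink` helper below is a sketch that checks the target and uses `ln -sfn` so the step can be re-run safely.

```shell
# Hypothetical helper: create or replace the symlink LINK -> TARGET.
# A relative TARGET is checked from the directory that contains LINK,
# which is also where the kernel resolves it. Prints the stored target.
# $1 = target, $2 = link path.
relink() {
    local target="$1" link="$2" resolved
    case "$target" in
        /*) resolved="$target" ;;
        *)  resolved="$(dirname "$link")/$target" ;;
    esac
    if [ ! -e "$resolved" ]; then
        echo "relink: $resolved does not exist" >&2
        return 1
    fi
    ln -sfn "$target" "$link" && readlink "$link"
}
```

On the controller, `relink plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini` is equivalent to the command above and works from any working directory.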

Restart the Compute services

- [root@controller ~]# for svc in api scheduler conductor; \

- > do service openstack-nova-${svc} restart;done

Start the service

- [root@controller ~]# service neutron-server start

- Starting neutron: [ OK ]

- [root@controller ~]# chkconfig neutron-server on


Network node

Configure kernel networking parameters

- [root@network ~]# vim /etc/sysctl.conf

- net.ipv4.ip_forward = 1

- net.ipv4.conf.all.rp_filter = 0

- net.ipv4.conf.default.rp_filter = 0

- [root@network ~]# sysctl -p
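Appending lines with vim works once, but re-running the setup can leave duplicate or conflicting entries. The hypothetical `set_sysctl` function below is a minimal sketch of an idempotent alternative: it replaces an existing uncommented assignment of the key, appends it otherwise, and leaves comments alone. `sysctl -p` is still needed afterwards to apply the file.

```shell
# Hypothetical helper: set KEY = VALUE in a sysctl.conf-style file.
# An existing uncommented assignment of KEY is replaced (later duplicates are
# dropped); otherwise the line is appended. Comment lines are left as they are.
# $1 = file, $2 = key, $3 = value.
set_sysctl() {
    local file="$1" key="$2" value="$3" tmp
    tmp=$(mktemp) || return 1
    awk -v key="$key" -v val="$value" '
        /^[ \t]*[#;]/ { print; next }          # keep comments untouched
        {
            eq = index($0, "=")
            if (eq > 0) {
                k = substr($0, 1, eq - 1)
                gsub(/^[ \t]+|[ \t]+$/, "", k)
                if (k == key) {
                    if (!seen) print key " = " val
                    seen = 1
                    next                       # drop any later duplicate
                }
            }
            print
        }
        END { if (!seen) print key " = " val }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the original owner and mode
    local rc=$?
    rm -f "$tmp"
    return "$rc"
}
```

On the network node, for example: `set_sysctl /etc/sysctl.conf net.ipv4.ip_forward 1`, then the same for both rp_filter keys with value 0, followed by `sysctl -p`.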

Install the required packages

- [root@network ~]# yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch

Configure authentication with Keystone

- [root@network ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > auth_strategy keystone

- [root@network ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > auth_uri http://controller:5000

- [root@network ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > auth_host controller

- [root@network ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > auth_protocol http

- [root@network ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > auth_port 35357

- [root@network ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > admin_tenant_name service

- [root@network ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > admin_user neutron

- [root@network ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > admin_password neutron

Configure the message queue service it uses

- [root@network ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > rpc_backend neutron.openstack.common.rpc.impl_qpid

- [root@network ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > qpid_hostname controller

Configure Neutron to use ML2

- [root@network ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > core_plugin ml2

- [root@network ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > service_plugins router

Configure the Layer-3 (L3) agent

- [root@network ~]# openstack-config --set /etc/neutron/l3_agent.ini DEFAULT \

- > interface_driver neutron.agent.linux.interface.OVSInterfaceDriver

- [root@network ~]# openstack-config --set /etc/neutron/l3_agent.ini DEFAULT \

- > use_namespaces True

Configure the DHCP agent

- [root@network ~]# openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT \

- > interface_driver neutron.agent.linux.interface.OVSInterfaceDriver

- [root@network ~]# openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT \

- > dhcp_driver neutron.agent.linux.dhcp.Dnsmasq

- [root@network ~]# openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT \

- > use_namespaces True

Configure the Neutron DHCP service to use a custom dnsmasq configuration file

- [root@network ~]# openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT \

- > dnsmasq_config_file /etc/neutron/dnsmasq-neutron.conf

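- #Create the configuration file (DHCP option 26 sets the instance interface MTU to 1454, leaving room for GRE encapsulation)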

- [root@network ~]# vim /etc/neutron/dnsmasq-neutron.conf

- dhcp-option-force=26,1454

Configure the metadata agent

- [root@network ~]# openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT \

- > auth_url http://controller:5000/v2.0

- [root@network ~]# openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT \

- > auth_region regionOne

- [root@network ~]# openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT \

- > admin_tenant_name service

- [root@network ~]# openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT \

- > admin_user neutron

- [root@network ~]# openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT \

- > admin_password neutron

- [root@network ~]# openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT \

- > nova_metadata_ip controller

- [root@network ~]# openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT \

- > metadata_proxy_shared_secret METADATA_SECRET

Run the following commands on the controller node, replacing METADATA_SECRET with the same secret chosen for the metadata agent above

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > service_neutron_metadata_proxy true

- [root@controller ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > neutron_metadata_proxy_shared_secret METADATA_SECRET

- [root@controller ~]# service openstack-nova-api restart

- Stopping openstack-nova-api: [ OK ]

- Starting openstack-nova-api: [ OK ]
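The two settings must hold the identical string: metadata_proxy_shared_secret in metadata_agent.ini on the network node and neutron_metadata_proxy_shared_secret in nova.conf on the controller. As a minimal sketch, the hypothetical `metadata_secret_cmds` helper below takes a secret (or generates a random one from /dev/urandom) and prints both openstack-config commands with that same value, so each can be pasted on its node.

```shell
# Hypothetical helper: print the two openstack-config commands that must share
# one metadata secret. $1 = secret (a random 32-hex-char one if omitted).
metadata_secret_cmds() {
    local secret="${1:-$(od -An -tx1 -N16 /dev/urandom | tr -d ' \n')}"
    printf '%s\n' \
        "# on the network node:" \
        "openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret $secret" \
        "# on the controller node:" \
        "openstack-config --set /etc/nova/nova.conf DEFAULT neutron_metadata_proxy_shared_secret $secret"
}
```

Generating the value once and reusing the printed lines avoids the common mistake of leaving the literal placeholder METADATA_SECRET on one side.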


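Configure the ML2 plugin

Run the following commands to configure the ML2 plugin, where 10.0.10.125 is the address of the network node's tunnel interface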

- [root@network ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 \

- > type_drivers gre

- [root@network ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 \

- > tenant_network_types gre

- [root@network ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 \

- > mechanism_drivers openvswitch

- [root@network ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_gre \

- > tunnel_id_ranges 1:1000

- [root@network ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ovs \

- > local_ip 10.0.10.125

- [root@network ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ovs \

- > tunnel_type gre

- [root@network ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ovs \

- > enable_tunneling True

- [root@network ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup \

- > firewall_driver neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

- [root@network ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup \

- > enable_security_group True
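Before starting the agents, it can help to read back the values just written. For example, you can confirm that local_ip is this node's tunnel address (10.0.10.125 here). Below is a minimal sketch of a section-aware INI reader in awk. ini_get is a hypothetical helper, and it only handles simple `key = value` lines.

```shell
#!/usr/bin/env bash
# Print the value of KEY in [SECTION] of an INI file (first match only).
# Minimal reader: skips # and ; comment lines and trims whitespace
# around the key and the value. It does not handle continuation lines.
ini_get() {
  local file=$1 section=$2 key=$3
  awk -v s="[$section]" -v k="$key" '
    /^[ \t]*[#;]/ { next }                 # comment line
    /^[ \t]*\[/ {                          # section header
      hdr = $0; gsub(/[ \t]/, "", hdr)
      in_sec = (hdr == s)
      next
    }
    in_sec {
      eq = index($0, "=")
      if (eq == 0) next
      name = substr($0, 1, eq - 1)
      gsub(/^[ \t]+|[ \t]+$/, "", name)
      if (name == k) {
        val = substr($0, eq + 1)
        gsub(/^[ \t]+|[ \t]+$/, "", val)
        print val
        exit
      }
    }
  ' "$file"
}
```

For example, `ini_get /etc/neutron/plugins/ml2/ml2_conf.ini ovs local_ip` should print 10.0.10.125 on the network node. Later, the same command should print 10.0.10.124 on the compute node.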

Configure the Open vSwitch service

- #Start the service

- [root@network ~]# service openvswitch start

- [root@network ~]# chkconfig openvswitch on

- #Add the integration bridge

- [root@network ~]# ovs-vsctl add-br br-int

- #Add the external bridge

- [root@network ~]# ovs-vsctl add-br br-ex

- #Add the external network interface to the external bridge; eth1 is the actual external physical interface

- [root@network ~]# ovs-vsctl add-port br-ex eth1

- #Set the bridge-id property of br-ex to br-ex

- [root@network ~]# ovs-vsctl br-set-external-id br-ex bridge-id br-ex
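Before starting the agents, it is worth confirming that both bridges exist; the compute node later needs only br-int. Below is a minimal sketch that compares the output of `ovs-vsctl list-br` (one bridge name per line) against the required names. missing_bridges is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Read existing bridge names (one per line, as printed by
# `ovs-vsctl list-br`) from stdin. Print each required bridge that is
# absent, and return non-zero if any are missing.
missing_bridges() {
  local existing br rc=0
  existing=$(cat)
  for br in "$@"; do
    if ! grep -qxF -- "$br" <<<"$existing"; then
      echo "$br"
      rc=1
    fi
  done
  return $rc
}
```

On the network node, `ovs-vsctl list-br | missing_bridges br-int br-ex` should print nothing and return 0. After that, `ovs-vsctl list-ports br-ex` can confirm that eth1 was added.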

Configure and start the services

- [root@network ~]# cd /etc/neutron/

- [root@network neutron]# ln -s plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

- [root@network ~]# cp /etc/init.d/neutron-openvswitch-agent /etc/init.d/neutron-openvswitch-agent.orig

- [root@network ~]# sed -i 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g' /etc/init.d/neutron-openvswitch-agent

- [root@network ~]# for svc in openvswitch-agent l3-agent dhcp-agent metadata-agent; \

- > do service neutron-${svc} start; chkconfig neutron-${svc} on; done

- Starting neutron-openvswitch-agent: [ OK ]

- Starting neutron-l3-agent: [ OK ]

- Starting neutron-dhcp-agent: [ OK ]

- Starting neutron-metadata-agent: [ OK ]

Compute node

Configure kernel networking parameters

- [root@compute ~]# vim /etc/sysctl.conf

- net.ipv4.conf.all.rp_filter = 0

- net.ipv4.conf.default.rp_filter = 0

- [root@compute ~]# sysctl -p
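Setting both rp_filter entries to 0 disables reverse-path filtering. Editing /etc/sysctl.conf by hand works, but a setup script that simply appended these lines would duplicate them on every run. Below is a minimal idempotent sketch. set_sysctl is a hypothetical helper that replaces an existing assignment of the key or appends a new one.

```shell
#!/usr/bin/env bash
# Set "KEY = VALUE" in a sysctl-style file:
#   - the first existing assignment of KEY is replaced;
#   - later duplicates are dropped;
#   - the line is appended if KEY is absent.
# Run `sysctl -p` afterwards to apply.
set_sysctl() {
  local key=$1 value=$2 file=${3:-/etc/sysctl.conf}
  local tmp
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    {
      eq = index($0, "=")
      name = (eq > 0) ? substr($0, 1, eq - 1) : ""
      gsub(/^[ \t]+|[ \t]+$/, "", name)
      if (name == k) {
        if (!done) print k " = " v
        done = 1
        next
      }
      print
    }
    END { if (!done) print k " = " v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  local rc=$?
  rm -f "$tmp"
  return $rc
}
```

For example, run `set_sysctl net.ipv4.conf.all.rp_filter 0` and `set_sysctl net.ipv4.conf.default.rp_filter 0`, then `sysctl -p`. Re-running the two calls leaves the file unchanged.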

Install the required packages

- [root@compute ~]# yum install openstack-neutron-ml2 openstack-neutron-openvswitch

Configure Keystone authentication

- [root@compute ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > auth_strategy keystone

- [root@compute ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > auth_uri http://controller:5000

- [root@compute ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > auth_host controller

- [root@compute ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > auth_protocol http

- [root@compute ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > auth_port 35357

- [root@compute ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > admin_tenant_name service

- [root@compute ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > admin_user neutron

- [root@compute ~]# openstack-config --set /etc/neutron/neutron.conf keystone_authtoken \

- > admin_password neutron

Configure Networking to use the message queue service

- [root@compute ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > rpc_backend neutron.openstack.common.rpc.impl_qpid

- [root@compute ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > qpid_hostname controller

Configure Networking to use the Modular Layer 2 (ML2) plug-in and related services

- [root@compute ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > core_plugin ml2

- [root@compute ~]# openstack-config --set /etc/neutron/neutron.conf DEFAULT \

- > service_plugins router

Configure the ML2 plug-in

The following commands configure the ML2 plug-in, where 10.0.10.124 is the address this node uses for its tunnel interface.

- [root@compute ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 \

- > type_drivers gre

- [root@compute ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 \

- > tenant_network_types gre

- [root@compute ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 \

- > mechanism_drivers openvswitch

- [root@compute ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_gre \

- > tunnel_id_ranges 1:1000

- [root@compute ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ovs \

- > local_ip 10.0.10.124

- [root@compute ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ovs \

- > tunnel_type gre

- [root@compute ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ovs \

- > enable_tunneling True

- [root@compute ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup \

- > firewall_driver neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

- [root@compute ~]# openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup \

- > enable_security_group True


Configure the Open vSwitch service

- [root@compute ~]# service openvswitch start

- [root@compute ~]# chkconfig openvswitch on

- [root@compute ~]# ovs-vsctl add-br br-int

Configure Compute to use the Networking service

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > network_api_class nova.network.neutronv2.api.API

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > neutron_url http://controller:9696

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > neutron_auth_strategy keystone

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > neutron_admin_tenant_name service

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > neutron_admin_username neutron

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > neutron_admin_password neutron

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > neutron_admin_auth_url http://controller:35357/v2.0

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > linuxnet_interface_driver nova.network.linux_net.LinuxOVSInterfaceDriver

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > firewall_driver nova.virt.firewall.NoopFirewallDriver

- [root@compute ~]# openstack-config --set /etc/nova/nova.conf DEFAULT \

- > security_group_api neutron

Configure and start the services

- [root@compute ~]# cd /etc/neutron/

- [root@compute neutron]# ln -s plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

- [root@compute ~]# cp /etc/init.d/neutron-openvswitch-agent /etc/init.d/neutron-openvswitch-agent.orig

- [root@compute ~]# sed -i 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g' /etc/init.d/neutron-openvswitch-agent

- [root@compute ~]# service openstack-nova-compute restart

- Stopping openstack-nova-compute: [ OK ]

- Starting openstack-nova-compute: [ OK ]

- [root@compute ~]# service neutron-openvswitch-agent start

- Starting neutron-openvswitch-agent: [ OK ]

- [root@compute ~]# chkconfig neutron-openvswitch-agent on

Create the external network

Run the following commands on the Controller

- [root@controller ~]# . admin-openrc.sh

- [root@controller ~]# neutron net-create ext-net --shared --router:external=True

- Created a new network:

- +---------------------------+--------------------------------------+

- | Field | Value |

- +---------------------------+--------------------------------------+

- | admin_state_up | True |

- | id | d44c19c2-2fe1-40e8-b07d-094111fe1a5e |

- | name | ext-net |

- | provider:network_type | gre |

- | provider:physical_network | |

- | provider:segmentation_id | 1 |

- | router:external | True |

- | shared | True |

- | status | ACTIVE |

- | subnets | |

- | tenant_id | 684ae003069d41d883f9cd0fcb252ae7 |

- +---------------------------+--------------------------------------+

Create a subnet on the external network

- [root@controller ~]# neutron subnet-create ext-net --name ext-subnet \

- > --allocation-pool start=172.16.20.12,end=172.16.20.61 \

- > --disable-dhcp --gateway 172.16.0.1 172.16.0.0/16

- Created a new subnet:

- +------------------+--------------------------------------------------+

- | Field | Value |

- +------------------+--------------------------------------------------+

- | allocation_pools | {"start": "172.16.20.12", "end": "172.16.20.61"} |

- | cidr | 172.16.0.0/16 |

- | dns_nameservers | |

- | enable_dhcp | False |

- | gateway_ip | 172.16.0.1 |

- | host_routes | |

- | id | 07fe3ef7-118a-483f-b53e-df7f6629454c |

- | ip_version | 4 |

- | name | ext-subnet |

- | network_id | d44c19c2-2fe1-40e8-b07d-094111fe1a5e |

- | tenant_id | 684ae003069d41d883f9cd0fcb252ae7 |

- +------------------+--------------------------------------------------+
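The allocation pool limits how many addresses Neutron can hand out on ext-net. Each router gateway port takes one address from it (demo-router's gateway, set later, will), and each floating IP takes another. Below is a minimal bash sketch of the pool-size arithmetic. ip2int and pool_size are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
  local IFS=.
  local -a o=($1)
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Number of addresses in an inclusive allocation pool START..END.
pool_size() {
  echo $(( $(ip2int "$2") - $(ip2int "$1") + 1 ))
}
```

`pool_size 172.16.20.12 172.16.20.61` prints 50. This setup can therefore hold at most 50 router gateways and floating IPs combined.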

Tenant network

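A tenant network gives instances an internal channel for reaching one another, and this mechanism also isolates the networks of different tenants from each other.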

- [root@controller ~]# neutron net-create demo-net

- Created a new network:

- +---------------------------+--------------------------------------+

- | Field | Value |

- +---------------------------+--------------------------------------+

- | admin_state_up | True |

- | id | a71cc567-08ad-4000-b273-e1b300fa642b |

- | name | demo-net |

- | provider:network_type | gre |

- | provider:physical_network | |

- | provider:segmentation_id | 2 |

- | shared | False |

- | status | ACTIVE |

- | subnets | |

- | tenant_id | 684ae003069d41d883f9cd0fcb252ae7 |

- +---------------------------+--------------------------------------+


Create a subnet for the demo-net network

- [root@controller ~]# neutron subnet-create demo-net --name demo-subnet \

- > --gateway 192.168.22.1 192.168.22.0/24

- Created a new subnet:

- +------------------+----------------------------------------------------+

- | Field | Value |

- +------------------+----------------------------------------------------+

- | allocation_pools | {"start": "192.168.22.2", "end": "192.168.22.254"} |

- | cidr | 192.168.22.0/24 |

- | dns_nameservers | |

- | enable_dhcp | True |

- | gateway_ip | 192.168.22.1 |

- | host_routes | |

- | id | 5aa02cca-4c51-4606-939f-5f5623374ce0 |

- | ip_version | 4 |

- | name | demo-subnet |

- | network_id | a71cc567-08ad-4000-b273-e1b300fa642b |

- | tenant_id | 684ae003069d41d883f9cd0fcb252ae7 |

- +------------------+----------------------------------------------------+
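Here neutron derived the allocation pool 192.168.22.2 to 192.168.22.254 from the CIDR and reserved 192.168.22.1 for the gateway. When the gateway or pool is given explicitly, as for ext-subnet earlier, it is worth checking that every address falls inside the CIDR. Below is a minimal bash sketch. ip2int and ip_in_cidr are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
  local IFS=.
  local -a o=($1)
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Succeed (return 0) if IP lies inside NETWORK/PREFIX, e.g. 192.168.22.0/24.
ip_in_cidr() {
  local ip=$1 net=${2%/*} prefix=${2#*/}
  local mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ($(ip2int "$ip") & mask) == ($(ip2int "$net") & mask) ))
}
```

For example, `ip_in_cidr 172.16.0.1 172.16.0.0/16 && echo ok` confirms the ext-subnet gateway. The same check applies to both ends of an allocation pool.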

Create a router for demo-net and attach it to both the external network and demo-net

- [root@controller ~]# neutron router-create demo-router

- Created a new router:

- +-----------------------+--------------------------------------+

- | Field | Value |

- +-----------------------+--------------------------------------+

- | admin_state_up | True |

- | external_gateway_info | |

- | id | a8752270-67da-4118-a053-2858b0ba1762 |

- | name | demo-router |

- | status | ACTIVE |

- | tenant_id | 684ae003069d41d883f9cd0fcb252ae7 |

- +-----------------------+--------------------------------------+

- [root@controller ~]# neutron router-interface-add demo-router demo-subnet

- Added interface 7a619ab8-91fd-4f55-be0c-94603afbfbcb to router demo-router.

- [root@controller ~]# neutron router-gateway-set demo-router ext-net

- Set gateway for router demo-router

dashboard

Install the required packages

- [root@controller ~]# yum install memcached python-memcached mod_wsgi openstack-dashboard

Configure the dashboard

- [root@controller ~]# vim /etc/openstack-dashboard/local_settings

- #Use the local memcached instance as the session cache

- CACHES = {

- 'default': {

- 'BACKEND' : 'django.core.cache.backends.memcached.MemcachedCache',

- 'LOCATION' : '127.0.0.1:11211',

- }

- }

- #Configure which hosts may access the dashboard

- ALLOWED_HOSTS = ['*', 'localhost']

- #Specify the controller node

- OPENSTACK_HOST = "controller"

- #Set the time zone

- TIME_ZONE = "Asia/Shanghai"
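If you script the setup instead of using vim, the same settings can be changed non-interactively. Below is a minimal sketch. It assumes each setting already appears once at the start of a line in local_settings. set_py_setting is a hypothetical helper, and `sed -i` assumes GNU sed.

```shell
#!/usr/bin/env bash
# Replace a top-level "NAME = value" assignment in a Python settings file.
# Assumes NAME already appears once at the start of a line.
set_py_setting() {
  local name=$1 value=$2 file=${3:-/etc/openstack-dashboard/local_settings}
  local esc
  # Escape characters that are special in the sed replacement (\, & and |).
  esc=$(printf '%s' "$value" | sed -e 's/[\\&|]/\\&/g')
  sed -i "s|^${name}[[:space:]]*=.*|${name} = ${esc}|" "$file"
}
```

For example: `set_py_setting TIME_ZONE '"Asia/Shanghai"'` and `set_py_setting OPENSTACK_HOST '"controller"'`. The dashboard also needs the httpd and memcached services running, for example via `service httpd start` and `service memcached start`, plus `chkconfig` to enable them at boot.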

Testing

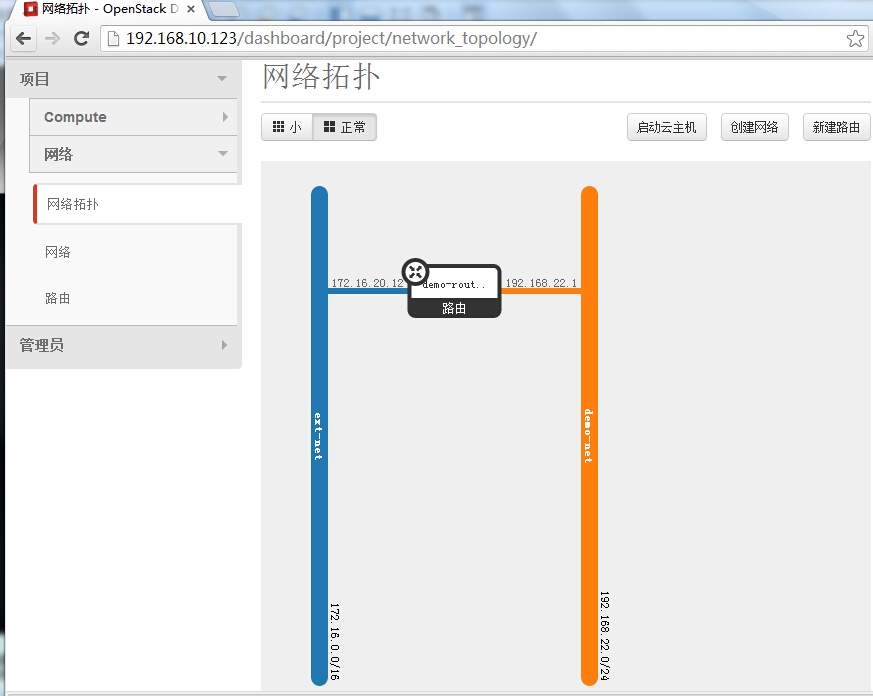

View the network topology


Launching instances

- [root@controller ~]# ssh-keygen

- [root@controller ~]# nova keypair-add --pub-key ~/.ssh/id_rsa.pub demo-key

- [root@controller ~]# nova keypair-list

- +----------+-------------------------------------------------+

- | Name | Fingerprint |

- +----------+-------------------------------------------------+

- | demo-key | e1:36:ed:57:2c:26:96:6c:81:8c:2d:63:d2:15:2f:09 |

- +----------+-------------------------------------------------+

Launch an instance

Launching an instance in OpenStack requires specifying a VM configuration template (a flavor). First, list the available flavors.

- [root@controller ~]# nova flavor-list

- +----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

- | ID | Name | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |

- +----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

- | 1 | m1.tiny | 512 | 1 | 0 | | 1 | 1.0 | True |

- | 2 | m1.small | 2048 | 20 | 0 | | 1 | 1.0 | True |

- | 3 | m1.medium | 4096 | 40 | 0 | | 2 | 1.0 | True |

- | 4 | m1.large | 8192 | 80 | 0 | | 4 | 1.0 | True |

- | 5 | m1.xlarge | 16384 | 160 | 0 | | 8 | 1.0 | True |

- +----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

Create a flavor with a smaller memory size for test-booting CirrOS

- [root@controller ~]# nova flavor-create --is-public true m1.cirros 6 256 1 1

- +----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

- | ID | Name | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |

- +----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

- | 6 | m1.cirros | 256 | 1 | 0 | | 1 | 1.0 | True |

- +----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

List all available images

- [root@controller ~]# nova image-list

- +--------------------------------------+---------------------+--------+--------+

- | ID | Name | Status | Server |

- +--------------------------------------+---------------------+--------+--------+

- | 6a820f7e-dcb8-40c8-af8b-27297f2673a3 | cirros-0.3.4-x86_64 | ACTIVE | |

- +--------------------------------------+---------------------+--------+--------+

List all available networks

- [root@controller ~]# neutron net-list

- +--------------------------------------+----------+------------------------------------------------------+

- | id | name | subnets |

- +--------------------------------------+----------+------------------------------------------------------+

- | a71cc567-08ad-4000-b273-e1b300fa642b | demo-net | 5aa02cca-4c51-4606-939f-5f5623374ce0 192.168.22.0/24 |

- | d44c19c2-2fe1-40e8-b07d-094111fe1a5e | ext-net | 07fe3ef7-118a-483f-b53e-df7f6629454c 172.16.0.0/16 |

- +--------------------------------------+----------+------------------------------------------------------+

Boot the instance

- [root@controller ~]# nova boot --flavor m1.cirros --image cirros-0.3.4-x86_64 --nic net-id=a71cc567-08ad-4000-b273-e1b300fa642b \

- > --security-group default --key-name demo-key demo-i1
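Copying the network UUID by hand is error-prone. The ID can instead be looked up by name from the `neutron net-list` table. Below is a minimal sketch that splits the table on "|" and matches the name column exactly. net_id_by_name is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Read a `neutron net-list` table from stdin and print the ID of the
# network whose name column equals NAME (first match only).
net_id_by_name() {
  awk -F'|' -v n="$1" '
    { name = $3; gsub(/^[ \t]+|[ \t]+$/, "", name) }
    name == n { id = $2; gsub(/^[ \t]+|[ \t]+$/, "", id); print id; exit }
  '
}
```

For example, use `--nic net-id=$(neutron net-list | net_id_by_name demo-net)` in the `nova boot` command above. Matching the whole column rather than a substring avoids picking up a network whose name merely contains "demo-net".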

View the instance

- [root@controller ~]# nova list

- +--------------------------------------+---------+--------+------------+-------------+-----------------------+

- | ID | Name | Status | Task State | Power State | Networks |

- +--------------------------------------+---------+--------+------------+-------------+-----------------------+

- | 15a35c37-2be2-4998-b98e-e2e472df0142 | demo-i1 | ACTIVE | - | Running | demo-net=192.168.22.2 |

- +--------------------------------------+---------+--------+------------+-------------+-----------------------+

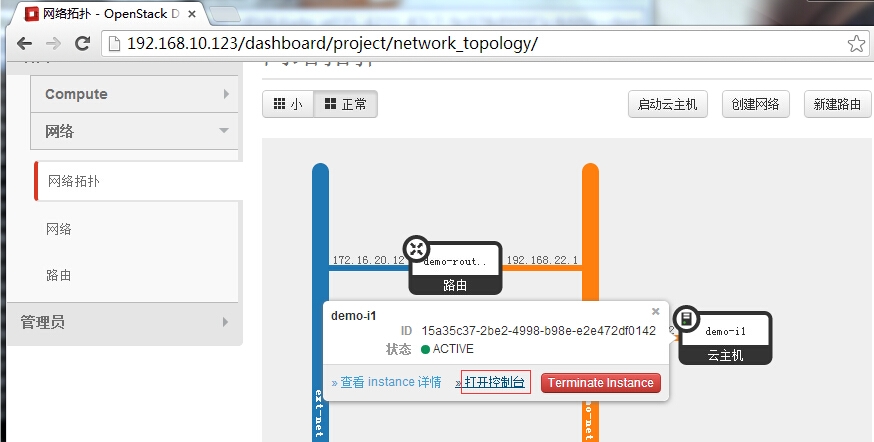

Open the console and log in

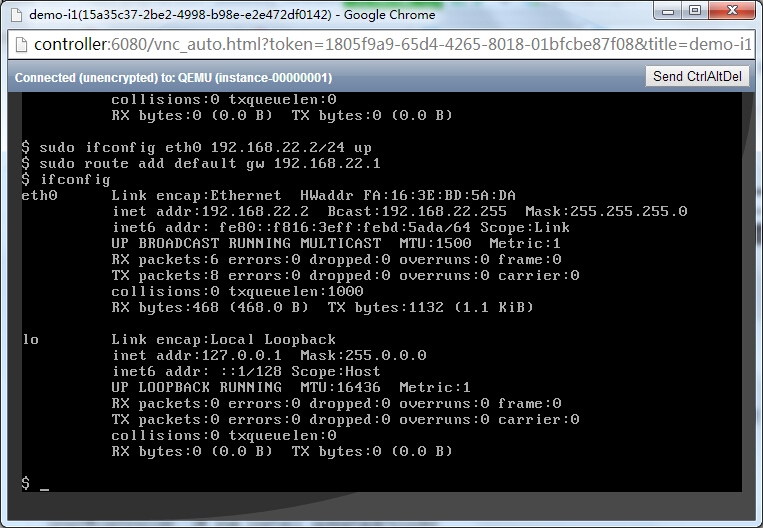

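After logging in, I found that the instance had not obtained an IP address. I couldn't tell why and didn't want to dwell on it, so I configured the address manually.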


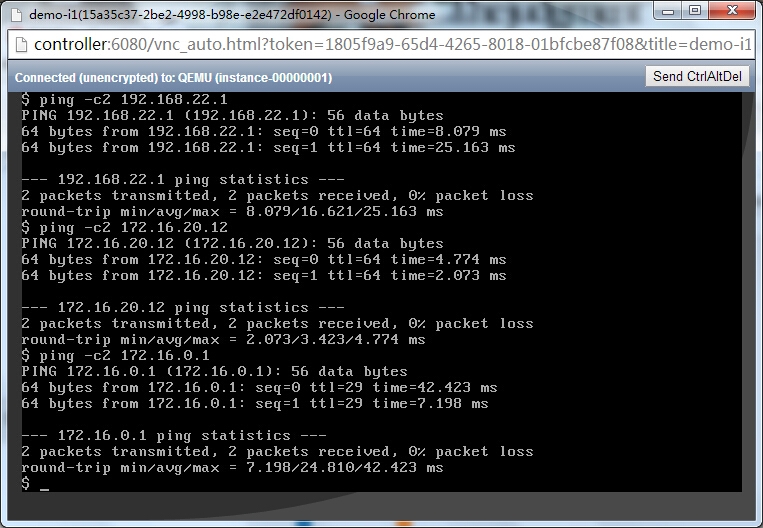

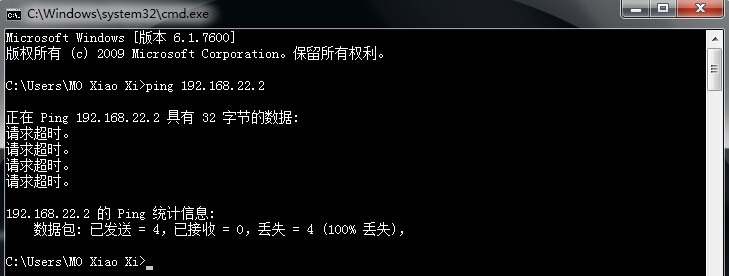

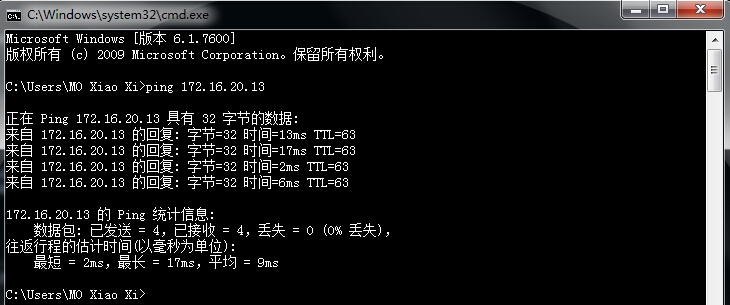

Test network connectivity

Ping the virtual internal gateway, the virtual external gateway, and the real external gateway in turn.

The tests above show that the instance's networking works. But can external hosts communicate with the instance?

As this shows, external hosts cannot reach the instance yet. Solving this requires the floating IP mechanism.

floating ip

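Put simply, a floating IP works as follows. A network namespace emulates a router. The router's external interface is bridged to a bridge device that reaches the external network through a physical interface. Its internal interface serves as the gateway for the VMs attached to the internal bridge. An IP address is then configured on the external interface and translated via DNAT to a specific internal host. Conversely, the router uses SNAT to translate packets sent from that internal host to that specific address on the external interface. This is how the external network and internal VMs communicate.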
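The DNAT/SNAT pair described above can be illustrated with the addresses this guide ends up using (floating 172.16.20.13, fixed 192.168.22.2). Below is a minimal sketch that only prints the rules and never applies them. It is a simplified illustration: the L3 agent installs its own chains inside the router namespace rather than appending to the built-in chains shown here. floating_ip_nat_rules is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print (do not apply) the two NAT rules that conceptually implement a
# floating IP inside the router namespace.
floating_ip_nat_rules() {
  local floating=$1 fixed=$2
  # Inbound: traffic addressed to the floating IP is redirected to the VM.
  echo "iptables -t nat -A PREROUTING -d ${floating}/32 -j DNAT --to-destination ${fixed}"
  # Outbound: traffic from the VM leaves with the floating IP as its source.
  echo "iptables -t nat -A POSTROUTING -s ${fixed}/32 -j SNAT --to-source ${floating}"
}
```

To see the rules the L3 agent actually installed, run `ip netns exec qrouter-a8752270-67da-4118-a053-2858b0ba1762 iptables -t nat -S` on the network node. The namespace name is "qrouter-" followed by demo-router's ID.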

Create a floating IP

Again, run these on the Controller node

- [root@controller ~]# neutron floatingip-create ext-net

- Created a new floatingip:

- +---------------------+--------------------------------------+

- | Field | Value |

- +---------------------+--------------------------------------+

- | fixed_ip_address | |

- | floating_ip_address | 172.16.20.13 |

- | floating_network_id | d44c19c2-2fe1-40e8-b07d-094111fe1a5e |

- | id | de133088-d319-4094-9a2e-0b1762c85061 |

- | port_id | |

- | router_id | |

- | status | DOWN |

- | tenant_id | 684ae003069d41d883f9cd0fcb252ae7 |

- +---------------------+--------------------------------------+

Associate the floating IP with the target instance

- [root@controller ~]# nova floating-ip-associate demo-i1 172.16.20.13

- [root@controller ~]# nova list

- +--------------------------------------+---------+--------+------------+-------------+-------------------------------------+

- | ID | Name | Status | Task State | Power State | Networks |

- +--------------------------------------+---------+--------+------------+-------------+-------------------------------------+

- | 15a35c37-2be2-4998-b98e-e2e472df0142 | demo-i1 | ACTIVE | - | Running | demo-net=192.168.22.2, 172.16.20.13 |

- +--------------------------------------+---------+--------+------------+-------------+-------------------------------------+

Modify the default security group rules

- [root@controller ~]# nova secgroup-add-rule default icmp -1 -1 0.0.0.0/0

- +-------------+-----------+---------+-----------+--------------+

- | IP Protocol | From Port | To Port | IP Range | Source Group |

- +-------------+-----------+---------+-----------+--------------+

- | icmp | -1 | -1 | 0.0.0.0/0 | |

- +-------------+-----------+---------+-----------+--------------+

Hosts on the external network can now reach the instance at 172.16.20.13.

The replies actually come from 192.168.22.2; I'll skip the packet-capture analysis here.

To also connect to 172.16.20.13 over SSH, run the following command as well:

- [root@controller ~]# nova secgroup-add-rule default tcp 22 22 0.0.0.0/0

With that, the private cloud is basically up and running. Next, let's look at another core component, Cinder, the Block Storage service.


Block Storage service

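Without shared storage, terminating an instance means deleting it, and its disk image file is deleted along with it. If the files users create on an instance should survive after the instance is re-created, back the compute nodes with shared storage.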

Controller node

Install the required packages

- [root@controller ~]# yum install openstack-cinder

Initialize the cinder database

- [root@controller ~]# openstack-db --init --service cinder --password cinder

Configure the cinder service

Configure the database connection URL

- [root@controller ~]# openstack-config --set /etc/cinder/cinder.conf \

- > database connection mysql://cinder:cinder@controller/cinder

Create the cinder user in Keystone

- [root@controller ~]# keystone user-create --name cinder --pass cinder --email cinder@scholar.com

- +----------+----------------------------------+

- | Property | Value |

- +----------+----------------------------------+

- | email | cinder@scholar.com |

- | enabled | True |

- | id | 57ec93556e744300a1f0217c26fd912b |

- | name | cinder |

- | username | cinder |

- +----------+----------------------------------+

- [root@controller ~]# keystone user-role-add --user=cinder --tenant=service --role=admin

Configure Keystone authentication

- [root@controller ~]# openstack-config --set /etc/cinder/cinder.conf DEFAULT \

- > auth_strategy keystone

- [root@controller ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > auth_uri http://controller:5000

- [root@controller ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > auth_host controller

- [root@controller ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > auth_protocol http

- [root@controller ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > auth_port 35357

- [root@controller ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > admin_user cinder

- [root@controller ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > admin_tenant_name service

- [root@controller ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > admin_password cinder

Configure cinder to use the message queue

- [root@controller ~]# openstack-config --set /etc/cinder/cinder.conf \

- > DEFAULT rpc_backend qpid

- [root@controller ~]# openstack-config --set /etc/cinder/cinder.conf \

- > DEFAULT qpid_hostname controller

Register the cinder service in Keystone

- [root@controller ~]# keystone service-create --name=cinder --type=volume --description="OpenStack Block Storage"

- +-------------+----------------------------------+

- | Property | Value |

- +-------------+----------------------------------+

- | description | OpenStack Block Storage |

- | enabled | True |

- | id | 15cbd46094f541e49f5d7a717d65101a |

- | name | cinder |

- | type | volume |

- +-------------+----------------------------------+

- [root@controller ~]# keystone endpoint-create \

- > --service-id=$(keystone service-list | awk '/ volume / {print $2}') \

- > --publicurl=http://controller:8776/v1/%\(tenant_id\)s \

- > --internalurl=http://controller:8776/v1/%\(tenant_id\)s \

- > --adminurl=http://controller:8776/v1/%\(tenant_id\)s

- +-------------+-----------------------------------------+

- | Property | Value |

- +-------------+-----------------------------------------+

- | adminurl | http://controller:8776/v1/%(tenant_id)s |

- | id | 0e71b9f2dad24f699dce6be1ce8f40be |

- | internalurl | http://controller:8776/v1/%(tenant_id)s |

- | publicurl | http://controller:8776/v1/%(tenant_id)s |

- | region | regionOne |

- | service_id | 15cbd46094f541e49f5d7a717d65101a |

- +-------------+-----------------------------------------+
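The endpoint commands look up the service ID with `awk '/ volume / {print $2}'`. The spaces around the name matter: they let the pattern match the volume row but not the volumev2 row. Below is a small demonstration on illustrative `keystone service-list`-style output (the table reuses the IDs printed above). It also includes a stricter variant that compares only the type column. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Illustrative `keystone service-list`-style output (IDs from this guide).
services='+----------------------------------+----------+----------+----------------------------+
|                id                |   name   |   type   |        description         |
+----------------------------------+----------+----------+----------------------------+
| 15cbd46094f541e49f5d7a717d65101a |  cinder  |  volume  |  OpenStack Block Storage   |
| dbd3b5d766f546cfb54dfc8a75f56a8e | cinderv2 | volumev2 | OpenStack Block Storage v2 |
+----------------------------------+----------+----------+----------------------------+'

# Same idea as the one-liner above: match " TYPE " anywhere in the row,
# then print the second whitespace-separated field (the ID).
id_by_type_loose() {
  awk -v t=" $1 " 'index($0, t) > 0 { print $2 }'
}

# Stricter: compare only the type column (4th "|"-separated field), so a
# description containing the word cannot cause a false match.
id_by_type_strict() {
  awk -F'|' -v t="$1" '
    { type = $4; gsub(/[ \t]/, "", type) }
    type == t { id = $2; gsub(/[ \t]/, "", id); print id }
  '
}
```

Running `printf '%s\n' "$services" | id_by_type_loose volume` prints only 15cbd46094f541e49f5d7a717d65101a. Without the surrounding spaces, the pattern would also match the volumev2 row and pass two IDs to `--service-id`.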

- [root@controller ~]# keystone service-create --name=cinderv2 --type=volumev2 --description="OpenStack Block Storage v2"

- +-------------+----------------------------------+

- | Property | Value |

- +-------------+----------------------------------+

- | description | OpenStack Block Storage v2 |

- | enabled | True |

- | id | dbd3b5d766f546cfb54dfc8a75f56a8e |

- | name | cinderv2 |

- | type | volumev2 |

- +-------------+----------------------------------+

- [root@controller ~]# keystone endpoint-create \

- > --service-id=$(keystone service-list | awk '/ volumev2 / {print $2}') \

- > --publicurl=http://controller:8776/v2/%\(tenant_id\)s \

- > --internalurl=http://controller:8776/v2/%\(tenant_id\)s \

- > --adminurl=http://controller:8776/v2/%\(tenant_id\)s

- +-------------+-----------------------------------------+

- | Property | Value |

- +-------------+-----------------------------------------+

- | adminurl | http://controller:8776/v2/%(tenant_id)s |

- | id | 40edb783979842e99f95d75cfc5abbe8 |

- | internalurl | http://controller:8776/v2/%(tenant_id)s |

- | publicurl | http://controller:8776/v2/%(tenant_id)s |

- | region | regionOne |

- | service_id | dbd3b5d766f546cfb54dfc8a75f56a8e |

- +-------------+-----------------------------------------+

Start the services

- [root@controller ~]# service openstack-cinder-api start

- Starting openstack-cinder-api: [ OK ]

- [root@controller ~]# service openstack-cinder-scheduler start

- Starting openstack-cinder-scheduler: [ OK ]

- [root@controller ~]# chkconfig openstack-cinder-api on

- [root@controller ~]# chkconfig openstack-cinder-scheduler on


Configure the storage node

Prepare the LVM volume group

- [root@block ~]# pvcreate /dev/sdb

- Physical volume "/dev/sdb" successfully created

- [root@block ~]# vgcreate cinder-volumes /dev/sdb

- Volume group "cinder-volumes" successfully created

Install and configure the cinder storage service

Install the required packages

- [root@block ~]# yum install openstack-cinder scsi-target-utils

Keystone configuration

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf DEFAULT \

- > auth_strategy keystone

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > auth_uri http://controller:5000

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > auth_host controller

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > auth_protocol http

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > auth_port 35357

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > admin_user cinder

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > admin_tenant_name service

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf keystone_authtoken \

- > admin_password cinder

Message queue configuration

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf \

- > DEFAULT rpc_backend qpid

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf \

- > DEFAULT qpid_hostname controller

Database connection configuration

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf \

- > database connection mysql://cinder:cinder@controller/cinder

Configure the interface address this node uses to provide the cinder-volume service

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf DEFAULT my_ip 192.168.10.126

Specify the Glance service node

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf \

- > DEFAULT glance_host controller

Specify where volume information files are stored

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf \

- > DEFAULT volumes_dir /etc/cinder/volumes

Configure the SCSI target

- [root@block ~]# openstack-config --set /etc/cinder/cinder.conf \

- > DEFAULT iscsi_helper tgtadm

- [root@block ~]# vim /etc/tgt/targets.conf

- include /etc/cinder/volumes/*
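Appending the include line with a script rather than vim risks adding it twice on a re-run. Below is a minimal idempotent sketch. ensure_line is a hypothetical helper. It also adds a newline first if the file does not end with one, so the directive never gets glued onto the previous line.

```shell
#!/usr/bin/env bash
# Append LINE to FILE only if an identical line is not already present.
ensure_line() {
  local line=$1 file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null && return 0
  # Add a newline first if the file is non-empty and lacks a trailing one.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$line" >> "$file"
}
```

For example: `ensure_line 'include /etc/cinder/volumes/*' /etc/tgt/targets.conf`.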

Start the services

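In the Icehouse openstack-cinder package from Fedora's EPEL repository, the openstack-cinder-volume service reads /usr/share/cinder/cinder-dist.conf first by default, and that file contains incorrect settings. If you start the service as-is, the volumes it creates cannot be attached to instances, so prevent the service from reading this file.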
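The step above does not show how to stop the service from reading cinder-dist.conf. One approach is to drop that `--config-file` argument from the init script, in the same spirit as the neutron-openvswitch-agent edit earlier. Below is a minimal sketch. It assumes, without verifying, that /etc/init.d/openstack-cinder-volume passes the file as `--config-file $distconfig`; check first with `grep -n distconfig /etc/init.d/openstack-cinder-volume`. drop_distconfig is a hypothetical helper, and `sed -i` assumes GNU sed.

```shell
#!/usr/bin/env bash
# Remove the "--config-file $distconfig " argument from an init script so
# the daemon reads only /etc/cinder/cinder.conf. Keeps a .orig backup
# (created only once). Assumption: the script references cinder-dist.conf
# through the $distconfig variable.
drop_distconfig() {
  local script=${1:-/etc/init.d/openstack-cinder-volume}
  [ -f "${script}.orig" ] || cp -p "$script" "${script}.orig"
  sed -i 's/--config-file \$distconfig //g' "$script"
}
```

After running `drop_distconfig`, start openstack-cinder-volume as shown below. The original script remains available as /etc/init.d/openstack-cinder-volume.orig.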

- [root@block ~]# service openstack-cinder-volume start

- Starting openstack-cinder-volume: [ OK ]

- [root@block ~]# service tgtd start

- Starting SCSI target daemon: [ OK ]

- [root@block ~]# chkconfig openstack-cinder-volume on

- [root@block ~]# chkconfig tgtd on

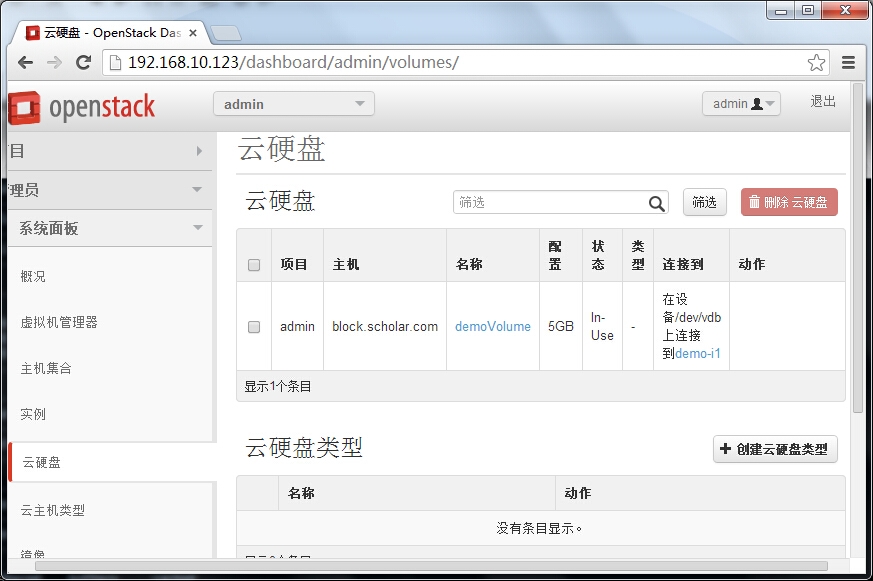

Volume creation test

On the controller node, run the following command to create a 5 GB volume named demoVolume.

- [root@controller ~]# cinder create --display-name demoVolume 5

- +---------------------+--------------------------------------+

- | Property | Value |

- +---------------------+--------------------------------------+

- | attachments | [] |

- | availability_zone | nova |

- | bootable | false |

- | created_at | 2015-07-27T15:08:11.145570 |

- | display_description | None |

- | display_name | demoVolume |

- | encrypted | False |

- | id | ab0d03a8-4e89-4a17-8dc3-3432426f07a2 |

- | metadata | {} |

- | size | 5 |

- | snapshot_id | None |

- | source_volid | None |

- | status | creating |

- | volume_type | None |

- +---------------------+--------------------------------------+
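The new volume starts in the "creating" state and only becomes "available" a moment later. A script should therefore wait before calling `nova volume-attach`. Below is a minimal sketch. It reads a field from the "Property | Value" table that cinder prints and polls a command until it returns the wanted value. table_field, wait_for_output and volume_status are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Print the Value column for FIELD from a "Property | Value" table on stdin.
table_field() {
  awk -F'|' -v f="$1" '
    { k = $2; gsub(/^[ \t]+|[ \t]+$/, "", k) }
    k == f { v = $3; gsub(/^[ \t]+|[ \t]+$/, "", v); print v; exit }
  '
}

# Run a command up to TRIES times, DELAY seconds apart, until it prints
# WANT. Returns 0 on success, 1 on timeout.
wait_for_output() {
  local want=$1 tries=$2 delay=$3
  shift 3
  local i
  for (( i = 1; i <= tries; i++ )); do
    [ "$("$@")" = "$want" ] && return 0
    (( i < tries )) && sleep "$delay"
  done
  return 1
}

# Hypothetical wrapper: current status of a volume, via `cinder show`.
volume_status() {
  cinder show "$1" | table_field status
}
```

For example, `wait_for_output available 30 2 volume_status ab0d03a8-4e89-4a17-8dc3-3432426f07a2 && nova volume-attach demo-i1 ab0d03a8-4e89-4a17-8dc3-3432426f07a2` waits up to about a minute before attaching.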

List all volumes

- [root@controller ~]# cinder list

- +--------------------------------------+-----------+--------------+------+-------------+----------+-------------+

- | ID | Status | Display Name | Size | Volume Type | Bootable | Attached to |

- +--------------------------------------+-----------+--------------+------+-------------+----------+-------------+

- | ab0d03a8-4e89-4a17-8dc3-3432426f07a2 | available | demoVolume | 5 | None | false | |

- +--------------------------------------+-----------+--------------+------+-------------+----------+-------------+

Attach the volume to the specified instance

- [root@controller ~]# nova volume-attach demo-i1 ab0d03a8-4e89-4a17-8dc3-3432426f07a2

- +----------+--------------------------------------+

- | Property | Value |

- +----------+--------------------------------------+

- | device | /dev/vdb |

- | id | ab0d03a8-4e89-4a17-8dc3-3432426f07a2 |

- | serverId | 15a35c37-2be2-4998-b98e-e2e472df0142 |

- | volumeId | ab0d03a8-4e89-4a17-8dc3-3432426f07a2 |

- +----------+--------------------------------------+

Check the attachment result

- [root@controller ~]# cinder list

- +--------------------------------------+--------+--------------+------+-------------+----------+--------------------------------------+

- | ID | Status | Display Name | Size | Volume Type | Bootable | Attached to |

- +--------------------------------------+--------+--------------+------+-------------+----------+--------------------------------------+

- | ab0d03a8-4e89-4a17-8dc3-3432426f07a2 | in-use | demoVolume | 5 | None | false | 15a35c37-2be2-4998-b98e-e2e472df0142 |

- +--------------------------------------+--------+--------------+------+-------------+----------+--------------------------------------+

The volume is attached. You can now open the instance's console to check the attached disk and work with it; I won't demonstrate that here.

The end

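Hooray, it's finally done! I won't cover the non-core components; this article is already long enough to make my head spin. The lab was no small effort either. With limited memory, everything kept freezing, and I half expected the machine to crash outright; luckily I wasn't stuck with 2 GB of RAM. These are just my personal study notes, so if there are mistakes or omissions, please go easy on me~~~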