
It's here! Kimi open-sources the MoE model Moonlight-16B-A3B!!

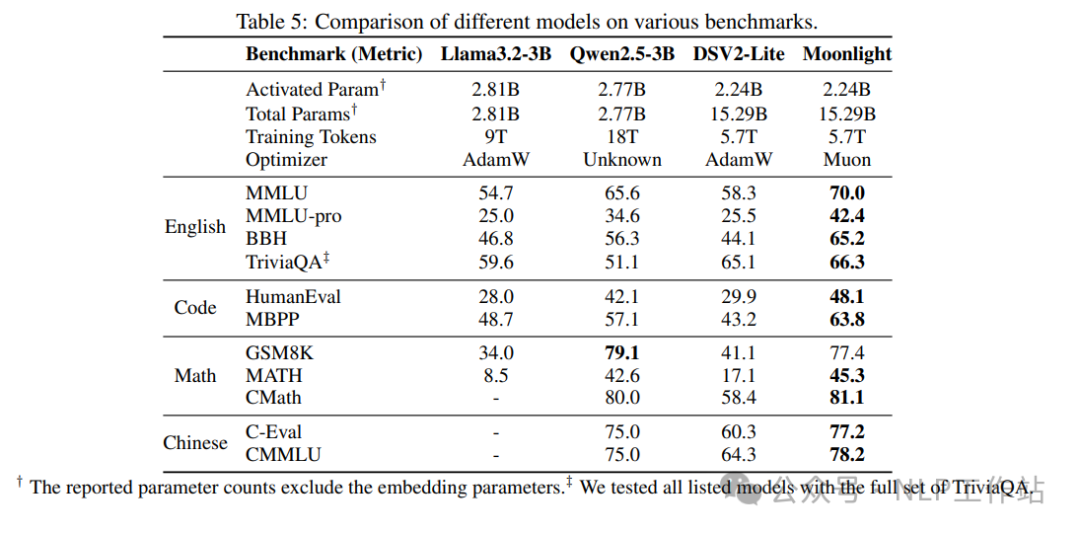

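In short: Moonshot AI (月之暗面) has open-sourced an MoE model with 15.29B total parameters and 2.24B activated parameters. Trained with the Muon optimizer on 5.7T tokens, it achieves strong results.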
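The headline numbers can be related with a quick back-of-the-envelope calculation. This is only a rough sketch: the activated fraction follows directly from the two parameter counts, while the training-compute figure uses the common ~6·N·D rule of thumb (N = activated parameters per token, D = training tokens). That estimate is an approximation, not a number reported by the authors.

```python
# Figures quoted in the announcement.
TOTAL_PARAMS = 15.29e9   # total parameters
ACTIVE_PARAMS = 2.24e9   # parameters activated per token
TRAIN_TOKENS = 5.7e12    # training tokens

# Share of the weights that each token actually passes through.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

# Rough training compute via the ~6 * N * D heuristic (an approximation;
# N is taken as the activated parameters, since only those run per token).
approx_train_flops = 6 * ACTIVE_PARAMS * TRAIN_TOKENS

print(f"activated fraction: {active_fraction:.1%}")
print(f"approx. training compute: {approx_train_flops:.2e} FLOPs")
```

Each token passes through under 15% of the weights, which is why an MoE model's per-token compute is much closer to that of a small dense model than its total parameter count suggests.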

GitHub: https://github.com/MoonshotAI/Moonlight

HF: https://huggingface.co/moonshotai/Moonlight-16B-A3B

Paper: https://github.com/MoonshotAI/Moonlight/blob/master/Moonlight.pdf

Results:

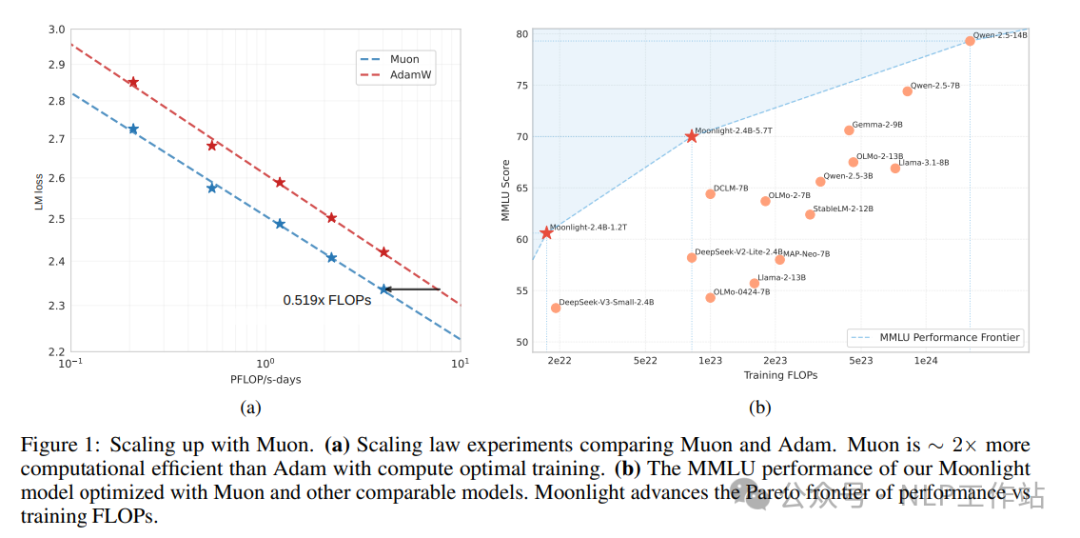

Scaling-law experiments comparing Muon and Adam show that Muon is about 2× as sample-efficient as Adam.

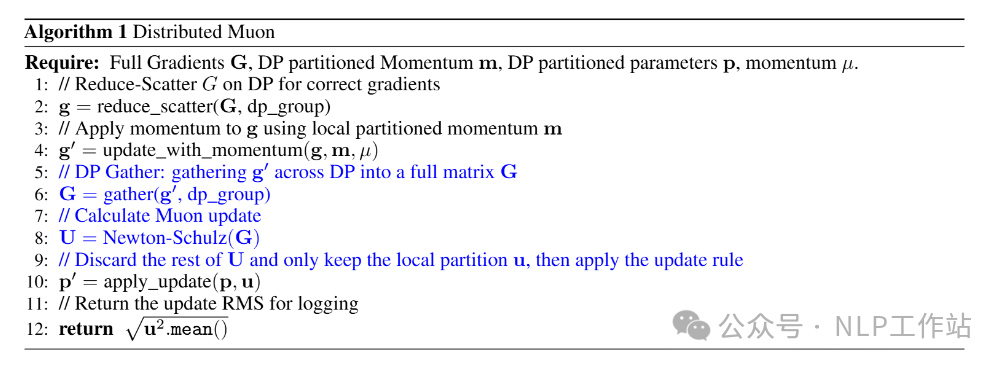

How the Muon optimizer works:
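As a rough illustration, here is a minimal NumPy sketch of one Muon step for a single 2-D weight matrix. It assumes the formulation described in the Moonlight paper: Nesterov-style momentum on the gradient, approximate orthogonalization of the update with a quintic Newton-Schulz iteration (coefficients taken from Keller Jordan's public Muon implementation), decoupled weight decay, and an update scaled by 0.2·sqrt(max(A, B)) so that its RMS roughly matches AdamW's. The function names `newton_schulz` and `muon_step` and all default hyperparameters are hypothetical; this is not the authors' code.

```python
import numpy as np

def newton_schulz(G, steps=5, eps=1e-7):
    """Approximately orthogonalize G (push its singular values toward ~1)."""
    a, b, c = 3.4445, -4.7750, 2.0315        # quintic coefficients (assumed, from public Muon code)
    X = G / (np.linalg.norm(G) + eps)        # Frobenius-normalize so singular values are <= 1
    transposed = X.shape[0] > X.shape[1]
    if transposed:                           # work on the wide orientation: smaller Gram matrix
        X = X.T
    for _ in range(steps):
        A = X @ X.T
        B = b * A + c * A @ A
        X = a * X + B @ X                    # X <- a X + b (X X^T) X + c (X X^T)^2 X
    return X.T if transposed else X

def muon_step(W, grad, buf, lr=0.02, momentum=0.95, weight_decay=0.1, nesterov=True):
    """One hypothetical Muon update for a 2-D weight W; returns (new W, new momentum buffer)."""
    buf = momentum * buf + grad                         # momentum accumulation
    g = grad + momentum * buf if nesterov else buf      # Nesterov-style lookahead
    O = newton_schulz(g)                                # orthogonalized update direction
    scale = 0.2 * np.sqrt(max(W.shape))                 # match AdamW-like update RMS (~0.2)
    W = W * (1 - lr * weight_decay) - lr * scale * O    # decoupled weight decay + update
    return W, buf

# Usage: a single step on a random 64x32 matrix.
rng = np.random.default_rng(0)
W = rng.standard_normal((64, 32)) * 0.02
buf = np.zeros_like(W)
grad = rng.standard_normal(W.shape)
W, buf = muon_step(W, grad, buf)
```

The key difference from Adam is that the update is treated as a matrix: Newton-Schulz flattens its singular values, so no single direction dominates, and the sqrt(max(A, B)) factor keeps the update size comparable across matrices of different shapes.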

In addition, Moonlight-16B-A3B uses the same model architecture as DeepSeek-V3.

Quick start with Hugging Face:

Reposted from NLP工作站 (NLP Workstation). Author: 刘聪NLP

Last edited 2025-02-25 13:57:45
