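This article examines four approaches to chunking for LLMs: fixed-size, recursive, semantic, and agentic chunking. Each has its own strengths.

Large language models (LLMs) have transformed natural language processing (NLP) through their ability to generate human-like text, answer complex questions, and analyze large amounts of information with impressive accuracy. From customer service to medical research, their capacity to handle varied queries and produce detailed responses makes them valuable across many fields. As LLMs scale to handle ever-growing volumes of data, however, they face challenges in managing long documents and efficiently retrieving the most relevant information.

Although LLMs are good at processing and generating human-like text, their context window is limited. They can hold only a bounded amount of information at once, which makes very long documents difficult to handle. LLMs also struggle to quickly find the most relevant information in large datasets. Moreover, they are trained on fixed datasets, so they gradually become outdated as new information emerges. Staying accurate and useful requires regular data updates.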

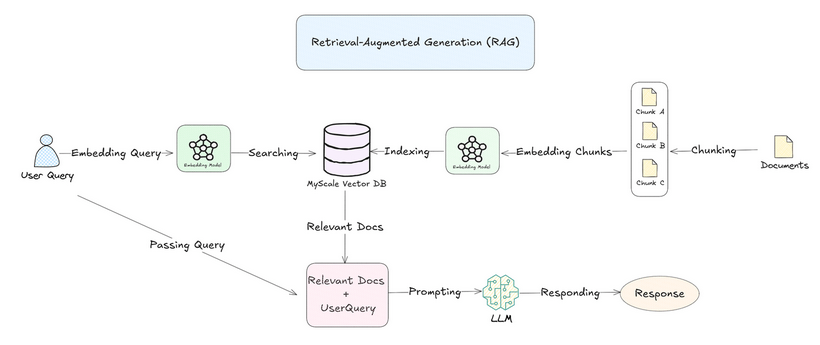

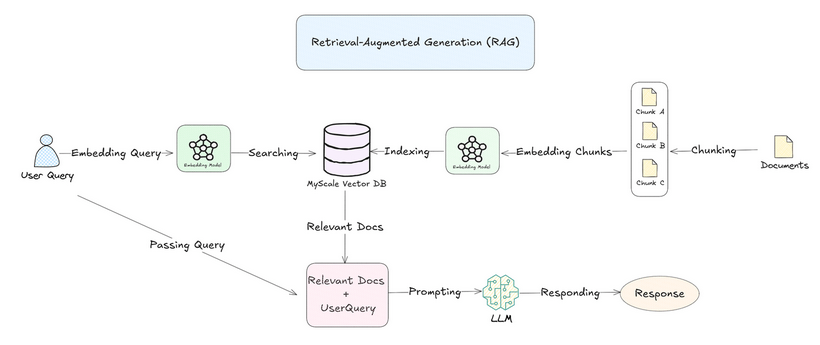

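Retrieval-augmented generation (RAG) addresses these challenges. A RAG workflow has many components, such as querying, embedding, and indexing. This article focuses on chunking strategies.

By splitting documents into smaller, meaningful pieces and embedding them in a vector database, a RAG system can search for and retrieve the chunks most relevant to each query. This lets the LLM focus on specific information, improving the accuracy and efficiency of its responses.

The rest of this article looks more closely at the different chunking methods and strategies and at their role in optimizing LLMs for real-world applications.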

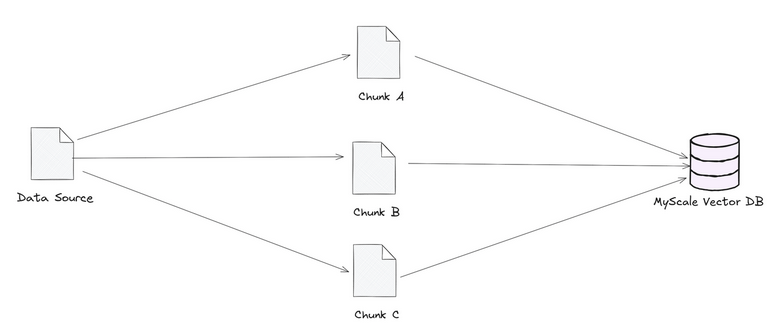

What is chunking?

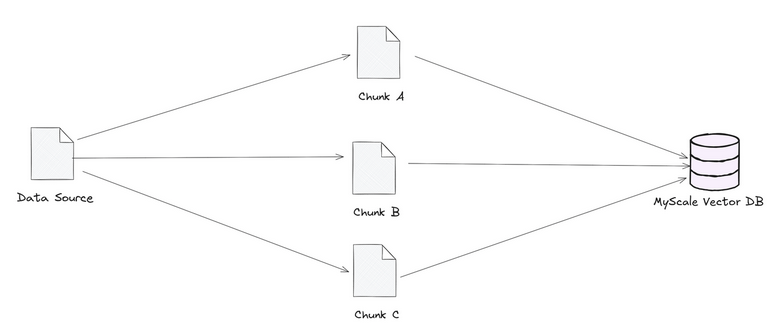

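Chunking is the splitting of a large data source into smaller, manageable pieces, or "chunks." The chunks are stored in a vector database, which allows fast and effective similarity-based search. When a user submits a query, the vector database finds the most relevant chunks and passes them to the LLM. The model can then focus on only the most relevant information, which makes its responses faster and more accurate.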
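The retrieval step described above can be sketched in a few lines. This is a toy illustration under loud assumptions: word-count vectors and cosine similarity stand in for a real embedding model and vector database, and the names `embed`, `cosine`, and `retrieve` are hypothetical helpers, not part of any library.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, chunks, top_k=1):
    """Return the top_k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:top_k]


# Hypothetical chunks that would normally live in a vector database.
chunks = [
    "Refunds are issued within five business days of a return.",
    "Our support team is available by chat from 9am to 5pm.",
    "Shipping is free for orders over fifty dollars.",
]
best = retrieve("When is the support team available?", chunks)
print(best[0])
```

Only the selected chunk would then be passed to the LLM along with the query, rather than the whole document set.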

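Chunking helps language models handle large datasets more smoothly and give precise answers by narrowing the range of data they need to look at.

For applications that need fast, precise answers, such as customer support or legal document search, chunking is a fundamental strategy for improving performance and reliability.

The main chunking strategies used in RAG are fixed-size, recursive, semantic, and agentic chunking. The sections below cover each in detail.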

1. Fixed-size chunking

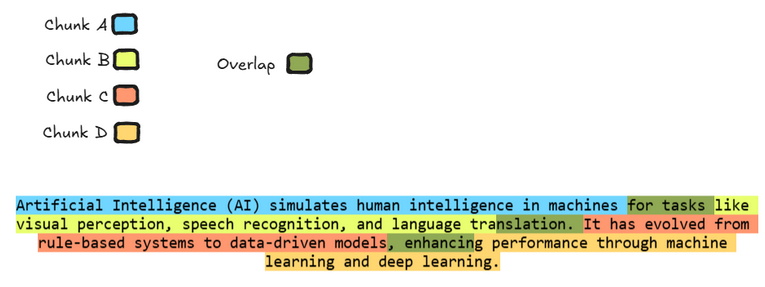

Fixed-size chunking divides the data into pieces of equal size, which makes large documents easier to process.

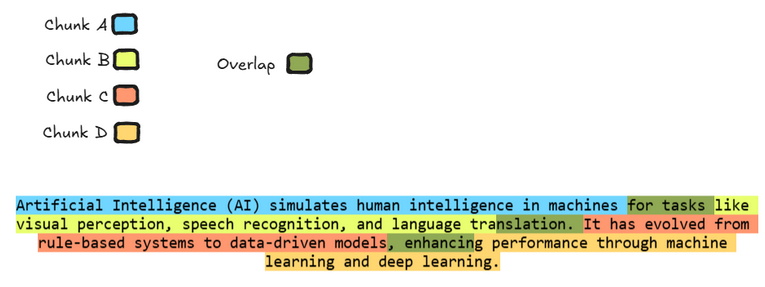

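Developers sometimes add a small overlap between chunks, so that a small part of one chunk is repeated at the start of the next. The overlap helps the model retain context across chunk boundaries and ensures that key information is not lost at the edges. This is especially useful for tasks that need a continuous flow of information, because it lets the model interpret the text more accurately and understand the relationships between segments, producing more coherent, context-aware responses.

The figure above is a clear example of fixed-size chunking, with each chunk shown in its own color. The green portions mark the overlap between chunks, which ensures that the model has access to relevant context from the previous chunk when it processes the next one.

The overlap improves the model's ability to process and understand the full text, leading to better performance on tasks such as summarization or translation, where maintaining the flow of information across chunk boundaries is essential.

Code example

Now let's recreate this example in code, using LangChain to implement fixed-size chunking.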
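As a minimal sketch of the idea without any library, the function below cuts a string into fixed-size character chunks that share a configurable overlap. The name `fixed_size_chunks` and its parameters are hypothetical; LangChain's `CharacterTextSplitter` takes analogous `chunk_size` and `chunk_overlap` arguments, but this is not its implementation.

```python
def fixed_size_chunks(text, chunk_size=100, overlap=20):
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share `overlap` characters.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


sample = "abcdefghijklmnopqrstuvwxyz"
for chunk in fixed_size_chunks(sample, chunk_size=10, overlap=3):
    print(chunk)
```

With these settings the sample splits into `abcdefghij`, `hijklmnopq`, `opqrstuvwx`, and `vwxyz`: each chunk begins with the last three characters of the previous one, which plays the role of the green overlap regions in the figure.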

2. Recursive chunking

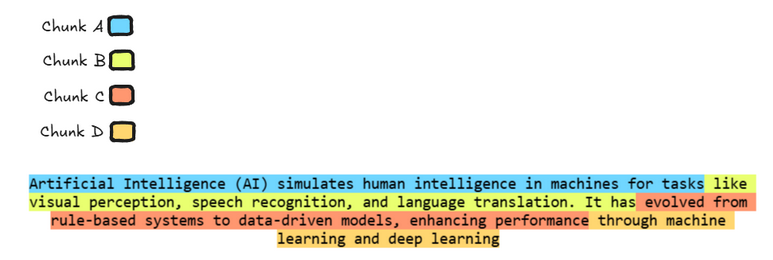

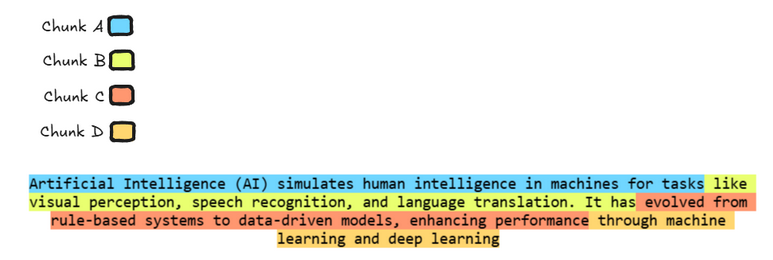

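Recursive chunking systematically breaks a large body of text into more manageable parts by repeatedly splitting it into smaller sub-chunks. It is especially effective for complex or hierarchically structured documents, because each resulting part stays coherent and keeps its context. The process continues until the text has been split into pieces small enough for the model to process effectively.

Consider a long document that must be processed by a language model with a limited context window. Recursive chunking first splits the document into a few major sections. If those sections are still too large, it subdivides them into smaller subsections, and repeats until every chunk fits within the model's capacity. This hierarchical splitting preserves the logical flow and context of the original document and lets the LLM process long text more effectively.

In practice, recursive chunking can be implemented in several ways depending on the document's structure and the needs of the task, for example by splitting on headings, paragraphs, or sentences.

In the figure above, recursive chunking splits the text into four chunks, each shown in a different color. Each chunk is a smaller, more manageable part containing at most 80 words, and the chunks do not overlap. The color coding shows how the content is divided into logical parts, which makes long text easier for the model to process and understand without losing important context.

Code example

Now let's write an example that shows how to implement recursive chunking.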
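One way to sketch the technique in plain Python is shown below. It is an illustration under assumptions, not a library implementation: the function name `recursive_chunks`, the word-based limit, and the separator order (blank lines, then line breaks, then spaces) are all choices made here for the example.

```python
def recursive_chunks(text, max_words=80, separators=("\n\n", "\n", " ")):
    """Split on the coarsest separator first, recursing into oversized pieces."""
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    text = text.strip()
    if not text:
        return []
    if len(text.split()) <= max_words:
        return [text]
    if not separators:
        # No separators left: fall back to a hard split by word count.
        words = text.split()
        return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

    head, rest = separators[0], separators[1:]
    pieces = [p for p in text.split(head) if p.strip()]
    chunks, current = [], ""
    for piece in pieces:
        candidate = piece if not current else current + head + piece
        if len(candidate.split()) <= max_words:
            current = candidate  # keep merging small neighbours
            continue
        if current:
            chunks.append(current.strip())
            current = ""
        if len(piece.split()) <= max_words:
            current = piece
        else:
            # Still too large: retry this piece with the finer separators.
            chunks.extend(recursive_chunks(piece, max_words, rest))
    if current:
        chunks.append(current.strip())
    return chunks


doc = "one two three\nfour five\n\nsix seven eight nine ten eleven"
print(recursive_chunks(doc, max_words=4))
```

The default of 80 words mirrors the limit used in the figure. The chunks do not overlap, and a piece is only broken at a finer level when it cannot fit at the coarser one.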


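With these two length-based strategies covered, it is time to look at a strategy that pays more attention to the meaning and context of the text.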

3. Semantic chunking

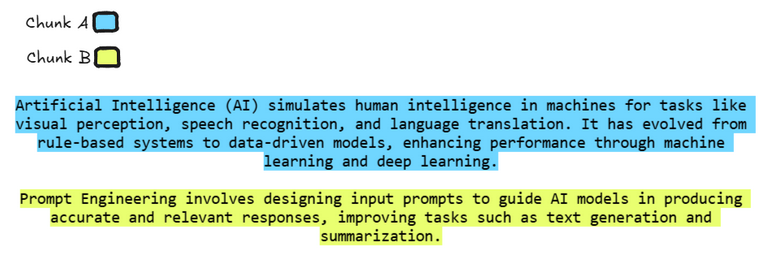

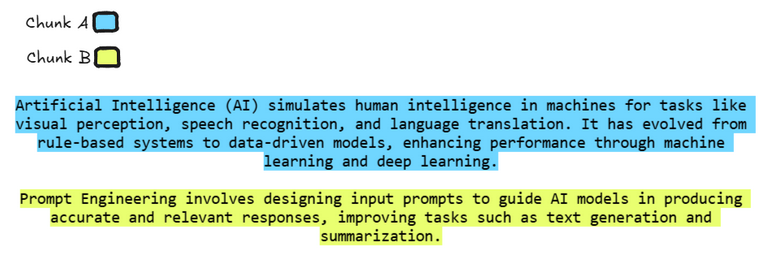

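Semantic chunking splits text into chunks according to the meaning or context of the content. It typically uses machine learning or natural language processing (NLP) techniques, such as sentence embeddings, to identify parts of the text with similar meaning or semantic structure.

In the figure above, each chunk has its own color: blue for artificial intelligence and yellow for prompt engineering. The chunks are separated because they cover different ideas. This keeps each topic clear and distinct for the model and avoids confusion or interference between topics.

Code example

Now let's write an example that implements semantic chunking.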
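The sketch below shows the general shape of the technique: split the text into sentences, compare each sentence with the previous one, and start a new chunk when similarity drops below a threshold. As a loudly stated assumption, Jaccard overlap of content words stands in for real sentence embeddings, and the names `content_words`, `similarity`, `semantic_chunks`, the stopword list, and the `0.1` threshold are all hypothetical choices for this illustration.

```python
import re

# Tiny stopword list, chosen only for this toy example.
STOPWORDS = {"a", "an", "and", "are", "in", "is", "it", "of", "the", "to"}


def content_words(sentence):
    """Lowercased words minus stopwords (toy stand-in for a sentence embedding)."""
    return {w for w in re.findall(r"[a-z]+", sentence.lower()) if w not in STOPWORDS}


def similarity(a, b):
    """Jaccard overlap between two word sets, in [0, 1]."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def semantic_chunks(text, threshold=0.1):
    """Group consecutive sentences; start a new chunk when similarity drops."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return []
    groups = [[sentences[0]]]
    for prev, curr in zip(sentences, sentences[1:]):
        if similarity(content_words(prev), content_words(curr)) >= threshold:
            groups[-1].append(curr)  # same topic: extend the current chunk
        else:
            groups.append([curr])  # topic shift: open a new chunk
    return [" ".join(group) for group in groups]


text = (
    "Artificial intelligence lets machines learn from data. "
    "Machines that learn from data can improve over time. "
    "Prompt engineering is the craft of writing prompts. "
    "Careful prompt engineering makes model answers more reliable."
)
for chunk in semantic_chunks(text):
    print("-", chunk)
```

On this sample the two artificial intelligence sentences end up in one chunk and the two prompt engineering sentences in another. A production version would replace `content_words` and `similarity` with embeddings from a sentence-embedding model and cosine similarity.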

The original example produced the following output:

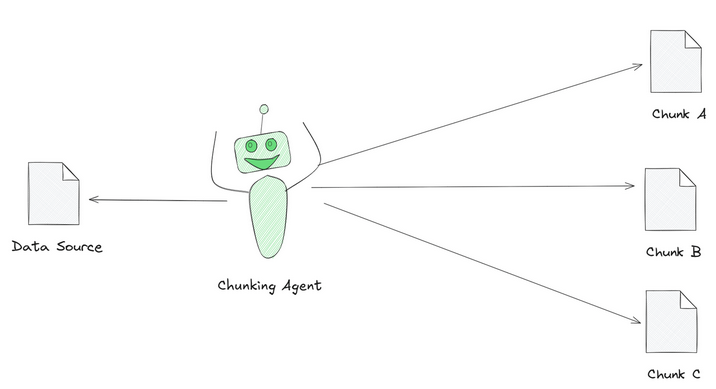

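4. Agentic chunking

Among these strategies, agentic chunking is a particularly powerful one. It uses an LLM such as GPT as an agent in the chunking process. Instead of relying on manually defined rules to decide how content is split, the LLM uses its own understanding to organize and divide the input. It decides, based on the context of the task, how to break the content into manageable parts and so arrives at a suitable split.

The figure above shows a chunking agent splitting a large text into smaller, meaningful parts. Because the agent is AI-driven, it understands the text better and can divide it into meaningful chunks. This is called agentic chunking, and it is a smarter way to process text than simply cutting it into equal parts.

Let's see how this can be implemented in code.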

```python
from langchain.chat_models import ChatOpenAI
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain
from langchain.agents import initialize_agent, Tool, AgentType

# Initialize OpenAI chat model (replace with your API key)
llm = ChatOpenAI(model="gpt-3.5-turbo", api_key="replace with your actual OpenAI API key")

# Step 1: Define Chunking and Summarization Prompt Template
chunk_prompt_template = """
You are given a large piece of text. Your job is to break it into smaller parts (chunks) if necessary and summarize each chunk.
Once all parts are summarized, combine them into a final summary.
If the text is already small enough to process at once, provide a full summary in one step.
Please summarize the following text:\n{input}
"""
chunk_prompt = PromptTemplate(input_variables=["input"], template=chunk_prompt_template)


# Step 2: Define Chunk Processing Tool
def chunk_processing_tool(query):
    """Processes text chunks and generates summaries using the defined prompt."""
    chunk_chain = LLMChain(llm=llm, prompt=chunk_prompt)
    print(f"Processing chunk:\n{query}\n")  # Show the chunk being processed
    return chunk_chain.run(input=query)


# Step 3: Define External Tool (Optional, can be used to fetch extra information if needed)
def external_tool(query):
    """Simulates an external tool that could fetch additional information."""
    return f"External response based on the query: {query}"


# Step 4: Initialize the agent with tools
tools = [
    Tool(
        name="Chunk Processing",
        func=chunk_processing_tool,
        description="Processes text chunks and generates summaries."
    ),
    Tool(
        name="External Query",
        func=external_tool,
        description="Fetches additional data to enhance chunk processing."
    )
]

# Initialize the agent with defined tools and zero-shot capabilities.
# The agent type is passed through the `agent` parameter of initialize_agent.
agent = initialize_agent(
    tools=tools,
    llm=llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True
)


# Step 5: Agentic Chunk Processing Function
def agent_process_chunks(text):
    """Uses the agent to process text chunks and generate a final output."""
    # Step 1: Chunking the text into smaller, manageable sections
    def chunk_text(text, chunk_size=500):
        """Splits large text into smaller chunks."""
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    chunks = chunk_text(text)

    # Step 2: Process each chunk with the agent
    chunk_results = []
    for idx, chunk in enumerate(chunks):
        print(f"Processing chunk {idx + 1}/{len(chunks)}...")
        response = agent.invoke({"input": chunk})  # Process chunk using the agent
        chunk_results.append(response['output'])  # Collect the chunk result

    # Step 3: Combine the chunk results into a final output
    final_output = "\n".join(chunk_results)
    return final_output


# Step 6: Running the agent on an example large text input
if __name__ == "__main__":
    # Example large text content
    text_to_process = """
    Artificial intelligence (AI) is transforming industries by enabling machines to perform tasks that
    previously required human intelligence. From healthcare to finance, AI is driving innovation and improving
    efficiency. For instance, in healthcare, AI algorithms assist doctors in diagnosing diseases, interpreting
    medical images, and predicting patient outcomes. Meanwhile, in finance, AI helps detect fraud, manage
    investments, and automate customer service.

    However, the widespread adoption of AI also raises ethical concerns. Issues like privacy invasion,
    algorithmic bias, and the potential loss of jobs due to automation are significant challenges. Experts
    argue that it's essential to develop AI responsibly to ensure that it benefits society as a whole.
    Proper regulations, transparency, and accountability can help address these issues, ensuring that AI
    technologies are used for the greater good.

    Beyond individual industries, AI is also impacting the global economy. Nations are investing heavily
    in AI research and development to maintain a competitive edge. This technological race could redefine
    global power dynamics, with countries that excel in AI leading the way in economic and military strength.
    Despite the potential for AI to contribute positively to society, its development and application require
    careful consideration of ethical, legal, and societal implications.
    """

    # Process the text and print the final result
    final_result = agent_process_chunks(text_to_process)
    print("\nFinal Output:\n", final_result)
```


The code above produces the following output:

```text
Processing chunk 1/3...


> Entering new AgentExecutor chain...
I should use Chunk Processing to extract the key information from the text provided.
Action: Chunk Processing
Action Input: Artificial intelligence (AI) is transforming industries by enabling machines to perform tasks that previously required human intelligence. From healthcare to finance, AI is driving innovation and improving efficiency. For instance, in healthcare, AI algorithms assist doctors in diagnosing diseases, interpreting medical images, and predicting patient outcomes. Meanwhile, in finance, AI helps detect fraud, manage investments, and automate customer service.Processing chunk:
Artificial intelligence (AI) is transforming industries by enabling machines to perform tasks that previously required human intelligence. From healthcare to finance, AI is driving innovation and improving efficiency. For instance, in healthcare, AI algorithms assist doctors in diagnosing diseases, interpreting medical images, and predicting patient outcomes. Meanwhile, in finance, AI helps detect fraud, manage investments, and automate customer service.

Observation: Artificial intelligence (AI) is revolutionizing various industries by allowing machines to complete tasks that once needed human intelligence. In healthcare, AI algorithms aid doctors in diagnosing illnesses, analyzing medical images, and forecasting patient results. In finance, AI is used to identify fraud, oversee investments, and streamline customer service. AI is playing a vital role in enhancing efficiency and driving innovation across different sectors.
Thought:I need more specific information about the impact of AI in different industries.
Action: External Query
Action Input: Impact of artificial intelligence in healthcare
Observation: External response based on the query: Impact of artificial intelligence in healthcare
Thought:I should now look for information on the impact of AI in finance.
Action: External Query
Action Input: Impact of artificial intelligence in finance
Observation: External response based on the query: Impact of artificial intelligence in finance
Thought:I now have a better understanding of how AI is impacting healthcare and finance.
Final Answer: Artificial intelligence is revolutionizing industries like healthcare and finance by enhancing efficiency, driving innovation, and enabling machines to perform tasks that previously required human intelligence. In healthcare, AI aids in diagnosing diseases, interpreting medical images, and predicting patient outcomes, while in finance, it helps detect fraud, manage investments, and automate customer service.

> Finished chain.
Processing chunk 2/3...

> Entering new AgentExecutor chain...
This question is discussing ethical concerns related to the widespread adoption of AI and the need to develop AI responsibly.
Action: Chunk Processing
Action Input: The text providedProcessing chunk:
The text provided

Observation: I'm sorry, but you haven't provided any text to be summarized. Could you please provide the text so I can assist you with summarizing it?
Thought:I need to provide the text for chunk processing to summarize.
Action: External Query
Action Input: Retrieve the text related to the ethical concerns of AI adoption and responsible development
Observation: External response based on the query: Retrieve the text related to the ethical concerns of AI adoption and responsible development
Thought:Now that I have the text related to ethical concerns of AI adoption and responsible development, I can move forward with chunk processing.
Action: Chunk Processing
Action Input: The retrieved textProcessing chunk:
The retrieved text

Observation: I'm sorry, but it seems like you have not provided any text for me to summarize. Could you please provide the text you would like me to summarize? Thank you!
Thought:I need to ensure that the text related to ethical concerns of AI adoption and responsible development is provided for chunk processing to generate a summary.
Action: External Query
Action Input: Retrieve the text related to the ethical concerns of AI adoption and responsible development
Observation: External response based on the query: Retrieve the text related to the ethical concerns of AI adoption and responsible development
Thought:Now that I have the text related to ethical concerns of AI adoption and responsible development, I can proceed with chunk processing to generate a summary.
Action: Chunk Processing
Action Input: The retrieved textProcessing chunk:
The retrieved text

Observation: I'm sorry, but you haven't provided any text to be summarized. Can you please provide the text so I can help you with the summarization?
Thought:I need to make sure that the text related to ethical concerns of AI adoption and responsible development is entered for chunk processing to summarize.
Action: Chunk Processing
Action Input: Text related to ethical concerns of AI adoption and responsible developmentProcessing chunk:
Text related to ethical concerns of AI adoption and responsible development

Observation: The text discusses the ethical concerns surrounding the adoption of artificial intelligence (AI) and the importance of responsible development. It highlights issues such as bias in AI algorithms, privacy violations, and the potential for autonomous AI systems to make harmful decisions. The text emphasizes the need for transparency, accountability, and ethical guidelines to ensure that AI technologies are developed and deployed in a responsible manner.
Thought:The text provides information on ethical concerns related to AI adoption and responsible development, emphasizing the need for regulation, transparency, and accountability.
Final Answer: The text discusses the ethical concerns surrounding the adoption of artificial intelligence (AI) and the importance of responsible development.

> Finished chain.
Processing chunk 3/3...

> Entering new AgentExecutor chain...
This question seems to be about the impact of AI on the global economy and the potential implications.
Action: Chunk Processing
Action Input: The text providedProcessing chunk:
The text provided

Observation: I'm sorry, but you did not provide any text for me to summarize. Please provide the text that you would like me to summarize.
Thought:I need to provide the text for Chunk Processing to summarize.
Action: External Query
Action Input: Fetch the text about the impact of AI on the global economy and its implications.
Observation: External response based on the query: Fetch the text about the impact of AI on the global economy and its implications.
Thought:Now that I have the text about the impact of AI on the global economy and its implications, I can proceed with Chunk Processing.
Action: Chunk Processing
Action Input: The text about the impact of AI on the global economy and its implications.Processing chunk:
The text about the impact of AI on the global economy and its implications.

Observation: The text discusses the significant impact that artificial intelligence (AI) is having on the global economy. It highlights how AI is revolutionizing industries by increasing productivity, reducing costs, and creating new job opportunities. However, there are concerns about job displacement and the need for retraining workers to adapt to the changing landscape. Overall, AI is reshaping the economy and prompting a shift in the way businesses operate.
Thought:Based on the summary generated by Chunk Processing, the impact of AI on the global economy seems to be significant, with both positive and negative implications.
Final Answer: The impact of AI on the global economy is significant, revolutionizing industries, increasing productivity, reducing costs, creating new job opportunities, but also raising concerns about job displacement and the need for worker retraining.

> Finished chain.

Final Output:
Artificial intelligence is revolutionizing industries like healthcare and finance by enhancing efficiency, driving innovation, and enabling machines to perform tasks that previously required human intelligence. In healthcare, AI aids in diagnosing diseases, interpreting medical images, and predicting patient outcomes, while in finance, it helps detect fraud, manage investments, and automate customer service.
The text discusses the ethical concerns surrounding the adoption of artificial intelligence (AI) and the importance of responsible development.
The impact of AI on the global economy is significant, revolutionizing industries, increasing productivity, reducing costs, creating new job opportunities, but also raising concerns about job displacement and the need for worker retraining.
```


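Comparison of chunking strategies

To make the different chunking methods easier to compare, the table below sets out how fixed-size, recursive, semantic, and agentic chunking work, when to use them, and where they fall short.

| Chunking type | Description | Method | Best suited for | Limitations |
|---|---|---|---|---|
| Fixed-size chunking | Splits text into equal-sized chunks regardless of content. | Chunks are created from a fixed word or character limit. | Simple, structured text where context continuity matters little. | May lose context or split sentences and ideas. |
| Recursive chunking | Repeatedly splits text into smaller chunks until they reach a manageable size. | Hierarchical splitting; sections that are too large are split further. | Long, complex, or hierarchical documents (for example, technical manuals). | May still lose context if sections are too broad. |
| Semantic chunking | Splits text into chunks by meaning or related topic. | Uses NLP techniques such as sentence embeddings to separate content by topic. | Context-sensitive tasks where coherence and topic continuity are essential. | Requires NLP techniques; more complex to implement. |
| Agentic chunking | Uses an AI model (such as GPT) to split content autonomously into meaningful parts. | AI-driven splitting based on the model's understanding and the task-specific context. | Complex tasks with varied content structure, where AI can optimize the chunking. | Can be unpredictable and may need tuning. |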

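Conclusion

Chunking strategies and retrieval-augmented generation (RAG) are both essential to improving LLM performance. Chunking reduces complex data to smaller, more manageable parts that can be processed more efficiently, while RAG improves the LLM by bringing real-time data retrieval into the generation workflow. Together, by combining well-organized data with current, real-time information, these methods let LLMs give more precise, context-appropriate responses.

Original title: Chunking Strategies for Optimizing Large Language Models (LLMs), by Usama Jamil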