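Agent Techniques in Prompt Engineering: Building Intelligent, Autonomous AI Systems

Today we move into a more complex and dynamic area: agent techniques in prompt engineering. These techniques let us build AI systems that make decisions on their own, carry out complex sequences of tasks, and even interact with people and other systems. Let's explore how to design and implement these agents, and how they change the way we interact with AI.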

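1. Why Agent Techniques Matter in AI

Before getting into the technical details, let's look at why agent techniques matter so much in modern AI systems:

- Task complexity: as AI use cases grow more complex, a single static prompt is no longer enough. Agents can handle complex tasks that require multiple steps, decisions, and planning.

- Autonomy: agent techniques let AI systems operate more independently, reducing the need for human intervention.

- Adaptability: agents can adjust their behavior dynamically as the environment and the task change, which makes the system more flexible.

- Interaction: agents can carry out complex interactions with human users, other AI systems, or external tools.

- Continuous learning: by interacting with their environment, agents can keep learning and improving their performance.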

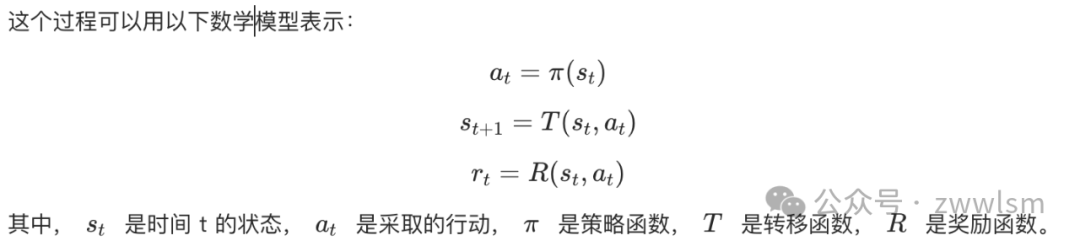

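2. Basic Principles of AI Agents

At the core of an AI agent is a decision loop, which typically includes the following steps:

- Perception: collect information from the environment.

- Reasoning: reason and decide based on the collected information.

- Action: carry out the chosen action.

- Learning: learn from the outcome of the action and update the knowledge base.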
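
The four steps above can be sketched as a single loop iteration. This is a minimal illustration, not a reference implementation: the class name `LoopAgent`, its `reason` callable, and the `environment` and `act` callables are all hypothetical names introduced here.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoopAgent:
    """Hypothetical agent that runs one perceive-reason-act-learn cycle per step."""
    reason: Callable[[str, list], str]  # maps (observation, knowledge) to an action
    knowledge: list = field(default_factory=list)

    def step(self, environment: Callable[[], str], act: Callable[[str], str]) -> str:
        observation = environment()                            # Perception
        action = self.reason(observation, self.knowledge)      # Reasoning
        result = act(action)                                   # Action
        self.knowledge.append((observation, action, result))   # Learning
        return result
```

In a prompt-based agent, `reason` would typically wrap a call to a language model, and `knowledge` would be fed back into the next prompt.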

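3. Agent Techniques in Prompt Engineering

Now let's look at how to implement these agents with prompt engineering.

3.1 Rule-Based Agents

The simplest agents are driven by predefined rules. They are less flexible than more sophisticated approaches, but they remain very useful in some scenarios.

Even a small set of simple rules is enough to guide an AI agent's behavior.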
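
A minimal sketch of a rule-based agent might look like the following. It assumes a customer-support setting, and the rules themselves, the function names, and the `llm` parameter (any callable that takes a prompt string and returns the model's text) are illustrative assumptions rather than part of the original article.

```python
from typing import Callable, List

# Hypothetical rules for a support agent; replace with your own.
RULES = [
    "If the user asks for a refund, ask for the order number first.",
    "If the question is about pricing, point to the pricing page.",
    "Otherwise, answer briefly and politely.",
]


def build_rule_prompt(user_message: str, rules: List[str]) -> str:
    """Embed the rules as a numbered list ahead of the user's message."""
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return (
        "You are a support agent. Follow these rules in order:\n"
        f"{numbered}\n\n"
        f"User message: {user_message}\n"
        "Response:"
    )


def rule_based_agent(user_message: str, llm: Callable[[str], str]) -> str:
    """Send the rule-laden prompt to the model and return its reply."""
    return llm(build_rule_prompt(user_message, RULES))
```

Keeping the rules in a plain list makes them easy to review and change without touching the prompt template.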

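3.2 Goal-Based Agents

Goal-based agents are more flexible: given a goal, they plan and execute tasks on their own.

Such an agent can analyze a complex goal, break it into subtasks, and execute them step by step to reach the goal.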
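
One way to sketch this plan-then-execute pattern is shown below. It assumes the model returns its plan as a numbered list, which is a convention chosen here for illustration. `parse_steps`, `goal_based_agent`, and the `llm` callable are hypothetical names.

```python
import re
from typing import Callable, List


def parse_steps(plan_text: str) -> List[str]:
    """Extract lines of the form '1. do something' or '2) do something'."""
    steps = []
    for line in plan_text.splitlines():
        match = re.match(r"^\s*\d+[.)]\s+(.*\S)", line)
        if match:
            steps.append(match.group(1))
    return steps


def goal_based_agent(goal: str, llm: Callable[[str], str]) -> List[str]:
    """Ask the model for a plan, then execute each subtask in order."""
    plan = llm(f"Goal: {goal}\nBreak this goal into a numbered list of subtasks.")
    results: List[str] = []
    for step in parse_steps(plan):
        done = "\n".join(results) or "(nothing yet)"
        # Each subtask sees the goal and the results produced so far.
        results.append(llm(f"Goal: {goal}\nCompleted so far:\n{done}\nNow do: {step}"))
    return results
```

Passing earlier results into each later prompt is what lets subtasks build on one another. A real system would also want to handle a plan that parses to zero steps.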

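3.3 Tool-Using Agents

A more advanced type of agent can use external tools to complete tasks. This greatly extends what an AI system can do.

Such an agent selects and uses the appropriate external tool for the task at hand, which considerably strengthens its problem-solving ability.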
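
A minimal sketch of tool selection follows. It assumes the model is asked to reply with a small JSON object naming a tool and its input. That reply format, the two toy tools, and the names `TOOLS`, `tool_agent`, and `llm` are assumptions made for illustration, not a specific vendor's function-calling API.

```python
import json
from typing import Callable, Dict

# Hypothetical tool registry: each tool maps an input string to an output string.
TOOLS: Dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
    "upper": lambda text: text.upper(),
}


def tool_agent(task: str, llm: Callable[[str], str]) -> str:
    """Let the model pick a tool by name, then run that tool locally."""
    prompt = (
        f"Task: {task}\n"
        f"Available tools: {', '.join(sorted(TOOLS))}\n"
        'Reply with JSON only, e.g. {"tool": "upper", "input": "text"}.'
    )
    try:
        choice = json.loads(llm(prompt))
        tool = TOOLS[choice["tool"]]
        return tool(str(choice["input"]))
    except (json.JSONDecodeError, KeyError, TypeError):
        # Malformed JSON, unknown tool name, or a reply that is not an object.
        return "error: could not select a valid tool"
```

Looking the tool up in a fixed registry, rather than evaluating whatever the model returns, keeps the model from invoking anything that was not explicitly exposed.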

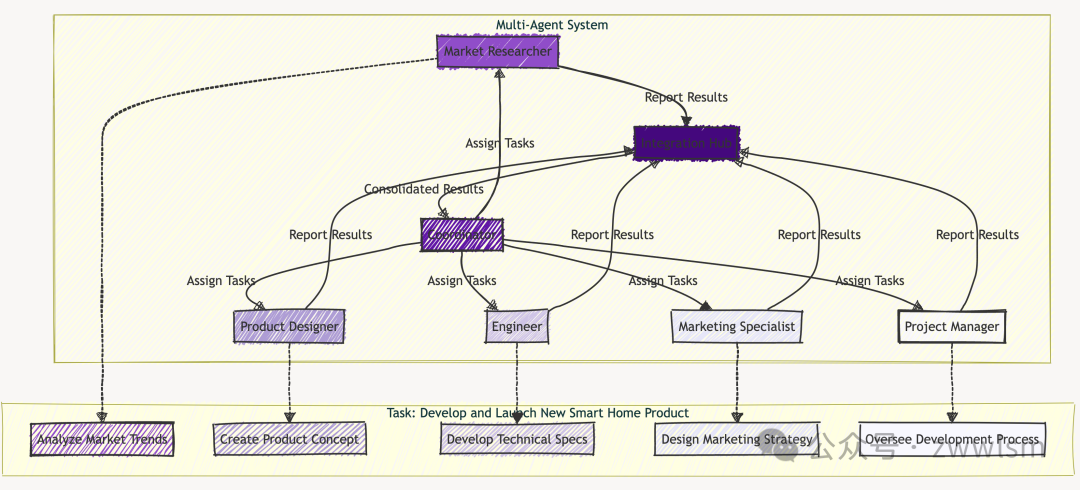

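3.4 Multi-Agent Systems

In some complex scenarios, we may need several agents working together. Each agent can focus on a specific task or role, and together they accomplish a larger goal.

A system of this kind coordinates multiple specialized agents to complete a complex task.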
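
The simplest form of coordination is a pipeline in which each role-specific agent receives the previous agent's output. The sketch below assumes that sequential hand-off. The role names passed in, `make_agent`, `run_team`, and `llm` are hypothetical.

```python
from typing import Callable, List, Tuple


def make_agent(role: str, llm: Callable[[str], str]) -> Callable[[str], str]:
    """Create an agent that is just a role-specific prompt around the same model."""
    def agent(work: str) -> str:
        return llm(f"You are the {role}. Improve or extend the work below.\n\n{work}")
    return agent


def run_team(task: str, roles: List[str], llm: Callable[[str], str]) -> List[Tuple[str, str]]:
    """Pass the work through each role in order and keep a transcript."""
    transcript: List[Tuple[str, str]] = []
    work = task
    for role in roles:
        work = make_agent(role, llm)(work)
        transcript.append((role, work))
    return transcript
```

For example, `run_team("Write a post about agents", ["researcher", "writer", "reviewer"], llm)` would return one `(role, output)` pair per agent. More elaborate systems add a coordinator that decides which agent runs next.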

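4. Advanced Techniques and Best Practices

In practice, the following techniques can help you design and implement AI agents more effectively:

4.1 Memory and State Management

An agent needs to remember past interactions and decisions in order to stay coherent and to learn.

Even a simple combination of short-term and long-term memory lets an agent keep state across multiple interactions.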
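
A minimal sketch of such a memory is shown below. It assumes short-term memory is a bounded window of recent turns and long-term memory is a key-value store of facts. The class `AgentMemory` and its method names are hypothetical.

```python
from collections import deque
from typing import Deque, Dict, Tuple


class AgentMemory:
    """Bounded short-term memory plus a simple long-term fact store."""

    def __init__(self, short_term_size: int = 3) -> None:
        # deque(maxlen=...) silently drops the oldest turn when full.
        self.short_term: Deque[Tuple[str, str]] = deque(maxlen=short_term_size)
        self.long_term: Dict[str, str] = {}

    def record_turn(self, user: str, agent: str) -> None:
        self.short_term.append((user, agent))

    def remember(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def as_context(self) -> str:
        """Render both memories as text to prepend to the next prompt."""
        facts = "\n".join(f"- {k}: {v}" for k, v in self.long_term.items())
        turns = "\n".join(f"User: {u}\nAgent: {a}" for u, a in self.short_term)
        return f"Known facts:\n{facts}\n\nRecent conversation:\n{turns}"
```

The bounded window keeps the prompt from growing without limit, while facts stored with `remember` survive after the turns that produced them have been dropped.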

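4.2 Metacognition and Self-Improvement

An advanced agent should be able to evaluate its own performance and keep learning and improving.

Such an agent reflects on its past performance and applies what it has learned to new tasks.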
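
One way to sketch this reflect-and-reuse cycle: after each task, the agent asks the model for a one-sentence lesson and includes all stored lessons in later prompts. The class `ReflectiveAgent`, the prompt wording, and the `llm` callable are assumptions for illustration.

```python
from typing import Callable, List


class ReflectiveAgent:
    """Agent that stores a short lesson after every task and reuses it later."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.lessons: List[str] = []

    def run(self, task: str) -> str:
        lessons = "\n".join(f"- {item}" for item in self.lessons) or "- (none yet)"
        answer = self.llm(f"Lessons from earlier tasks:\n{lessons}\n\nTask: {task}")
        # Second call: ask the model to critique its own answer.
        reflection = self.llm(
            f"Task: {task}\nAnswer: {answer}\n"
            "In one sentence, what should be done better next time?"
        )
        self.lessons.append(reflection.strip())
        return answer
```

This doubles the number of model calls per task. In a longer-running agent the lesson list would also need pruning or summarizing.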

4.3 Ethical Decision-Making

As AI agents become more autonomous, making sure they make ethical decisions becomes critical.

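Such an agent takes ethical considerations into account when carrying out a task, ensuring that its actions comply with predefined ethical guidelines.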
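
A minimal sketch of a guideline check that runs before any action is executed. The guidelines listed, the `ALLOW` / `BLOCK` reply convention, and the names `ethical_review`, `act_ethically`, and `llm` are illustrative assumptions. A prompt-based check like this is only one layer and does not replace other safeguards.

```python
from typing import Callable, List, Tuple

# Hypothetical guidelines; a real deployment would define its own.
GUIDELINES = [
    "Do not share personal data without consent.",
    "Do not deceive the user.",
    "Refuse actions that could cause harm.",
]


def ethical_review(action: str, guidelines: List[str],
                   llm: Callable[[str], str]) -> Tuple[bool, str]:
    """Ask the model to review an action; anything but ALLOW counts as a block."""
    rules = "\n".join(f"- {g}" for g in guidelines)
    verdict = llm(
        f"Guidelines:\n{rules}\n\nProposed action: {action}\n"
        "Answer 'ALLOW' or 'BLOCK: <reason>'."
    ).strip()
    allowed = verdict.upper().startswith("ALLOW")
    return allowed, verdict


def act_ethically(action: str, execute: Callable[[str], str],
                  llm: Callable[[str], str]) -> str:
    """Execute the action only if the review explicitly allows it."""
    allowed, verdict = ethical_review(action, GUIDELINES, llm)
    return execute(action) if allowed else f"refused ({verdict})"
```

The check fails closed: an ambiguous or malformed verdict is treated as a block rather than as permission.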

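5. Evaluation and Optimization

Evaluating an AI agent is more complex than evaluating a single prompt, because we need to consider the agent's overall performance across many interactions. Here are some approaches to evaluation and optimization:

5.1 Task Completion Evaluation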
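
The article names this metric without detailing a procedure. One plausible approach, offered here as an assumption rather than the author's method, is to run the agent on a set of test tasks, each paired with a checker function, and report the fraction that pass. `completion_rate` and its parameters are hypothetical names.

```python
from typing import Callable, List, Tuple

# A test case pairs a task with a function that checks the agent's output.
Case = Tuple[str, Callable[[str], bool]]


def completion_rate(agent: Callable[[str], str], cases: List[Case]) -> float:
    """Return the fraction of tasks whose output passes its checker."""
    if not cases:
        return 0.0
    passed = sum(1 for task, check in cases if check(agent(task)))
    return passed / len(cases)
```

Checkers can be as simple as a substring test or as involved as running generated code against unit tests.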

5.2 Decision Quality Evaluation
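
Decision quality is harder to check mechanically than task completion. One common pattern, sketched here under the assumption that a second model acts as a judge, is to rate each recorded decision on a 1 to 5 scale and average the ratings. The scale, the prompt wording, and the names `judge_decision`, `average_quality`, and `llm` are hypothetical.

```python
import re
from statistics import mean
from typing import Callable, List, Optional, Tuple


def judge_decision(situation: str, decision: str,
                   llm: Callable[[str], str]) -> Optional[int]:
    """Ask a judge model for a 1-5 rating; return None if no rating is found."""
    reply = llm(
        f"Situation: {situation}\nDecision: {decision}\n"
        "Rate the decision from 1 (poor) to 5 (excellent). Reply with the number only."
    )
    match = re.search(r"\b[1-5]\b", reply)
    return int(match.group()) if match else None


def average_quality(records: List[Tuple[str, str]],
                    llm: Callable[[str], str]) -> Optional[float]:
    """Average the ratings over all (situation, decision) records that got one."""
    ratings = (judge_decision(situation, decision, llm) for situation, decision in records)
    scores = [score for score in ratings if score is not None]
    return mean(scores) if scores else None
```

Judge models have their own biases, so it is worth spot-checking a sample of ratings against human judgment.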

5.3 Long-Term Learning and Adaptability Evaluation
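
A simple way to see whether an agent improves over time, assuming you already log a numeric score per interaction, is to compare the average of the earliest scores with the average of the most recent ones. The function name `improvement` and the window size are assumptions for illustration.

```python
from statistics import mean
from typing import List


def improvement(scores: List[float], window: int = 3) -> float:
    """Mean of the last `window` scores minus mean of the first `window` scores."""
    if window <= 0 or len(scores) < 2 * window:
        raise ValueError("need a positive window and at least 2 * window scores")
    return mean(scores[-window:]) - mean(scores[:window])
```

A positive value suggests the agent is getting better. A value near zero or below it suggests the learning mechanism is not helping, or that the tasks have become harder.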

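6. Practical Application: An Intelligent Personal Assistant

Let's bring together the agent techniques we have covered in a practical example. We will build an intelligent personal assistant that handles everyday tasks, learns the user's preferences, and makes ethical decisions.

This personal assistant combines several advanced features, including:

- State management: uses a memory system to remember past interactions.

- Context understanding: takes the current time and the user's history into account.

- Task decomposition: breaks complex requests into manageable steps.

- Ethical decision-making: ensures every action complies with predefined ethical guidelines.

- Adaptability: learns the user's preferences and behavior patterns through the memory system.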
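
The features listed above can be combined in a compact sketch like the one below. It is a simplified illustration, not the article's original implementation: the class `PersonalAssistant`, the guideline text, the five-turn history window, and the `llm` callable are all assumptions.

```python
from datetime import datetime
from typing import Callable, List, Optional


class PersonalAssistant:
    """Toy assistant combining memory, time context, decomposition, and guidelines."""

    GUIDELINES = "Protect the user's privacy. Refuse harmful or deceptive requests."

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.history: List[str] = []  # state management

    def handle(self, request: str, now: Optional[datetime] = None) -> str:
        now = now or datetime.now()
        recent = "\n".join(self.history[-5:]) or "(no history)"
        prompt = (
            f"Current time: {now:%Y-%m-%d %H:%M}\n"         # context understanding
            f"Ethical guidelines: {self.GUIDELINES}\n"      # ethical decision-making
            f"Recent interactions:\n{recent}\n\n"           # adaptability via memory
            f"Request: {request}\n"
            "Break the request into steps, check each step against the "
            "guidelines, then answer."                      # task decomposition
        )
        reply = self.llm(prompt)
        self.history.append(f"User: {request} | Assistant: {reply}")
        return reply
```

Here every feature is expressed through the prompt. A fuller implementation would likely reuse dedicated components, such as a separate memory store and a separate ethical review step.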

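7. Challenges of Agent Techniques and How to Address Them

Although agent techniques bring great potential to AI systems, they also face some distinctive challenges:

7.1 Long-Term Consistency

Challenge: keeping the agent's behavior consistent over long-running interactions.

Solutions:

- Implement a robust memory system with both short-term and long-term memory

- Periodically review and consolidate past interactions

- Use metacognitive techniques to monitor and adjust behavior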

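7.2 Error Accumulation

Challenge: an agent can accumulate errors over a series of decisions, so that the final result deviates badly from what was intended.

Solutions:

- Implement regular self-evaluation and correction mechanisms

- Bring in human feedback at key decision points

- Use techniques such as Monte Carlo tree search to simulate the long-term effects of decisions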
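
The first two solutions above can be sketched as a step runner that verifies every output, retries once on failure, and pauses for human approval at designated steps. This is a minimal illustration. `run_with_checks` and its callable parameters are hypothetical, and Monte Carlo tree search is not covered here.

```python
from typing import Callable, Collection, List


def run_with_checks(
    steps: List[str],
    execute: Callable[[str], str],
    verify: Callable[[str, str], bool],      # self-evaluation of (step, output)
    ask_human: Callable[[str, str], bool],   # human approval of (step, output)
    critical: Collection[int] = (),          # indices of key decision points
) -> List[str]:
    """Run steps in order, stopping early instead of building on a bad result."""
    outputs: List[str] = []
    for i, step in enumerate(steps):
        out = execute(step)
        if not verify(step, out):
            out = execute(step)  # one corrective retry
            if not verify(step, out):
                raise RuntimeError(f"step {i} failed verification: {step}")
        if i in critical and not ask_human(step, out):
            raise RuntimeError(f"step {i} rejected by reviewer: {step}")
        outputs.append(out)
    return outputs
```

Stopping at the first unverified step is the point of the design: later steps never see an output that failed its check.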

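7.3 Explainability

Challenge: as an agent's decision process grows more complex, explaining its decisions becomes harder.

Solutions:

- Implement a detailed decision-logging system

- Use explainable-AI techniques such as LIME or SHAP

- Build an interactive explanation interface that lets users ask why a specific decision was made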
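
A decision log can start out very simple. The sketch below assumes each decision is recorded with the observation, the options considered, the choice, and a stated reason. The `Decision` fields and the `DecisionLog` methods are hypothetical names, and LIME and SHAP are not used here.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class Decision:
    step: int
    observation: str
    options: List[str]
    chosen: str
    reason: str


class DecisionLog:
    """Append-only record of decisions that can answer 'why' questions later."""

    def __init__(self) -> None:
        self.entries: List[Decision] = []

    def record(self, observation: str, options: List[str], chosen: str, reason: str) -> None:
        step = len(self.entries) + 1
        self.entries.append(Decision(step, observation, options, chosen, reason))

    def explain(self, step: int) -> str:
        d = self.entries[step - 1]
        return f"Step {d.step}: chose '{d.chosen}' from {d.options} because {d.reason}"

    def to_jsonl(self) -> str:
        """Serialize one JSON object per line for storage or later analysis."""
        return "\n".join(json.dumps(asdict(d)) for d in self.entries)
```

An interactive explanation interface could be built on top of `explain`, letting a user ask about any step by number.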

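8. Future Trends

As agent techniques continue to develop, we can expect the following trends:

- Multi-agent collaboration: complex tasks will be completed jointly by multiple specialized agents, each responsible for a specific subtask or domain.

- Continuously learning agents: agents will learn from every interaction and keep improving their knowledge base and decision-making.

- Context-aware agents: agents will better understand and adapt to different environments and social situations.

- Autonomous goal setting: advanced agents will set and adjust their own goals rather than only executing predefined tasks.

- Cross-modal agents: agents will reason and interact seamlessly across text, images, speech, and other modalities.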

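9. Conclusion

Agent techniques in prompt engineering open up a new world of possibilities. By building AI systems that can decide, learn, and adapt on their own, we are changing the nature of human-computer interaction. These techniques can handle more complex tasks and can also create a more natural, more intelligent user experience.

However, as agents grow more complex and autonomous, we also face important challenges around ethics, controllability, and transparency. As developers and researchers, we have a responsibility to design and deploy these systems carefully, so that they benefit people rather than cause harm.

This article is reposted from 芝士AI吃鱼. Author: 芝士AI吃鱼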