
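Building an Interactive Chatbot With Streamlit, LangChain, and Bedrock

This article walks you through building a chatbot with a low-code frontend, LangChain for conversation management, and a Bedrock LLM for response generation.

In the evolving AI landscape, chatbots have become an indispensable tool for improving user engagement and streamlining the delivery of information. This article goes step by step through building an interactive chatbot that uses Streamlit for the frontend, LangChain to orchestrate the interaction, and Anthropic's Claude model on Amazon Bedrock as the large language model (LLM) backend. We will look at the frontend and backend code snippets and explain the key components that make the chatbot work.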

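Core Components

- Streamlit frontend: Streamlit's intuitive interface lets us build a user-friendly, low-code chat interface with little effort. We will look at how the code creates the chat window, handles user input, and displays the chatbot's responses.

- LangChain orchestration: LangChain manages the conversation flow and memory so that the chatbot keeps context and gives relevant responses. We will discuss how LangChain's ConversationSummaryBufferMemory and ConversationChain fit in.

- Bedrock/Claude LLM backend: The LLM backend is where the responses come from. We will see how to use the Claude foundation model on Amazon Bedrock to generate context-aware responses.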
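To make the idea of a summary buffer memory concrete, here is a minimal pure-Python sketch. It is not LangChain's implementation: the class `SummaryBufferSketch`, the word-count tokenizer, and the `naive_summarize` helper are all hypothetical stand-ins chosen for illustration. The sketch keeps recent messages verbatim and folds older ones into a running summary once a token budget is exceeded.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


def count_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())


@dataclass
class SummaryBufferSketch:
    """Toy model of a summary-buffer memory (an assumption, not LangChain's code)."""
    summarize: Callable[[str, List[Tuple[str, str]]], str]  # hypothetical summarizer
    max_token_limit: int
    summary: str = ""
    buffer: List[Tuple[str, str]] = field(default_factory=list)

    def save_context(self, user_text: str, ai_text: str) -> None:
        # Store both sides of the turn, then trim the buffer if it is too large.
        self.buffer.append(("Human", user_text))
        self.buffer.append(("AI", ai_text))
        self._prune()

    def _buffer_tokens(self) -> int:
        return sum(count_tokens(text) for _, text in self.buffer)

    def _prune(self) -> None:
        # Evict the oldest messages until the buffer fits the budget,
        # then fold the evicted messages into the running summary.
        evicted = []
        while self.buffer and self._buffer_tokens() > self.max_token_limit:
            evicted.append(self.buffer.pop(0))
        if evicted:
            self.summary = self.summarize(self.summary, evicted)

    def load_history(self) -> str:
        lines = [f"{role}: {text}" for role, text in self.buffer]
        if self.summary:
            lines.insert(0, f"Summary: {self.summary}")
        return "\n".join(lines)


def naive_summarize(previous: str, evicted: List[Tuple[str, str]]) -> str:
    """Placeholder summarizer: keeps the first three words of each evicted message."""
    pieces = [" ".join(text.split()[:3]) for _, text in evicted]
    return "; ".join(([previous] if previous else []) + pieces)


memory = SummaryBufferSketch(summarize=naive_summarize, max_token_limit=8)
memory.save_context("What is Amazon Bedrock?", "A managed service for foundation models.")
memory.save_context("Which model do we use?", "Anthropic Claude.")
print(memory.load_history())
```

In the real chatbot the summarizer would be an LLM call rather than a truncation rule, which is why ConversationSummaryBufferMemory takes an `llm` argument alongside `max_token_limit`.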

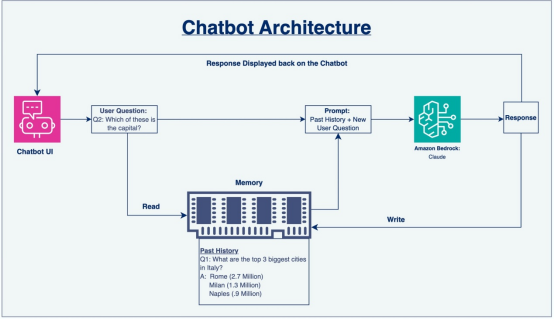

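Figure 1. Chatbot architecture

Conceptual Walkthrough of the Architecture

- User interaction: The user starts the conversation by typing a message into the chat interface created with Streamlit. The message can be a question, a request, or any other input the user wants to provide.

- Input capture and processing: Streamlit's chat input component captures the user's message and passes it to the LangChain framework for further processing.

- Contextualization with LangChain memory: LangChain plays a key role in preserving the context of the conversation. It combines the user's latest input with the relevant conversation history stored in memory, so the chatbot has the information it needs to produce a meaningful, context-appropriate response.

- Calling the LLM: The combined context is then sent to the Bedrock/Claude LLM. The model analyzes the context and generates a response to the user's input.

- Response retrieval: LangChain receives the generated response from the LLM and prepares it for delivery to the user.

- Response display: Finally, Streamlit receives the chatbot's response and displays it in the chat window, so the exchange reads like a natural conversation. This makes for an intuitive, user-friendly experience that encourages further interaction.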
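The six steps above can be sketched as a single function. This is an illustrative outline only: `fake_llm` is a hypothetical stand-in for the Bedrock/Claude call, and `handle_turn` is a name invented here, not part of Streamlit or LangChain.

```python
from typing import Callable, Dict, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for the LLM call; reports how much context it saw."""
    return f"(reply based on {len(prompt.splitlines())} lines of context)"


def handle_turn(user_text: str,
                history: List[Dict[str, str]],
                llm: Callable[[str], str]) -> str:
    # Steps 1-2: the UI has captured user_text and handed it to the orchestrator.
    # Step 3: combine the stored history with the new input.
    context_lines = [f"{m['role']}: {m['text']}" for m in history]
    context_lines.append(f"user: {user_text}")
    prompt = "\n".join(context_lines)

    # Step 4: send the combined context to the LLM.
    reply = llm(prompt)

    # Step 5: record both sides of the turn so the next call sees them.
    history.append({"role": "user", "text": user_text})
    history.append({"role": "assistant", "text": reply})

    # Step 6: the caller (the UI) displays the returned reply.
    return reply


history: List[Dict[str, str]] = []
first = handle_turn("Hello", history, fake_llm)
second = handle_turn("What can you do?", history, fake_llm)
print(first)
print(second)
```

The second call sees three lines of context (the first exchange plus the new message), which is the behavior the memory component is there to provide.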

Code Snippets

Frontend (Streamlit)

```python
import streamlit

import chatbot_backend as demo  # the backend module from the backend section of this article

# Set the title of the chatbot page
streamlit.title("Hi, This is your Chatbot")

# Keep the LangChain memory in the session cache (Session State)
if 'memory' not in streamlit.session_state:
    streamlit.session_state.memory = demo.demo_memory()

# Keep the UI chat history in the session cache (Session State)
if 'chat_history' not in streamlit.session_state:
    streamlit.session_state.chat_history = []

# Re-render the chat history
for message in streamlit.session_state.chat_history:
    with streamlit.chat_message(message["role"]):
        streamlit.markdown(message["text"])

# Chat input box
input_text = streamlit.chat_input("Powered by Bedrock")

if input_text:
    # Show the user's message and record it in the UI history
    with streamlit.chat_message("user"):
        streamlit.markdown(input_text)
    streamlit.session_state.chat_history.append({"role": "user", "text": input_text})

    # Ask the backend for a reply, passing the shared conversation memory
    chat_response = demo.demo_conversation(input_text=input_text,
                                           memory=streamlit.session_state.memory)

    # Show the assistant's reply and record it in the UI history
    with streamlit.chat_message("assistant"):
        streamlit.markdown(chat_response)
    streamlit.session_state.chat_history.append({"role": "assistant", "text": chat_response})
```
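Streamlit reruns the script from top to bottom on every interaction, which is why the frontend stores the history in Session State and re-renders it each time. The following sketch imitates that behavior without Streamlit: a plain dictionary plays the role of `session_state`, `run_script` is a hypothetical function standing in for one script run, and the echo reply is a placeholder for the backend call.

```python
from typing import Dict, List, Optional


def run_script(session_state: Dict[str, list], input_text: Optional[str]) -> List[str]:
    """Simulate one top-to-bottom run of the frontend; returns the lines it would draw."""
    rendered: List[str] = []

    # Initialize the history only on the first run of the session.
    if "chat_history" not in session_state:
        session_state["chat_history"] = []

    # Re-render everything that earlier runs stored.
    for message in session_state["chat_history"]:
        rendered.append(f"{message['role']}: {message['text']}")

    if input_text:
        rendered.append(f"user: {input_text}")
        session_state["chat_history"].append({"role": "user", "text": input_text})

        reply = f"echo: {input_text}"  # placeholder for the backend call
        rendered.append(f"assistant: {reply}")
        session_state["chat_history"].append({"role": "assistant", "text": reply})

    return rendered


state: Dict[str, list] = {}
first_run = run_script(state, "Hi")
second_run = run_script(state, "Bye")
print(second_run)
```

Without the persistent `state` dictionary, the second run would draw only the latest exchange, and the earlier messages would vanish from the chat window.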

Backend (LangChain and LLM)

```python
import time

import boto3
from langchain.chains import ConversationChain
from langchain.memory import ConversationSummaryBufferMemory
from langchain_aws import ChatBedrock


# Build the Bedrock client connection and the chat model (credentials, region, model_id)
def demo_chatbot():
    boto3_session = boto3.Session(
        # Your aws_access_key_id,
        # Your aws_secret_access_key,
        region_name='us-east-1'
    )
    llm = ChatBedrock(
        model_id="anthropic.claude-3-sonnet-20240229-v1:0",
        client=boto3_session.client('bedrock-runtime'),
        model_kwargs={
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": 4096,  # Claude 3 Sonnet returns at most 4096 output tokens
            "temperature": 0.3,
            "top_p": 0.3,
            "stop_sequences": ["\n\nHuman:"]
        }
    )
    return llm


# Create the ConversationSummaryBufferMemory (LLM used for summarizing, plus a token limit)
def demo_memory():
    llm_data = demo_chatbot()
    memory = ConversationSummaryBufferMemory(llm=llm_data, max_token_limit=20000)
    return memory


# Run one conversation turn: input text + memory
def demo_conversation(input_text, memory):
    llm_chain_data = demo_chatbot()

    # Initialize ConversationChain with the LLM and the shared memory
    llm_conversation = ConversationChain(llm=llm_chain_data, memory=memory, verbose=True)

    # The chain's default prompt already labels the input as the human turn,
    # so the raw text is passed through unchanged.
    llm_start_time = time.time()
    chat_reply = llm_conversation.invoke({"input": input_text})
    llm_elapsed_time = time.time() - llm_start_time
    print(f"LLM call took {llm_elapsed_time:.2f}s")

    # invoke() stores this turn in memory itself; calling memory.save_context()
    # here as well would record the same turn twice.
    return chat_reply.get('response', 'No Response')
```
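One design point worth noting: the backend calls `demo_chatbot()` in both `demo_memory()` and `demo_conversation()`, so a new client object is built on every turn. A possible refinement, sketched below under stated assumptions, is to cache the object with `functools.lru_cache`. `FakeLLMClient` and `get_llm` are hypothetical names used so the example runs without AWS credentials; in the real backend the cached function would return the ChatBedrock instance.

```python
from functools import lru_cache


class FakeLLMClient:
    """Hypothetical stand-in for the object returned by demo_chatbot()."""
    instances_created = 0

    def __init__(self, model_id: str) -> None:
        # Count constructions so the effect of caching is visible.
        FakeLLMClient.instances_created += 1
        self.model_id = model_id


@lru_cache(maxsize=1)
def get_llm(model_id: str = "anthropic.claude-3-sonnet-20240229-v1:0") -> FakeLLMClient:
    # Runs once per distinct model_id; later calls return the cached object.
    return FakeLLMClient(model_id)


memory_llm = get_llm()  # what demo_memory() would use
chain_llm = get_llm()   # what demo_conversation() would use
print(memory_llm is chain_llm, FakeLLMClient.instances_created)
```

Inside a Streamlit app, the `st.cache_resource` decorator serves a similar purpose for objects that should be shared across reruns; whether either form of caching is appropriate depends on how credentials and sessions are managed in your deployment.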

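Conclusion

We have covered the basic building blocks of an interactive chatbot built with Streamlit, LangChain, and an LLM backend. This foundation can be extended to many use cases, from customer support automation to personalized learning experiences. Feel free to experiment with, improve, and deploy this chatbot to fit your own needs and scenarios.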

Original title: Building an Interactive Chatbot With Streamlit, LangChain, and Bedrock, by Karan Bansal

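© Copyright belongs to the author. If you reproduce this article, please credit the source; otherwise legal action may be taken.

Last modified: 2024-11-01 14:49:25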
