
Open Contextual RAG: An Open-Source Implementation of Anthropic's Contextual RAG

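I previously introduced a method from Anthropic's research team that significantly improves RAG performance: Contextual RAG (see "Anthropic Proposes Contextual Retrieval to Take RAG Further, Sharply Reducing Retrieval Failure Rates"). The method was described in detail, but the complete implementation source code was not released. The Together AI team has now filled that gap by publishing an open-source reference implementation on GitHub: Open Contextual RAG.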

Recap: What is Contextual RAG?

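Contextual RAG is a chunk-augmentation technique that uses an LLM (Claude, for example) to give each document chunk richer context. Consider how human memory works: when we recall an event, we remember the event itself and also what led up to it and what followed. Contextual RAG aims to give every chunk that kind of surrounding context before it is indexed. Anthropic reported that this substantially improves retrieval: combined with reranking, it reduced the top-20 retrieval failure rate by 67%.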

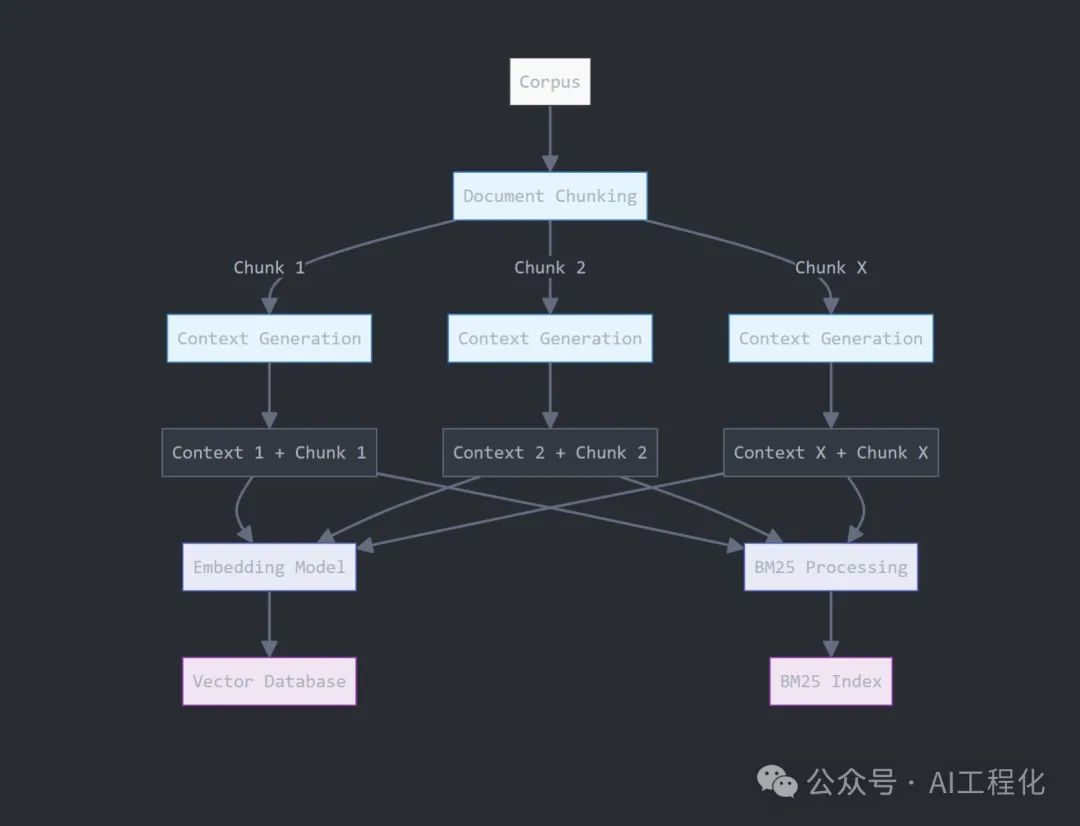

The Contextual RAG workflow is as follows:

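- Context augmentation: for each chunk in a document, the system prepends a short explanatory context that situates the chunk within the whole document. A small, efficient LLM performs this step.

- Hybrid search: chunks are indexed with both sparse (keyword, e.g. BM25) and dense (semantic embedding) representations. This lets retrieval match both the literal wording and the underlying meaning of a query.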

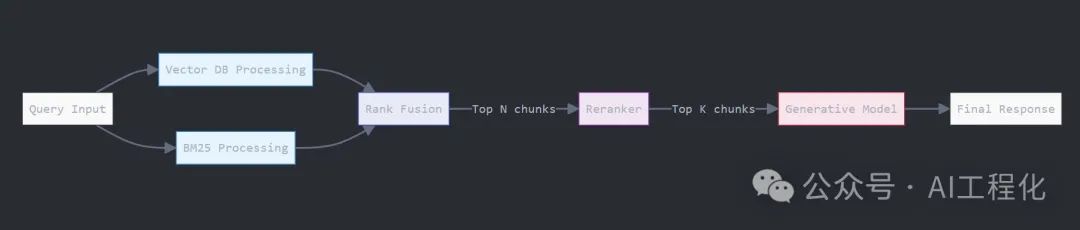

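- Rank fusion: the result lists are merged with Reciprocal Rank Fusion (RRF), which works much like weighing several experts' opinions to find the best answer.

- Reranking: the system retrieves the top 150 chunks, then a dedicated reranking model selects the top 20. This pushes the most relevant information to the front.

- Answer generation: finally, the 20 selected chunks are passed to a large LLM, which generates the final answer.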
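The following is a minimal sketch of the query-time funnel described above: fuse the dense and sparse rankings with RRF, keep the top 150, rerank, and keep the top 20. It assumes both retrievers have already returned ranked lists of chunk ids. `rerank_score` is a hypothetical stand-in for a real reranking model. All names here are illustrative and do not come from Anthropic's or Together's code.

```python
from typing import Callable, Dict, List, Sequence


def rrf_fuse(rankings: Sequence[Sequence[int]], k: int = 60) -> List[int]:
    """Fuse several ranked lists of chunk ids with Reciprocal Rank Fusion."""
    scores: Dict[int, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first
    return sorted(scores, key=lambda d: scores[d], reverse=True)


def retrieve_then_rerank(
    dense_ranking: List[int],
    sparse_ranking: List[int],
    rerank_score: Callable[[int], float],  # hypothetical reranker stand-in
    n_candidates: int = 150,
    n_final: int = 20,
) -> List[int]:
    # 1) Hybrid search: fuse both rankings and keep a shortlist
    candidates = rrf_fuse([dense_ranking, sparse_ranking])[:n_candidates]
    # 2) Reranking: rescore only the shortlist and keep the best n_final
    reranked = sorted(candidates, key=rerank_score, reverse=True)
    return reranked[:n_final]


# Toy usage with tiny cutoffs; the "reranker" here simply prefers larger ids
dense = [0, 1, 2, 3]
sparse = [2, 0, 4]
final = retrieve_then_rerank(dense, sparse, rerank_score=lambda d: d, n_candidates=3, n_final=2)
print(final)
```

The reranker only sees the fused shortlist, so the number of (typically costlier) reranker calls stays bounded no matter how large the corpus is.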

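Open Contextual RAG runs this pipeline over a single Paul Graham essay and uses smaller cutoffs than those above: the top 5 from each retriever, and the top 3 after reranking. The implementation details follow.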

0) Install dependencies

```
!pip install together  # To access open-source LLMs
!pip install --upgrade tiktoken  # To count tokens
!pip install beautifulsoup4  # To scrape the document we will run RAG over
!pip install bm25s  # To implement our keyword (BM25) search
```

1) Indexing phase (one-time):

1. Data processing and chunking
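The chunker in the code below uses fixed-size character windows, and each window starts `chunk_size - overlap` characters after the previous one. The small sketch here mirrors that `create_chunks` logic on a toy string so the overlap is easy to see. The `ValueError` guard is an addition of this sketch and is not in the original.

```python
from typing import List


def create_chunks(document: str, chunk_size: int = 300, overlap: int = 50) -> List[str]:
    # Guard added for this sketch: the step must be positive for range() to advance
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap  # distance between consecutive chunk starts
    return [document[i : i + chunk_size] for i in range(0, len(document), step)]


demo = create_chunks("abcdefghij", chunk_size=4, overlap=1)
print(demo)  # ['abcd', 'defg', 'ghij', 'j']
```

Because the loop runs until the start index passes the end of the text, the final chunk can be a short tail fragment that is already contained in the previous chunk (`'j'` above).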

```python
# Let's download the essay from Paul Graham's website
import requests
from bs4 import BeautifulSoup


def scrape_pg_essay():
    url = 'https://paulgraham.com/foundermode.html'
    try:
        # Send GET request to the URL
        response = requests.get(url)
        response.raise_for_status()  # Raise an error for bad status codes

        # Parse the HTML content
        soup = BeautifulSoup(response.text, 'html.parser')

        # Paul Graham's essays typically have the main content in a font tag
        # You might need to adjust this selector based on the actual HTML structure
        content = soup.find('font')

        if content:
            # Extract and clean the text
            text = content.get_text()
            # Remove extra whitespace and normalize line breaks
            text = ' '.join(text.split())
            return text
        else:
            return "Could not find the main content of the essay."

    except requests.RequestException as e:
        return f"Error fetching the webpage: {e}"


# Scrape the essay
pg_essay = scrape_pg_essay()


def create_chunks(document, chunk_size=300, overlap=50):
    # Fixed-size character windows; consecutive chunks share `overlap` characters
    return [document[i : i + chunk_size] for i in range(0, len(document), chunk_size - overlap)]


chunks = create_chunks(pg_essay, chunk_size=250, overlap=30)

for i, chunk in enumerate(chunks):
    print(f"Chunk {i + 1}: {chunk}")
```

- Generate the contextual chunks with a quantized Llama 3B model (`Llama-3.2-3B-Instruct-Turbo`).
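The code below generates one context per chunk sequentially. Each call is independent, so the calls could also be issued concurrently. The minimal sketch here takes a caller-supplied `generate_fn`, which in the article's setting would be `generate_context`. It uses a hypothetical offline stub, `fake_generate`, so it runs without an API key. `ThreadPoolExecutor.map` returns results in input order, so each context still lines up with its chunk. Concurrency is an addition of this sketch and is not part of Open Contextual RAG. Real API rate limits may also cap `max_workers`.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def build_contextual_chunks(
    document: str,
    chunks: List[str],
    generate_fn: Callable[[str], str],
    prompt_template: str,
    max_workers: int = 8,
) -> List[str]:
    # Same placeholders as the article's CONTEXTUAL_RAG_PROMPT
    prompts = [prompt_template.format(WHOLE_DOCUMENT=document, CHUNK_CONTENT=c) for c in chunks]
    # Independent LLM calls run in parallel; map() preserves input order
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        contexts = list(pool.map(generate_fn, prompts))
    # Contextual chunk = generated context + a space + the original chunk text
    return [ctx + " " + chunk for ctx, chunk in zip(contexts, chunks)]


def fake_generate(prompt: str) -> str:
    # Hypothetical stand-in for the LLM: echoes the chunk part of the prompt
    return "About: " + prompt.rsplit("Chunk: ", 1)[-1]


template = "Doc: {WHOLE_DOCUMENT}\nChunk: {CHUNK_CONTENT}"
contextual = build_contextual_chunks("alpha beta", ["alpha", "beta"], fake_generate, template, max_workers=2)
print(contextual)
```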

```python
import os
from typing import List

from together import Together

# Paste in your Together AI API key or load it from the environment
TOGETHER_API_KEY = os.environ.get("TOGETHER_API_KEY")
client = Together(api_key=TOGETHER_API_KEY)

# We want to generate a snippet explaining the relevance/importance of the chunk
# with the full document in mind.
CONTEXTUAL_RAG_PROMPT = """
Given the document below, we want to explain what the chunk captures in the document.

{WHOLE_DOCUMENT}

Here is the chunk we want to explain:

{CHUNK_CONTENT}

Answer ONLY with a succinct explanation of the meaning of the chunk in the context of the whole document above.
"""


# First we will just generate the prompts and examine them
def generate_prompts(document: str, chunks: List[str]) -> List[str]:
    prompts = []
    for chunk in chunks:
        prompt = CONTEXTUAL_RAG_PROMPT.format(WHOLE_DOCUMENT=document, CHUNK_CONTENT=chunk)
        prompts.append(prompt)
    return prompts


prompts = generate_prompts(pg_essay, chunks)


def generate_context(prompt: str):
    """
    Generates a contextual response based on the given prompt using the specified language model.

    Args:
        prompt (str): The input prompt to generate a response for.

    Returns:
        str: The generated response content from the language model.
    """
    response = client.chat.completions.create(
        model="meta-llama/Llama-3.2-3B-Instruct-Turbo",
        messages=[{"role": "user", "content": prompt}],
        temperature=1,
    )
    return response.choices[0].message.content


# Let's generate the entire list of contextual chunks (context + original chunk)
contextual_chunks = [generate_context(prompts[i]) + ' ' + chunks[i] for i in range(len(chunks))]

# We should have one contextual chunk for each original chunk
len(contextual_chunks), len(contextual_chunks) == len(chunks)
```

- Vector index generation

Embed the contextual chunks above into a vector index using bge-large-en-v1.5.

```python
from typing import List

import numpy as np
from sklearn.metrics.pairwise import cosine_similarity


def generate_embeddings(input_texts: List[str], model_api_string: str) -> List[List[float]]:
    """Generate embeddings from Together python library.

    Args:
        input_texts: a list of string input texts.
        model_api_string: str. An API string for a specific embedding model of your choice.

    Returns:
        embeddings_list: a list of embeddings. Each element corresponds to each input text.
    """
    outputs = client.embeddings.create(
        input=input_texts,
        model=model_api_string,
    )
    return [x.embedding for x in outputs.data]


contextual_embeddings = generate_embeddings(contextual_chunks, "BAAI/bge-large-en-v1.5")


def vector_retreival(query: str, top_k: int = 5, vector_index: np.ndarray = None) -> List[int]:
    """
    Retrieve the top-k most similar items from an index based on a query.

    Args:
        query (str): The query string to search for.
        top_k (int, optional): The number of top similar items to retrieve. Defaults to 5.
        vector_index (np.ndarray, optional): The index array containing embeddings to search against. Defaults to None.

    Returns:
        List[int]: A list of indices corresponding to the top-k most similar items in the index.
    """
    query_embedding = generate_embeddings([query], 'BAAI/bge-large-en-v1.5')[0]
    similarity_scores = cosine_similarity([query_embedding], vector_index)
    # Sort by descending similarity and keep the first top_k indices
    return list(np.argsort(-similarity_scores)[0][:top_k])


vector_retreival(query="What are 'skip-level' meetings?", top_k=5, vector_index=contextual_embeddings)
```

With that, we have vector retrieval in place.
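At its core, `vector_retreival` computes cosine similarity and sorts in descending order. The sketch below reproduces that ranking with NumPy alone, without the embedding API or scikit-learn. The toy 2-D vectors are assumptions standing in for real bge-large-en-v1.5 embeddings, which are 1024-dimensional.

```python
import numpy as np


def cosine_top_k(query_vec, index_vecs, top_k=5):
    q = np.asarray(query_vec, dtype=float)
    m = np.asarray(index_vecs, dtype=float)
    # L2-normalize so that a dot product equals cosine similarity
    q = q / np.linalg.norm(q)
    m = m / np.linalg.norm(m, axis=1, keepdims=True)
    scores = m @ q
    # argsort on negated scores gives descending similarity
    return [int(i) for i in np.argsort(-scores)[:top_k]]


index = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy "embeddings" for 3 chunks
top = cosine_top_k([1.0, 0.1], index, top_k=2)
print(top)
```

For a larger corpus, the normalized index matrix could be computed once and reused across queries, rather than being normalized on every call.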

- Text (BM25) index generation

```python
from typing import List

import bm25s

# Create the BM25 model and index the corpus
retriever = bm25s.BM25(corpus=contextual_chunks)
retriever.index(bm25s.tokenize(contextual_chunks))

# Query the corpus and get top-k results
query = "What are 'skip-level' meetings?"
results, scores = retriever.retrieve(bm25s.tokenize(query), k=5)

for doc in results[0]:
    print(f"Chunk Index {contextual_chunks.index(doc)} : {doc}")


def bm25_retreival(query: str, k: int, bm25_index) -> List[int]:
    """
    Retrieve the top-k document indices based on the BM25 algorithm for a given query.

    Args:
        query (str): The search query string.
        k (int): The number of top documents to retrieve.
        bm25_index: The BM25 index object used for retrieval.

    Returns:
        List[int]: A list of indices of the top-k documents that match the query.
    """
    results, scores = bm25_index.retrieve(bm25s.tokenize(query), k=k)
    # Map the returned chunk texts back to their positions in contextual_chunks
    return [contextual_chunks.index(doc) for doc in results[0]]


bm25_retreival(query="What are 'skip-level' meetings?", k=5, bm25_index=retriever)
```

With that, we have keyword (BM25) retrieval in place.
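The sketch below shows, from scratch, the kind of score a BM25 index computes. It uses the classic term-frequency saturation (`k1`) and length normalization (`b`) terms with a Lucene-style IDF. It is an illustration only: it uses plain lowercase whitespace tokenization, and bm25s's own tokenizer and default scoring variant may differ in details.

```python
import math
from collections import Counter
from typing import List


def bm25_scores(query: str, docs: List[str], k1: float = 1.5, b: float = 0.75) -> List[float]:
    tokenized = [d.lower().split() for d in docs]  # toy tokenizer (assumption)
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs  # average document length
    # Document frequency: in how many documents each term appears
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            freq = tf[term]
            # Saturating term frequency, normalized by document length
            score += idf * freq * (k1 + 1) / (freq + k1 * (1 - b + b * len(toks) / avgdl))
        scores.append(score)
    return scores


docs = ["skip level meetings are common", "founders run companies", "managers hold meetings"]
scores = bm25_scores("skip level meetings", docs)
ranking = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
print(ranking)
```

Rare terms such as "skip" and "level" get a higher IDF than "meetings", which appears in two of the three documents. That is why the first document ranks well above the third.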

2) Query phase:

- Run vector and BM25 retrieval, then fuse the two rankings with the RRF implementation below.
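For reference, here is the standard RRF score. For a document $d$, it sums over each ranked list $r$ in which $d$ appears the reciprocal of its rank, offset by a constant $K$ (60 by default in the code below):

$$
\mathrm{RRF}(d) = \sum_{r \in R} \frac{1}{K + \mathrm{rank}_r(d)}
$$

For example, with $K = 60$, a chunk ranked 1st by vector search and 3rd by BM25 scores $1/61 + 1/63 \approx 0.0323$. A chunk ranked 1st by vector search alone scores only $1/61 \approx 0.0164$. Agreement between the two retrievers is therefore rewarded, and a chunk missing from a list simply receives no contribution from that list.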

```python
from collections import defaultdict


def reciprocal_rank_fusion(*list_of_list_ranks_system, K=60):
    """
    Fuse rank from multiple IR systems using Reciprocal Rank Fusion.

    Args:
        *list_of_list_ranks_system: Ranked results from different IR systems.
        K (int): A constant used in the RRF formula (default is 60).

    Returns:
        A tuple of (list of (document, score) pairs sorted by descending score,
        list of documents in that same order).
    """
    # Dictionary to store RRF mapping
    rrf_map = defaultdict(float)

    # Calculate RRF score for each result in each list
    for rank_list in list_of_list_ranks_system:
        for rank, item in enumerate(rank_list, 1):
            rrf_map[item] += 1 / (rank + K)

    # Sort items based on their RRF scores in descending order
    sorted_items = sorted(rrf_map.items(), key=lambda x: x[1], reverse=True)

    # Return (documents with scores, documents only), both in descending score order
    return sorted_items, [item for item, score in sorted_items]


# Example ranked lists from different sources
vector_top_k = vector_retreival(query="What are 'skip-level' meetings?", top_k=5, vector_index=contextual_embeddings)
bm25_top_k = bm25_retreival(query="What are 'skip-level' meetings?", k=5, bm25_index=retriever)

# Combine the lists using RRF
hybrid_top_k = reciprocal_rank_fusion(vector_top_k, bm25_top_k)

for index in hybrid_top_k[1]:
    print(f"Chunk Index {index} : {contextual_chunks[index]}")
```

- Improve retrieval quality with a reranker

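Pass the documents returned by the hybrid search (the top 6 in this example) to the reranker, which orders them by relevance to the query. We keep the top 3.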

```python
query = "What are 'skip-level' meetings?"  # we keep the same query - can change if we want

# Turn the fused chunk indices into the chunk texts the reranker expects
hybrid_top_k_docs = [contextual_chunks[index] for index in hybrid_top_k[1]]

response = client.rerank.create(
    model="Salesforce/Llama-Rank-V1",
    query=query,
    documents=hybrid_top_k_docs,
    top_n=3,  # we only want the top 3 results
)

for result in response.results:
    print(f"Document: {hybrid_top_k_docs[result.index]}")
    print(f"Relevance Score: {result.relevance_score}\n\n")

# Document: This chunk refers to "skip-level" meetings, which are a key characteristic of founder mode, where the CEO engages directly with the company beyond their direct reports. This contrasts with the "manager mode" of addressing company issues, where decisions are made perfunctorily via a hierarchical system, to which founders instinctively rebel. that there's a name for it. And once you abandon that constraint there are a huge number of permutations to choose from.For example, Steve Jobs used to run an annual retreat for what he considered the 100 most important people at Apple, and these wer
# Relevance Score: 0.9733742140554578

# Document: This chunk discusses the shift in company management away from the "manager mode" that most companies follow, where CEOs engage with the company only through their direct reports, to "founder mode", where CEOs engage more directly with even higher-level employees and potentially skip over direct reports, potentially leading to "skip-level" meetings. ts of, it's pretty clear that it's going to break the principle that the CEO should engage with the company only via his or her direct reports. "Skip-level" meetings will become the norm instead of a practice so unusual that there's a name for it. An
# Relevance Score: 0.7481956787777269

# Document: This chunk explains that founder mode, a hypothetical approach to running a company by its founders, will differ from manager mode in that founders will engage directly with the company, rather than just their direct reports, through "skip-level" meetings, disregarding the traditional principle that CEOs should only interact with their direct reports, as managers do. can already guess at some of the ways it will differ.The way managers are taught to run companies seems to be like modular design in the sense that you treat subtrees of the org chart as black boxes. You tell your direct reports what to do, and it's
# Relevance Score: 0.7035533028052896

# Let's add the top 3 documents to a string
retreived_chunks = ''
for result in response.results:
    retreived_chunks += hybrid_top_k_docs[result.index] + '\n\n'

print(retreived_chunks)
# (prints the same three chunks shown above, separated by blank lines)
```

- Generate the final answer with Llama 3.1 405B

Pass the 3 reranked chunks to the LLM to get the final answer.
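The code below concatenates the three chunks directly into the user message. One possible variant is sketched here as a hypothetical helper, `build_messages`, which is not part of Open Contextual RAG. It numbers each chunk and asks the model to stay within the supplied context. The returned list has the same `role`/`content` shape that the code passes to `client.chat.completions.create`.

```python
from typing import Dict, List


def build_messages(query: str, chunks: List[str]) -> List[Dict[str, str]]:
    # Number each retrieved chunk so the model can tell the sources apart
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return [
        {"role": "system", "content": "You are a helpful chatbot. Answer using only the provided context."},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {query}"},
    ]


msgs = build_messages("What are 'skip-level' meetings?", ["first chunk", "second chunk"])
print(msgs[1]["content"])
```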

```python
# Generate the final answer from the top 3 reranked chunks
query = "What are 'skip-level' meetings?"

response = client.chat.completions.create(
    model="meta-llama/Meta-Llama-3.1-405B-Instruct-Turbo",
    messages=[
        {"role": "system", "content": "You are a helpful chatbot."},
        {"role": "user", "content": f"Answer the question: {query}. Here is relevant information: {retreived_chunks}"},
    ],
)

response.choices[0].message.content

# '"Skip-level" meetings refer to a management practice where a CEO or high-level executive engages directly with employees who are not their direct reports, bypassing the traditional hierarchical structure of the organization. This approach is characteristic of "founder mode," where the CEO seeks to have a more direct connection with the company beyond their immediate team. In contrast to the traditional "manager mode," where decisions are made through a hierarchical system, skip-level meetings allow for more open communication and collaboration between the CEO and various levels of employees. This approach is often used by founders who want to stay connected to the company\'s operations and culture, and to foster a more flat and collaborative organizational structure.'
```

Summary

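The Together team's detailed implementation shows every step of Contextual RAG concretely. That makes it straightforward to port the technique into our own systems and quickly improve RAG performance.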

Reposted from AI工程化 (AI Engineering); author: ully
